Right, so people are slowly making their way over to Elasticsearch, and apart from Deebs and me there aren't many usage stats out there yet.

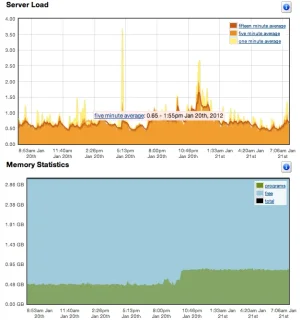

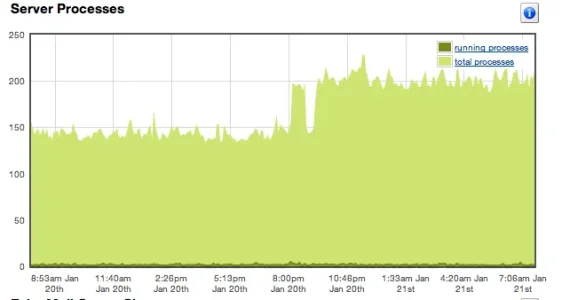

So, the idea of this thread is to see what impact Elasticsearch search has on the server: how much disk space the index takes up and how much RAM it is given.

To find out the size on disk, navigate to your Elasticsearch index directory (/var/elasticsearch if you followed the guide in this forum) and run `du -ach`. The last line of the output is the total.
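If you can't run `du` (or want to script the check), here is a rough Python equivalent. It's a minimal sketch, assuming the default /var/elasticsearch path from the guide; the helper names `dir_size_bytes` and `human` are just made up for illustration. Note that `du` counts allocated disk blocks while this sums file sizes, so the numbers can differ a little.

```python
import os
import sys


def dir_size_bytes(path: str) -> int:
    """Sum the apparent sizes of all regular files under `path`, recursively."""
    total = 0
    for root, _dirs, files in os.walk(path):
        for name in files:
            full = os.path.join(root, name)
            # Skip symlinks so the same data is not counted twice.
            if not os.path.islink(full):
                total += os.path.getsize(full)
    return total


def human(n: float) -> str:
    """Format a byte count with binary (1024-based) units, roughly like du -h."""
    for unit in ("B", "K", "M", "G", "T"):
        if n < 1024:
            return f"{n:.1f}{unit}"
        n /= 1024
    return f"{n:.1f}P"


if __name__ == "__main__":
    # Assumed default path from the forum guide; pass another path as the first argument.
    path = sys.argv[1] if len(sys.argv) > 1 else "/var/elasticsearch"
    print(human(dir_size_bytes(path)), "total")
```

Run it as `python3 dirsize.py /path/to/your/index` (the script name is arbitrary).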

www.p8ntballer-forums.com

Posts Indexed: ~1.2 million

Size on Disk: 925M Total

Min/Max RAM Allocated in config: 512MB/2048MB

http://forums.freddyshouse.com/

Posts Indexed: ~4 million

Size on Disk: 2.9GB Total

Min/Max RAM Allocated in config: 4096MB/4096MB
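To compare forums of different sizes, it can help to divide the index size by the number of posts. A quick sketch using the two sets of figures above, assuming `du -h` reports binary units (M = 1024² bytes, G = 1024³ bytes). Post counts are approximate, so treat the results as ballpark only.

```python
MIB = 1024 ** 2
GIB = 1024 ** 3


def bytes_per_post(size_bytes: float, posts: int) -> float:
    """Average index size on disk per indexed post."""
    return size_bytes / posts


# Figures from the posts above (sizes assumed to be du's binary units).
p8 = bytes_per_post(925 * MIB, 1_200_000)   # www.p8ntballer-forums.com
fh = bytes_per_post(2.9 * GIB, 4_000_000)   # forums.freddyshouse.com

print(f"p8ntballer-forums: ~{p8:.0f} bytes/post")
print(f"freddyshouse: ~{fh:.0f} bytes/post")
```

Both work out to roughly 800 bytes per post, which gives a rough way to estimate disk usage for your own post count.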