Hello,

Since the AI hype started, I have noticed more than once that companies crawl our forum in a way that amounts almost to a DDoS. We are a small hobby project, and these requests (which often do not follow robots.txt directives) eat up our bandwidth.

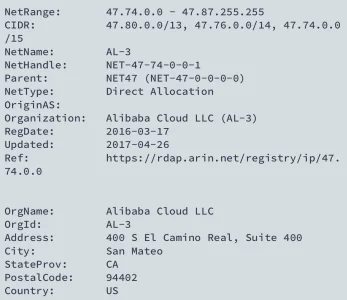

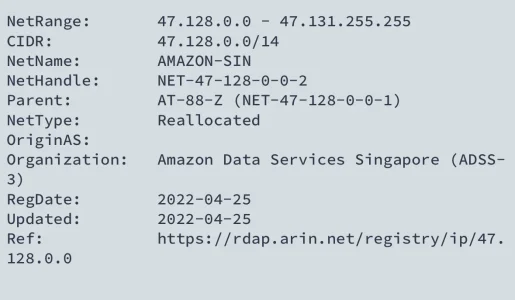

Amaz*n, Faceb**k, Huaw*i: I was able to block them more or less successfully, but for a few days now we have been getting another wave of requests (yesterday they crossed the 10,000-query mark, so I had to take the forum offline for the night). What frustrated me most was that at least one of them ignored the robots.txt settings.

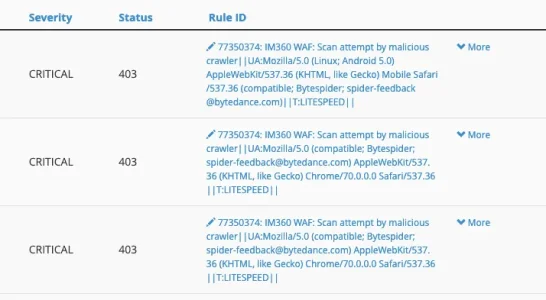

The requests originate from two American ISPs (we host almost only German content). The user agent string looks like a normal browser request, e.g.

but in such a mass it is not. Any idea how to stop it?

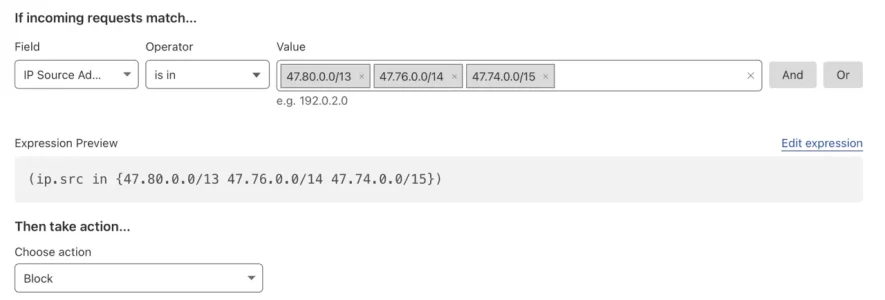

I miss any clear identification for that. Even the IPs come from five or six group, like 277.1xx.xxx.xxx (invalid example, I know, just to tell you what I can identify) and I don't want to blacklist that whole group of IPs.

since the AI hype started, I noticed more than once that companies started to search our forum in a way that works almost as a DDOS. We are a small hobby project, and those request (that often do not follow robots.txt directives) block our bandwidth.

Amaz*n, Faceb**k, Huaw*i - I could block them more or less successful, but since a few days we get request (yesterday they crossed the 10,000 queries border, so I had to set the forum offline for the night). What frustrated me most was that at least one of the ignored the robots.txt setting.

The requests originate from two American ISPs (we host almost only German content). The user agent string looks like a normal browser request, e.g.

Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/68.0.3440.91 Safari/537.36

but in such a mass it is not. Any idea how to stop it?

I miss any clear identification for that. Even the IPs come from five or six group, like 277.1xx.xxx.xxx (invalid example, I know, just to tell you what I can identify) and I don't want to blacklist that whole group of IPs.
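To make those IP groups visible in numbers, one option is to count requests per network prefix. The following is a minimal sketch in Python, assuming an access log in the common/combined format where the client IP is the first whitespace-separated field. The function name `count_by_prefix` and the /16 grouping are hypothetical choices for illustration, not something the forum software provides. The sample lines use reserved documentation addresses.

```python
import ipaddress
from collections import Counter


def count_by_prefix(log_lines, prefix_len=16):
    """Count requests per IPv4 network of the given prefix length.

    Hypothetical helper: assumes the client IP is the first
    whitespace-separated field of each log line.
    """
    counts = Counter()
    for line in log_lines:
        fields = line.split(maxsplit=1)
        if not fields:
            continue  # skip empty lines
        try:
            ip = ipaddress.ip_address(fields[0])
        except ValueError:
            continue  # skip lines whose first field is not an IP
        if ip.version != 4:
            continue  # this sketch only groups IPv4 addresses
        # strict=False masks off the host bits, e.g. 192.0.2.10 -> 192.0.0.0/16
        net = ipaddress.ip_network(f"{ip}/{prefix_len}", strict=False)
        counts[net] += 1
    return counts


# Usage with made-up sample lines (documentation address ranges)
sample = [
    '192.0.2.10 - - [01/Jan/2025:00:00:01 +0000] "GET / HTTP/1.1" 200 512',
    '192.0.2.77 - - [01/Jan/2025:00:00:02 +0000] "GET /forum HTTP/1.1" 200 512',
    '198.51.100.3 - - [01/Jan/2025:00:00:03 +0000] "GET / HTTP/1.1" 200 512',
    "garbage line",
]
counts = count_by_prefix(sample)
for net, n in counts.most_common():
    print(net, n)
# 192.0.0.0/16 2
# 198.51.0.0/16 1
```

A list like this shows which ranges carry most of the traffic before deciding whether rate limiting or blocking a range is acceptable.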