Mr Lucky

Well-known member

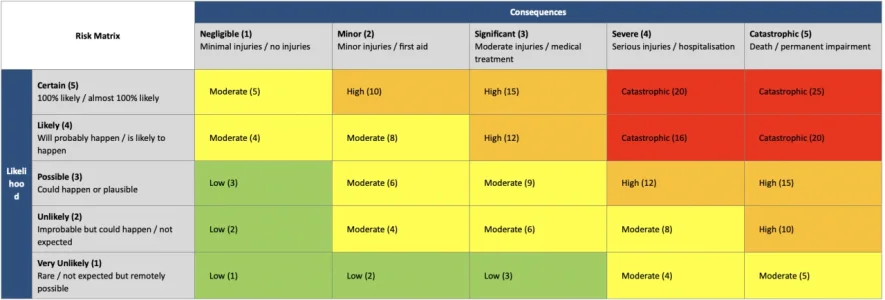

Someone please correct me if I’m wrong, but my understanding is that being able to moderate direct messages would go a long way towards achieving a low-risk assessment under the Online Safety Act.

My current risk assessment shows direct messages to have the highest risk level.

Currently, a moderator without database access can only see other members' direct messages by using a "login as user" add-on. However, checking every member individually would be impractical in most cases.

So this suggestion is for a log of all direct messages, viewable by moderators with the appropriate permission, so that they can be checked for anything harmful.

Ideally, there would also be an option to log only DMs involving members identified as under 18 (based on a user field). EDIT: However, this only addresses one or two of the 17 listed harms.
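As a rough illustration of the under-18 option, here is a minimal sketch that keeps only messages where the sender or any recipient is under 18 according to a date-of-birth user field. The `DirectMessage` type, the `dob_field` mapping and the `treat_unknown_as_minor` switch are all hypothetical; this is not how XenForo actually stores or exposes conversations.

```python
from dataclasses import dataclass
from datetime import date

ADULT_AGE = 18


@dataclass(frozen=True)
class DirectMessage:
    # Hypothetical minimal shape of a DM, for this sketch only.
    message_id: int
    conversation_id: int
    sender_id: int
    recipient_ids: frozenset[int]
    text: str


def age_on(dob: date, today: date) -> int:
    """Age in whole years on `today` for someone born on `dob`."""
    had_birthday = (today.month, today.day) >= (dob.month, dob.day)
    return today.year - dob.year - (0 if had_birthday else 1)


def involves_minor(msg: DirectMessage, dob_field: dict[int, date], today: date,
                   treat_unknown_as_minor: bool = False) -> bool:
    """True if the sender or any recipient is under ADULT_AGE.

    `dob_field` maps user_id -> date of birth (a hypothetical user field).
    Members with no value count as minors only if treat_unknown_as_minor is set.
    """
    for uid in {msg.sender_id, *msg.recipient_ids}:
        dob = dob_field.get(uid)
        if dob is None:
            if treat_unknown_as_minor:
                return True
        elif age_on(dob, today) < ADULT_AGE:
            return True
    return False


def minors_only_log(messages: list[DirectMessage], dob_field: dict[int, date],
                    today: date, treat_unknown_as_minor: bool = False) -> list[DirectMessage]:
    """Keep only messages involving at least one (possible) minor, in input order."""
    return [m for m in messages
            if involves_minor(m, dob_field, today, treat_unknown_as_minor)]
```

Whether members with no date of birth on file should count as minors is a policy decision; the default here (not counting them) is the more permissive choice, and a cautious site might prefer to flip it.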

This would essentially extend the site admin's existing ability to view the contents of xf_conversation_message to moderators with the relevant permission, but in the more convenient form of a log in the ACP.
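A minimal sketch of what such a log could look like, using an in-memory SQLite table as a stand-in for xf_conversation_message. The column list is a simplified assumption (check your own installation's schema), and the `viewDirectMessageLog` permission name is hypothetical. A real add-on would be written in PHP against XenForo's own database layer rather than Python.

```python
import sqlite3

# Hypothetical permission name; not an existing XenForo permission.
LOG_PERMISSION = "viewDirectMessageLog"

# Simplified, ASSUMED subset of xf_conversation_message; the real table has more columns.
SCHEMA = """
CREATE TABLE xf_conversation_message (
    message_id      INTEGER PRIMARY KEY,
    conversation_id INTEGER NOT NULL,
    message_date    INTEGER NOT NULL,  -- Unix timestamp
    user_id         INTEGER NOT NULL,
    username        TEXT    NOT NULL,
    message         TEXT    NOT NULL
);
"""

# Newest first; message_id breaks ties between messages sent in the same second.
LOG_QUERY = """
SELECT message_id, conversation_id, message_date, username, message
FROM xf_conversation_message
ORDER BY message_date DESC, message_id DESC
LIMIT ? OFFSET ?
"""


def fetch_dm_log_page(conn: sqlite3.Connection, viewer_permissions: set[str],
                      page: int = 1, per_page: int = 20) -> list[tuple]:
    """Return one page (1-based) of the DM log if the viewer holds the log permission."""
    if LOG_PERMISSION not in viewer_permissions:
        raise PermissionError("viewer lacks permission to view the DM log")
    if page < 1 or per_page < 1:
        raise ValueError("page and per_page must be positive")
    offset = (page - 1) * per_page
    return conn.execute(LOG_QUERY, (per_page, offset)).fetchall()
```

Paging with LIMIT/OFFSET keeps each ACP page small; for a very large message table, keyset pagination on (message_date, message_id) would scale better.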
