digitalpoint

Well-known member

@Kirby

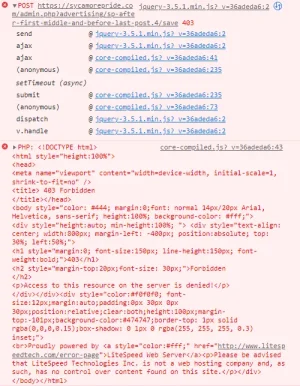

BTW, this is the implementation I'm currently testing. I think it maintains backward compatibility and hopefully solves all the problems. (Again, I'm not in a position to change XenForo's fundamentals myself, so I'm not trying to deal with the "should GET requests even have CSRF tokens?" issue.)


JavaScript:

```javascript
// Wrap XF.config so that tagged links are updated whenever the CSRF token changes.
XF.config = new Proxy(XF.config, {
	set: function(object, property, value) {
		object[property] = value;

		// Only react to the token itself (assumed to live at XF.config.csrf),
		// not to every property written to XF.config.
		if (property === 'csrf') {
			$('.has-csrf').each(function() {
				let url = new URL($(this).attr("href"), XF.config.url.fullBase);
				url.searchParams.set("t", value);
				$(this).attr("href", url.toString());
			});
		}

		return true;
	}
});
```

It's a little annoying to have to explicitly tag the links it should apply to by adding the `has-csrf` class, but there aren't a crazy number of them.

This way, no JavaScript needs to run on click, and it doesn't get applied to links it may not be intended for.
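For anyone who wants to poke at the two moving parts without a XenForo page loaded, here is a rough sketch that pulls them apart: the URL rewrite as a plain function, and the `set` trap on a stand-in object. The names `withCsrfToken` and `watchCsrf` are made up for illustration, the `example.com` URLs are placeholders, and the idea that the token is stored under a `csrf` property is an assumption, not something confirmed against XenForo's source.

```javascript
// Hypothetical helper: return href with its "t" query parameter set to token.
// Relative hrefs are resolved against base, the same way the snippet above
// resolves them against XF.config.url.fullBase.
function withCsrfToken(href, base, token) {
  const url = new URL(href, base);
  // set() replaces an existing "t" instead of appending a second one.
  url.searchParams.set("t", token);
  return url.toString();
}

// Hypothetical stand-in for wrapping XF.config: onChange is called only when
// the "csrf" property (an assumed name) is written.
function watchCsrf(config, onChange) {
  return new Proxy(config, {
    set(target, property, value) {
      target[property] = value;
      if (property === "csrf") {
        onChange(value);
      }
      return true; // report the assignment as successful
    }
  });
}

// Usage: record every token the proxy reports.
const seen = [];
const config = watchCsrf({ csrf: "old" }, (token) => seen.push(token));
config.csrf = "new"; // reported to the callback
config.other = 1;    // stored, but not reported

const rewritten = withCsrfToken("/logout/?t=old", "https://example.com/community/", seen[0]);
```

One thing to be aware of: `URLSearchParams` re-serializes the whole query string, so characters such as commas in a token come out percent-encoded in the rewritten `href`.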