[TAC] Bot Arrestor [Paid] 2.0.12

- Thread starter tenants

tenants

Well-known member

tenants updated DeDos - Anti DOS for spam bot/scrapers with a new update entry:

fixes

- fixes related to JS detection.

- removed XenForo search from the detection area (it is never crawled by spam bots / scrapers)

tenants

Well-known member

@Case can you confirm whether the search issue is still there?

- I've removed search as a detection area. Bots never used it anyway (they hit index, threads, posts, forum, member profiles, register, login, rss and not much else), so there was no point in keeping it as a detection area.

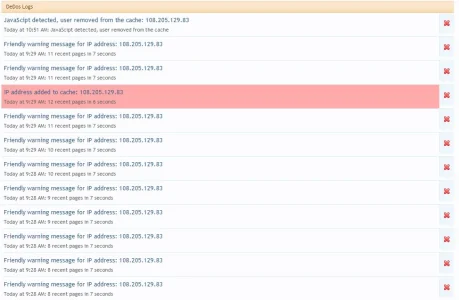

Even humans who hammer your site and get locked out with a 401 will now be automatically redirected and removed from the 401 cache (and prevented from being re-added for that session). This appears as a flashing warning in your logs to draw your attention to it (so if you've used harsh DOS conditions, it should be fairly apparent).

So you can now test as a logged-out user: go to a thread and refresh 10 times quickly (you will see the 401 flash, and then you will be redirected to the original page). Then check your DeDos logs.

That's fixed the search error.

XxUnkn0wnxX

Active member

I'm a bit confused by some of these settings:

I get confused by the friendly and malicious DOS settings.

Do the friendly settings always have to be lower than the malicious ones?

I did a test and I could open around 14-16 pages within 10 seconds,

but so can bots, and they can probably do more within 10 seconds.

So I am a bit unsure how to detect humans and block bots with the time range / request limits.

Yes, if a human opens 14-16 pages within 10 seconds or less, I want them to get the friendly warning message and that's all. But if they keep doing it non-stop on purpose, I want them blocked; the same goes for bots.

I am thinking no more than 11 requests within 8-10 seconds for humans.

But if they keep doing it, I want them to get blocked for 24 hours; the same goes for bots.

And what is the difference between Avoid Cache For Logged in users and Avoid All?

I got really confused by this one.

I also want registered / logged-in users to get the same friendly warning, but that's it: they should never be added to the 24-hour IP ban list.

So could you please explain this a little further for me?

tenants

Well-known member

There are 3 things a human might observe with this add-on:

1 They don't notice anything (the DeDos settings are fair enough that they never bump into any DeDos messages). This is the desired outcome.

2 They receive a friendly warning message, which tells them to stop hammering the site and then redirects them to their original page after 10 seconds.

3 They receive a 401 unauthorised message, telling them that they are temporarily locked out of the site (since they have been detected as malicious and added to the DeDos lockout cache).

Obviously, it is to the user's advantage to see the friendly warning message before they get locked out of the site for hammering. For this reason, the friendly settings should trigger on fewer page views.

For instance, 8 pages (in x seconds).

The second thing to point out is that you still want to catch spam bots / scrapers. The bots won't care if they see a friendly message, but humans will.

So, to catch the bots and avoid humans, we should use more pages for the malicious settings (greater than 8, for instance 9 pages or more in y seconds). That way a human will always bump into the friendly warning message before they get locked out by the malicious settings.

Thirdly, you will want the malicious settings to trigger within a smaller time range. The defaults are:

Friendly settings: 8 pages in 7 seconds

Malicious settings: 9 pages in 6 seconds

This means that to see the friendly warning message, a user has to hit 8 pages in 7 seconds or less. They could hit 8 pages in 6 seconds or even 5 seconds, and they would still be shown the friendly warning message.

However, if they ignore the message and, on the 9th page, have requested all 9 pages in 6 seconds or less, they will be added to the cache and locked out of the site.

Once a user is added to the cache they are blocked (it's a cache used for locking IPs out).
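To make the two thresholds concrete, here is a minimal Python sketch of the check described above, assuming a simple per-session list of request timestamps. It is not the add-on's code (DeDos is a XenForo add-on written in PHP); `Visitor`, `hit_limit` and the returned labels are hypothetical names used only for illustration.

```python
from collections import deque

# Default thresholds quoted above: (pages, seconds).
FRIENDLY = (8, 7)
MALICIOUS = (9, 6)


def hit_limit(timestamps, pages, seconds):
    """True if the most recent `pages` requests all fall within `seconds`."""
    if len(timestamps) < pages:
        return False
    recent = list(timestamps)[-pages:]
    return recent[-1] - recent[0] <= seconds


class Visitor:
    """Hypothetical per-session request history (an assumption, not DeDos itself)."""

    def __init__(self):
        # Keep only as many timestamps as the largest rule needs.
        self.timestamps = deque(maxlen=max(FRIENDLY[0], MALICIOUS[0]))

    def request(self, now):
        """Record a page request at time `now` (in seconds) and classify it."""
        self.timestamps.append(now)
        if hit_limit(self.timestamps, *MALICIOUS):
            return "locked_out"        # would be added to the lockout cache
        if hit_limit(self.timestamps, *FRIENDLY):
            return "friendly_warning"
        return "ok"
```

Because the malicious rule needs one more page than the friendly rule, a visitor in this sketch always reaches the friendly warning on the 8th fast request before the 9th can lock them out.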

I try to avoid catching humans regardless, since, as you mentioned, some humans can do things quite fast. For this reason, we're concentrating on stopping spam bots and scrapers while avoiding detecting humans.

To avoid detecting humans, there are 3 options:

- Avoid Cache for Logged In Users

- Avoid All DeDos for Logged In Users

- Automatically Remove JavaScript Users From Cache

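The 1st option (Avoid Cache for Logged In Users) speaks for itself: it simply prevents logged-in users from ever getting locked out of your site. However, they will still see the friendly warning messages if they hammer your site.

The 2nd option (Avoid All DeDos for Logged In Users) allows members to avoid getting locked out and also prevents them from getting the friendly warning messages. This means DeDos operates only on logged-out users.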

The 3rd option (Automatically Remove JavaScript Users From Cache) is quite important. Even if your members / users are logged out and somehow manage to hammer your site, they will get added to the cache. This option detects JavaScript in those users' browsers, automatically removes them from the cache, and prevents them from being detected by DeDos for the rest of their session (so the admin never has to deal with false positives, since humans are automatically removed from the cache). When a human is automatically removed from the cache, they are redirected to their original page (very quickly), and the row in your logs will flash to let you know (so you can adjust your DeDos settings to further avoid humans if need be).
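As a rough illustration of the 3rd option, the sketch below models the behaviour in Python, assuming an in-memory set of locked IPs and a set of sessions already verified as human. The class and method names are hypothetical, and how the add-on actually detects JavaScript is not shown here.

```python
class LockoutCache:
    """Hypothetical in-memory stand-in for the DeDos lockout cache."""

    def __init__(self):
        self.locked_ips = set()
        self.exempt_sessions = set()

    def lock(self, ip, session_id):
        """Lock an IP out unless its session was already verified as human."""
        if session_id in self.exempt_sessions:
            return False
        self.locked_ips.add(ip)
        return True

    def javascript_verified(self, ip, session_id):
        """Called when a locked-out client proves that it runs JavaScript."""
        was_locked = ip in self.locked_ips
        self.locked_ips.discard(ip)            # remove from the cache
        self.exempt_sessions.add(session_id)   # skip detection for this session
        return was_locked                      # True: flag this row in the logs
```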

However, keep in mind that DeDos is designed to avoid humans. If a human is DOSing your site, you could turn the above 3 options off, but it would be smarter to block that user with htaccess. DeDos is useful against spam bots / scrapers because they change their IP address so frequently and DOS sites so often. Manually updating your htaccess file to stop them would require a lot of effort, time and constant monitoring (it's just not realistic). DeDos solves this problem for spam bots and scrapers, and does everything it can to avoid detecting humans. DOS from individual humans is better managed by yourself than by automation. DDOS from humans / botnets is better managed with load balancing / hardware.

If you want to be extra cautious, to avoid humans, you could change your settings to something like this:

Friendly settings: 11 pages in 10 seconds

Malicious settings: 16 pages in 13 seconds

Make sure that your malicious setting has a larger number of "pages" than your friendly setting (so a human should always see the friendly message first). However, more cautious settings may catch fewer bots (and not as early).

tenants

Well-known member

"Do the friendly settings always have to be lower than the malicious ones?"

For the number of requests (pages), yes, it would be an advantage to the users to view the friendly message before getting locked out.

"I did a test and I could open around 14-16 pages within 10 seconds, but so can bots, and they can probably do more within 10 seconds."

If you believe this to be true and want to adjust your settings accordingly, then your settings would be something like this:

Friendly settings: 14 pages in 10 seconds

Malicious settings: 17 pages in 10 seconds

I always try to catch the bots earlier, so I try to use the fewest seconds possible while still allowing users to see a friendly message. I would adjust your settings to something like this:

Friendly settings: 14 pages in 10 seconds

Malicious settings: 15 pages in 9 seconds

You could adjust it once again, catching them earlier by halving both settings:

Friendly settings: 7 pages in 5 seconds

Malicious settings: 7.5 pages in 4.5 seconds => round up => 8 pages in 5 seconds

Which brings us fairly close to the default settings:

Friendly settings: 8 pages in 7 seconds

Malicious settings: 9 pages in 6 seconds
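The halving step above is plain arithmetic, and a short Python sketch shows the rounding explicitly. The `halve` helper is a hypothetical name introduced for this example only.

```python
import math


def halve(pages, seconds):
    """Halve a (pages, seconds) rule, rounding both values up."""
    return math.ceil(pages / 2), math.ceil(seconds / 2)


friendly = halve(14, 10)   # 7 pages in 5 seconds
malicious = halve(15, 9)   # 7.5 pages in 4.5 seconds rounds up to 8 pages in 5 seconds

# The malicious rule should still need more pages than the friendly rule.
assert malicious[0] > friendly[0]
```

Rounding up rather than down keeps the malicious page count above the friendly one, so the friendly message is still seen first.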

XxUnkn0wnxX

Active member

Thanks for putting your time into this detailed explanation.

So, just to fully clear this up:

- Avoid Cache for Logged In Users = applies only to logged-in users; they never get a permanent ban and will always get the warning message if they hit the limit (does it not apply to logged-out members, as in registered members who are logged out or in invisible mode?)

- Avoid All DeDos for Logged In Users = applies to both logged-in and logged-out members (both online and offline members) and does the same as above but doesn't show a warning message; it simply ignores all members, whether online, offline or invisible

I think the first option, Avoid Cache for Logged In Users, which shows the friendly warning message and never adds to the permanent DeDos cache, should apply both to logged-in members and to members who recently logged in but are currently logged out, instead of just logged-in members.

So this first option is like a friendly block mode,

and the other option ignores all recently logged-in and currently logged-in members for both the friendly message and the cache.

I know much of this is the same, but the 1st one should also apply to recently logged-out members as well.

XxUnkn0wnxX

Active member

And I assume that Turn Off Portal DeDos Detection would work with:

http://xenforo.com/community/resources/*******-thread-live-update.3053/

http://xenforo.com/community/threads/*******-conversation-live-update.70461/

or any other automated AJAX script?

I know portal usually refers to the front page, and I have a shoutbox on the front page for that.

But since live thread and conversation updates do not refer to the front page, will this same feature affect those two add-ons as well? As in, will it avoid banning/blocking users when the thread/conversation updates x many times within x many seconds of the DeDos settings?

tenants

Well-known member

tenants updated DeDos - Anti DOS for spam bot/scrapers with a new update entry:

Dynamically update htaccess to block bots and scrapers

This still has 0 query overhead, and uses the cache for previous DOS detection.

For blocked bots there is now an option to synchronise the cache with the .htaccess file: their IP addresses are added to .htaccess when they are added to the cache, and removed when they are removed from the cache.

Using the htaccess file obviously uses fewer resources (0 queries instead of the 1 query needed to obtain the cache).

This is optional, since not everyone will want their htaccess file dynamically updated (a backup is created if it does...
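For anyone wondering what synchronising a block list into .htaccess might look like, here is a small Python sketch that works on the file contents as a string. It is only an illustration under assumptions: the marker comments are made up, and the `Deny from` lines use the older Apache 2.2 style access syntax, which may not be what the add-on writes. A real implementation would also write the backup mentioned above before saving.

```python
BEGIN = "# BEGIN hypothetical-dedos-block"
END = "# END hypothetical-dedos-block"


def sync_htaccess(text, blocked_ips):
    """Return `text` with the marked block rewritten to match `blocked_ips`."""
    lines = text.splitlines()
    if BEGIN in lines and END in lines:
        start, stop = lines.index(BEGIN), lines.index(END)
        lines = lines[:start] + lines[stop + 1:]   # drop the old block
    block = [BEGIN] + [f"Deny from {ip}" for ip in sorted(blocked_ips)] + [END]
    # An empty cache leaves no block behind at all.
    return "\n".join((block if blocked_ips else []) + lines) + "\n"
```

Rewriting the whole marked block on every change, instead of editing single lines, keeps the rest of the file untouched and makes removal from the cache as simple as addition.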

tenants

Well-known member

It shouldn't. I can't know for certain without using them, but for most areas DeDos now only checks for DOS in the places bots hit often

(the thread page, the forum home if ticked as an option, login, registration, posts, forums and the rss feed).

Other functional areas shouldn't be detected. Let me know if there is an issue and I will look into it (but I'm guessing it should be fine; DeDos tries to touch only areas that spam bots / scrapers hit).

tenants

Well-known member

tenants updated DeDos - Anti DOS for spam bot/scrapers with a new update entry:

Max Friendly Messages & Cache Cookieless Bots Earlier

It's annoying when you see that DeDos has logged some bots/scrapers, but only with a friendly warning message (sometimes multiple times, or as a cookieless user). For this reason I have added two new options:

Maximum Friendly Messages

If a user hits the maximum number of friendly warning messages (or more) within their session, the user will be added to the malicious DeDos cache (and thus locked out).
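The Maximum Friendly Messages rule can be pictured as a simple per-session counter. This Python sketch assumes the session is a plain dictionary; the limit of 3 and the key name `friendly_count` are hypothetical, since the real value is whatever the admin sets in the option.

```python
MAX_FRIENDLY_MESSAGES = 3  # hypothetical value; the real option is set by the admin


def after_friendly_warning(session):
    """Count a friendly warning; return True once the session should be cached."""
    session["friendly_count"] = session.get("friendly_count", 0) + 1
    return session["friendly_count"] >= MAX_FRIENDLY_MESSAGES
```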

Cache Cookieless Bots Earlier

Use the new DeDos...

tenants

Well-known member

I'll be concentrating on this type of bot next:

This harvester bot is very interesting

It's using the same IP address, but seems to be starting lots of sessions and changing useragent (so DeDos currently does not catch it).

We know it's not a cookieless bot (since we would have caught the IP with our cookieless detection), so it does have a session.

* note the frequency of useragent changes

You will notice, as per usual for malicious bots, it's always using user agents that look like browsers:

Code:

68.64.174.5 - - [05/Jul/2014:23:05:08 +0100] "url" "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/33.0.1750.117 Safari/537.36"

68.64.174.5 - - [05/Jul/2014:23:05:09 +0100] "url" "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/33.0.1750.117 Safari/537.36"

68.64.174.5 - - [05/Jul/2014:23:05:10 +0100] "url" "Mozilla/5.0 (Windows NT 5.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/33.0.1750.146 Safari/537.36" *

68.64.174.5 - - [05/Jul/2014:23:05:10 +0100] "url" "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/33.0.1750.117 Safari/537.36" *

68.64.174.5 - - [05/Jul/2014:23:05:11 +0100] "url" "Mozilla/5.0 (Windows NT 5.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/33.0.1750.146 Safari/537.36" *

68.64.174.5 - - [05/Jul/2014:23:05:12 +0100] "url" "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/33.0.1750.117 Safari/537.36" *

68.64.174.5 - - [05/Jul/2014:23:05:13 +0100] "url" "Mozilla/5.0 (Windows NT 5.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/33.0.1750.146 Safari/537.36" *

68.64.174.5 - - [05/Jul/2014:23:05:13 +0100] "url" "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/33.0.1750.117 Safari/537.36" *

68.64.174.5 - - [05/Jul/2014:23:05:14 +0100] "url" "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/33.0.1750.117 Safari/537.36"

68.64.174.5 - - [05/Jul/2014:23:05:14 +0100] "url" "Mozilla/5.0 (Windows NT 5.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/33.0.1750.146 Safari/537.36" *

68.64.174.5 - - [05/Jul/2014:23:05:14 +0100] "url" "Mozilla/5.0 (Windows NT 6.0) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/32.0.1700.107 Safari/537.36" *

68.64.174.5 - - [05/Jul/2014:23:05:15 +0100] "url" "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/33.0.1750.117 Safari/537.36" *

68.64.174.5 - - [05/Jul/2014:23:05:15 +0100] "url" "Mozilla/5.0 (Windows NT 5.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/33.0.1750.146 Safari/537.36" *

68.64.174.5 - - [05/Jul/2014:23:05:16 +0100] "url" "Mozilla/5.0 (Windows NT 5.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/33.0.1750.146 Safari/537.36"

68.64.174.5 - - [05/Jul/2014:23:05:16 +0100] "url" "Mozilla/5.0 (Windows NT 6.0) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/32.0.1700.107 Safari/537.36" *

68.64.174.5 - - [05/Jul/2014:23:05:16 +0100] "url" "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/33.0.1750.117 Safari/537.36" *

68.64.174.5 - - [05/Jul/2014:23:05:17 +0100] "url" "Mozilla/5.0 (Windows NT 5.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/33.0.1750.146 Safari/537.36" *

68.64.174.5 - - [05/Jul/2014:23:05:17 +0100] "url" "Mozilla/5.0 (Windows NT 6.0) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/32.0.1700.107 Safari/537.36" *

68.64.174.5 - - [05/Jul/2014:23:05:17 +0100] "url" "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/33.0.1750.117 Safari/537.36"

68.64.174.5 - - [05/Jul/2014:23:05:18 +0100] "url" "Mozilla/5.0 (Windows NT 5.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/33.0.1750.146 Safari/537.36" *

68.64.174.5 - - [05/Jul/2014:23:05:17 +0100] "url" "Mozilla/5.0 (Windows NT 6.1; rv:27.0) Gecko/20100101 Firefox/27.0" *

68.64.174.5 - - [05/Jul/2014:23:05:18 +0100] "url" "Mozilla/5.0 (Windows NT 6.0) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/32.0.1700.107 Safari/537.36" *

68.64.174.5 - - [05/Jul/2014:23:05:19 +0100] "url" "Mozilla/5.0 (Windows NT 5.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/33.0.1750.146 Safari/537.36"

68.64.174.5 - - [05/Jul/2014:23:05:19 +0100] "url" "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/32.0.1700.76 Safari/537.36" *

68.64.174.5 - - [05/Jul/2014:23:05:19 +0100] "url" "Mozilla/5.0 (Windows NT 6.1; rv:27.0) Gecko/20100101 Firefox/27.0" *

68.64.174.5 - - [05/Jul/2014:23:05:20 +0100] "url" "Opera/9.80 (Windows NT 6.1; WOW64) Presto/2.12.388 Version/12.15" *

68.64.174.5 - - [05/Jul/2014:23:05:20 +0100] "url" "Mozilla/5.0 (Windows NT 6.0) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/32.0.1700.107 Safari/537.36" *

68.64.174.5 - - [05/Jul/2014:23:05:21 +0100] "url" "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/32.0.1700.76 Safari/537.36" *

68.64.174.5 - - [05/Jul/2014:23:05:21 +0100] "url" "Mozilla/5.0 (Windows NT 6.0) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/32.0.1700.107 Safari/537.36" *

Within 12 seconds, it changed its user agent 23 times (or fewer if it is running multiple sessions at once) and used a total of 8 unique user agents (it then continued to do this for thousands of pages).

Even though it is hammering the site from one IP address, it is not hitting the site very frequently within any single session (or user agent).

It is either running with multiple sessions open (so avoiding per-session detection) or creating a new session each time the user agent changes.

What can we do to detect this? We can look for user agent changes from the same IP address within a short time window.

- One possible issue with detecting this is that if many users on the same network (for instance, a corporate work network) use your forum, it could also look like many user agent changes in a short time frame

- For this reason, I will add this as an option:

Detect Multiple User Agent Changes from the same IP

I'll be looking at catching this type of bot next
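To make the idea concrete, here is a minimal sketch of counting distinct user agents per IP inside a sliding time window. It is not DeDos code: the class name, the 12-second window and the threshold of 3 are all assumptions picked for illustration, and the threshold would need to be generous (or the check switched off) for forums with many visitors behind one corporate IP.

```python
from collections import defaultdict, deque


class UserAgentChurnDetector:
    """Flags an IP that presents too many distinct user agents in a short window.

    Hypothetical helper for illustration only; names and defaults are assumptions.
    """

    def __init__(self, window_seconds=12, max_distinct_agents=3):
        self.window_seconds = window_seconds
        self.max_distinct_agents = max_distinct_agents
        # ip -> deque of (timestamp, user_agent), oldest first
        self._hits = defaultdict(deque)

    def observe(self, ip, user_agent, timestamp):
        """Record one request; return True if the IP now exceeds the threshold."""
        hits = self._hits[ip]
        hits.append((timestamp, user_agent))
        # Drop requests that have fallen out of the time window.
        while hits and timestamp - hits[0][0] > self.window_seconds:
            hits.popleft()
        distinct = {agent for _, agent in hits}
        return len(distinct) > self.max_distinct_agents


if __name__ == "__main__":
    detector = UserAgentChurnDetector(window_seconds=12, max_distinct_agents=3)
    requests = [(0, "Chrome/33"), (1, "Chrome/32"), (2, "Firefox/27"), (3, "Opera/9.80")]
    for seconds, agent in requests:
        print(seconds, agent, detector.observe("68.64.174.5", agent, seconds))
```

Because the count is keyed on the IP rather than the session, a bot that opens a fresh session for every user agent is still seen as one source.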


Eoj Nawoh

Active member

I have lots of these errors.

Zend_Db_Statement_Mysqli_Exception: Mysqli statement execute error : Field 'proxy_header' doesn't have a default value - library/Zend/Db/Statement/Mysqli.php:214

Generated By: Unknown Account, Yesterday at 10:44 PM

Stack Trace

#0 /home/harpoong/public_html/forum/library/Zend/Db/Statement.php(297): Zend_Db_Statement_Mysqli->_execute(Array)

#1 /home/harpoong/public_html/forum/library/Zend/Db/Adapter/Abstract.php(479): Zend_Db_Statement->execute(Array)

#2 /home/harpoong/public_html/forum/library/Zend/Db/Adapter/Abstract.php(574): Zend_Db_Adapter_Abstract->query('INSERT INTO `sf...', Array)

#3 /home/harpoong/public_html/forum/library/XenForo/DataWriter.php(1624): Zend_Db_Adapter_Abstract->insert('sf_dedos_log', Array)

#4 /home/harpoong/public_html/forum/library/XenForo/DataWriter.php(1613): XenForo_DataWriter->_insert()

#5 /home/harpoong/public_html/forum/library/XenForo/DataWriter.php(1405): XenForo_DataWriter->_save()

#6 /home/harpoong/public_html/forum/library/Tac/DeDos/Model/Log.php(69): XenForo_DataWriter->save()

#7 /home/harpoong/public_html/forum/library/Tac/DeDos/Model/DeDosCache.php(273): Tac_DeDos_Model_Log->logEvent(Array, 1, Object(XenForo_Phrase), false)

#8 /home/harpoong/public_html/forum/library/Tac/DeDos/ControllerPublic/Page.php(33): Tac_DeDos_Model_DeDosCache->checkSessionForDos()

#9 /home/harpoong/public_html/forum/library/XenForo/Controller.php(309): Tac_DeDos_ControllerPublic_Page->_preDispatch('Index')

#10 /home/harpoong/public_html/forum/library/XenForo/FrontController.php(346): XenForo_Controller->preDispatch('Index', 'XenForo_Control...')

#11 /home/harpoong/public_html/forum/library/XenForo/FrontController.php(134): XenForo_FrontController->dispatch(Object(XenForo_RouteMatch))

#12 /home/harpoong/public_html/forum/index.php(13): XenForo_FrontController->run()

#13 {main}

XxUnkn0wnxX

Active member


Well, I just started having these issues. I posted a thread here: http://www.webhostingtalk.com/showthread.php?p=9198051

And yes, the user agents change, the IP ranges change, it comes in bursts, it attacks different URLs and it spoofs the user agent every now and then.

I have endless logs of these.

I am finding it hard to tell whether it is a real user or a bad one. They are making it nearly impossible to detect.

Something like this would be an easy way to block bad bots:

SetEnvIfNoCase ^User-Agent$ .*(WordPress) HTTP_SAFE_BADBOT

Deny from env=HTTP_SAFE_BADBOT

http://www.askapache.com/htaccess/blocking-bad-bots-and-scrapers-with-htaccess.html

But this new method uses legitimate user agents and so on, so it's something I cannot block without affecting normal users.

Especially when they use IPv6, it's a pain.

I would very much like to see a method of blocking this, preferably before it hits my site, at the Apache level or before that.

If you do find a way to block this, I hope there is also a way to pick it up outside your plugin; otherwise it won't be very helpful when they use this method against my WordPress blog, which I use as my front page.

And how does the htaccess blocking work when an IP is blacklisted? Which htaccess file does it use:

the one in / or the one in the forum's directory?

Both of my htaccess files already have a very large rule set, and I would prefer a second htaccess file for this kind of IP list blocking, if it is possible to have multiple htaccess files.

At the moment my system consists of:

this plugin for XenForo

ZBBlock

Cloudflare - no support for IPv6 blocking

fail2ban - log scanning for bad user agents and banning IPv4 (no support for IPv6)

Flarewall, which links CSF to Cloudflare so all IPs added to CSF end up in iptables and Cloudflare

mod time out and a few other things set up for Apache and my server

mod_security

I need a powerful rate limiter that runs alongside Apache or within it. Since I use Cloudflare, mod_cloudflare for Apache only helps at the Apache level, not for anything running before that, because the real visitor IPs don't show outside Apache. As for setting up the X-Forwarded-For header for the whole server, I cannot think of a way.

I can't think of anything besides fail2ban or some other script/firewall that could run alongside Apache to detect these bursts of attacks with changing user agents, IP ranges and URLs.

Something that would scan both the logs and all incoming traffic, looking at user agents and IPs and how many times they hit a certain link or links within a time frame, to detect that and block that IP. I would prefer this to talk to the CSF firewall or use the Cloudflare API to add/deny IPs.
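As a rough sketch of the log-scanning half of that idea, the snippet below counts hits per IP in a sliding window and returns the IPs that burst past a limit. Everything here is an assumption for illustration: the function names, the 10-second window and the limit of 20 hits are made up, and the regex only matches the shortened log format pasted in this thread (IP, timestamp, "url", "user agent"), not a full Apache combined log with status, size and referer fields. Passing the flagged IPs on to CSF or Cloudflare is left out.

```python
import re
from collections import defaultdict, deque
from datetime import datetime

# Matches the shortened log lines quoted in this thread (an assumption, not
# the full Apache combined log format).
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<ts>[^\]]+)\] "(?P<request>[^"]*)" "(?P<agent>[^"]*)"'
)


def parse_line(line):
    """Return (ip, datetime, request, user_agent), or None if the line does not match."""
    match = LOG_LINE.match(line)
    if match is None:
        return None
    when = datetime.strptime(match.group("ts"), "%d/%b/%Y:%H:%M:%S %z")
    return match.group("ip"), when, match.group("request"), match.group("agent")


def find_bursting_ips(lines, window_seconds=10, max_hits=20):
    """Return the set of IPs with more than max_hits requests inside any window.

    Assumes the lines are in roughly chronological order, as in an access log.
    """
    recent = defaultdict(deque)  # ip -> timestamps still inside the window
    flagged = set()
    for line in lines:
        parsed = parse_line(line)
        if parsed is None:
            continue  # skip lines in an unexpected format
        ip, when, _request, _agent = parsed
        hits = recent[ip]
        hits.append(when)
        # Discard hits older than the window, measured from the current line.
        while hits and (when - hits[0]).total_seconds() > window_seconds:
            hits.popleft()
        if len(hits) > max_hits:
            flagged.add(ip)
    return flagged
```

Since the IP is matched as any non-space token, IPv6 addresses are counted the same way as IPv4 ones. The user agent and URL are parsed but deliberately ignored for the count, because in these attacks both keep changing while the IP stays the same within a burst.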

PS: you should consider adding Cloudflare API support. Look up Flarewall and its scripting to get an idea of it. It should be simple to implement.

So when a banned IP is cached, it also alerts Cloudflare to that IP and bans it there as well.

People say to try this, but it seems very complex: http://opensource.adnovum.ch/mod_qos/


XxUnkn0wnxX

Active member

Also, one other note on the log you quoted:


Code:

68.64.174.5 - - [05/Jul/2014:23:05:08 +0100] "url" "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/33.0.1750.117 Safari/537.36"

68.64.174.5 - - [05/Jul/2014:23:05:09 +0100] "url" "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/33.0.1750.117 Safari/537.36"

68.64.174.5 - - [05/Jul/2014:23:05:10 +0100] "url" "Mozilla/5.0 (Windows NT 5.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/33.0.1750.146 Safari/537.36" *

68.64.174.5 - - [05/Jul/2014:23:05:10 +0100] "url" "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/33.0.1750.117 Safari/537.36" *

68.64.174.5 - - [05/Jul/2014:23:05:11 +0100] "url" "Mozilla/5.0 (Windows NT 5.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/33.0.1750.146 Safari/537.36" *

68.64.174.5 - - [05/Jul/2014:23:05:12 +0100] "url" "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/33.0.1750.117 Safari/537.36" *

The timestamp ([05/Jul/2014:23:05:10 +0100]) keeps changing for you. For me it's more big bursts, half a page to a full page of the same IP, where the timestamp does not change, so I would get a big list something like this:

Code:

68.64.174.5 - - [05/Jul/2014:23:05:08 +0100] "url" "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/33.0.1750.117 Safari/537.36"

68.64.174.5 - - [05/Jul/2014:23:05:08 +0100] "url" "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/33.0.1750.117 Safari/537.36"

68.64.174.5 - - [05/Jul/2014:23:05:08 +0100] "url" "Mozilla/5.0 (Windows NT 5.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/33.0.1750.146 Safari/537.36" *

68.64.174.5 - - [05/Jul/2014:23:05:08 +0100] "url" "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/33.0.1750.117 Safari/537.36" *

68.64.174.5 - - [05/Jul/2014:23:05:08 +0100] "url" "Mozilla/5.0 (Windows NT 5.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/33.0.1750.146 Safari/537.36" *

68.64.174.5 - - [05/Jul/2014:23:05:08 +0100] "url" "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/33.0.1750.117 Safari/537.36" *

But the user agents and the URL being attacked would still change.

Then, 1-2 minutes later, the timing is sometimes random, with hits every few seconds.

But they tend to use bursts with the same timestamp for a while before changing it up a bit later on.

I would strongly recommend you take a look at https://www.cloudflare.com/docs/client-api.html

and

http://flarewall.net

"

in the flarewall.sh within the cron jobs folder

edit the:

curl -A "Flarewall Script/1.0" -d "a=wl&tkn=$TOKEN&email=$EMAILkey="$ip https://www.cloudflare.com/api_json.html

to

curl -A "Flarewall Script/1.0" -d "a=wl&tkn=$TOKEN&email=$EMAIL&key="$ip https://www.cloudflare.com/api_json.html

The author made a syntax error (the & before key= is missing).

That should fix the allow to Cloudflare when you allow an IP in CSF.

"

That is, if you plan on playing with Flarewall.

Having any banned IP automatically added to the Cloudflare block list would improve server load greatly; blocking it before it slams the server would increase performance and efficiency.


Ridemonkey

Well-known member

DRE

Well-known member

Awesome!

StopBotResources is no longer needed when using FBHP and DeDos together (it has been removed).

StopBotResources relied on an API call for every single visitor. That means the more forums that used the plugin, the more the API became saturated with requests (which is why I only allowed small forums to use that resource and asked admins to remove the plugin once they no longer met certain conditions).

Using FoolBotHoneyPot and DeDos together, we have a better approach:

FoolBotHoneyPot detects spam bots registering (currently 100% of spam bots)

DeDos detects spam bots hitting your site heavily

Once detected, they both use the local cache, so we have a very low-query (and low-bandwidth) approach to locking these spam bots / scrapers out

- There is no need for an API, so yes, used together they can help reduce the resources consumed by bots on servers with limited resources or a high volume of spam bots, and they can be used on any size of forum

MattW

Well-known member

I've started to get a lot of those proxy_header errors as well on my site.

tenants

Well-known member

Interesting, I turned on the JavaScript detection to avoid false positives and the first one that got deactivated is clearly some kind of bot:

I suspect that they botted, then went back as a real user to see why they couldn't bot. What I try to do, as a priority, is avoid false negatives
