FredC

Well-known member

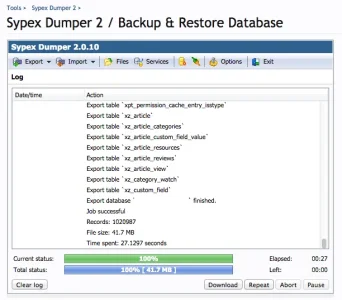

My server copies the database every night at around 3 a.m. for a backup and dumps it somewhere, though I don't know where. The problem (besides not knowing where the backups are stored) is that this puts quite a strain on my server and causes half a dozen server errors every single night, sometimes more depending on how active the forum is at that hour.

So I'd be interested in knowing my options for backing up my DB in a less resource-intensive way.

I know it would help if you knew how I am currently backing up my data, but I don't really know that either.

I am on a dedicated Apache server: CentOS 6.5 x86_64 standard, with WHM/cPanel.

These backups have rendered my forum 90% useless for an hour each night and have destroyed my international traffic. I really need a better solution, preferably free!

HELP!!
