lazer

Hey

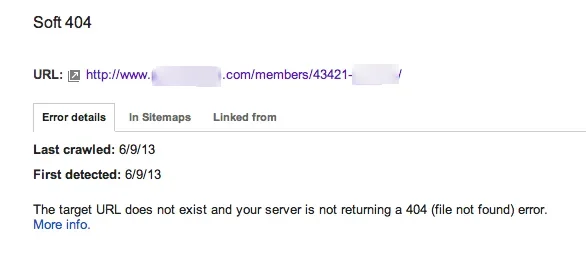

So, I was looking at GWT today and noticed a steady increase in crawl errors. The weird thing is that the URLs it is "failing" on haven't been physically present on my server for over a year (we converted from vB to xF in April 2012).

Also, after installing the Resources add-on, we closed off the forum that had been doing that job until then. Google is still trying to index that forum and is reporting thousands of "Access Denied" errors, because the forum is no longer open to the public.

Can you spot when we installed RM and closed the related forum?

Why is Google still crawling these URLs (and reporting them as soft 404 or "Access Denied" errors), and how do I tell it to stop?

Cheers!