FoP


A month ago we were overrun by the Bytespider bot, so I added it to our robots.txt file. That worked for a month, but a few days ago they came back in full force. We are on cloud hosting and have no access to the .htaccess file, so I put the entire IP range the bot uses into discouragement mode, redirecting 100% of its requests to a search engine. That worked for a day and a half. Now they're back, and I don't understand how that's possible.
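The discouragement tool's internals aren't visible from here, so the following is only a rough sketch of the general idea behind blocking by IP range plus User-Agent. It assumes a hypothetical application-level filter, and the networks below are documentation-only ranges (RFC 5737 / RFC 3849), NOT Bytespider's real addresses. The User-Agent string in the example is also illustrative.

```python
import ipaddress

# Hypothetical blocked ranges: documentation-only networks, NOT Bytespider's real IPs.
BLOCKED_NETWORKS = [
    ipaddress.ip_network("192.0.2.0/24"),
    ipaddress.ip_network("2001:db8::/32"),
]

# Substrings matched case-insensitively against the User-Agent header.
BLOCKED_AGENT_TOKENS = ["bytespider"]


def ip_is_blocked(ip: str) -> bool:
    """Return True if the address falls inside any blocked network."""
    addr = ipaddress.ip_address(ip)
    # `in` returns False (no error) when comparing IPv4 with an IPv6 network.
    return any(addr in net for net in BLOCKED_NETWORKS)


def agent_is_blocked(user_agent: str) -> bool:
    """Return True if the User-Agent contains any blocked token."""
    ua = user_agent.lower()
    return any(token in ua for token in BLOCKED_AGENT_TOKENS)


def should_block(ip: str, user_agent: str) -> bool:
    """Block if either the IP range rule or the User-Agent rule matches."""
    return ip_is_blocked(ip) or agent_is_blocked(user_agent)


if __name__ == "__main__":
    # Inside the hypothetical range: caught by the IP rule.
    print(should_block("192.0.2.15", "Mozilla/5.0"))
    # Outside the range, bot-like UA (illustrative string): caught only by the UA rule.
    print(should_block("198.51.100.7", "Mozilla/5.0 (compatible; Bytespider)"))
    # Outside the range with an ordinary UA: not caught at all.
    print(should_block("198.51.100.7", "Mozilla/5.0"))
```

The last case is the relevant one: a range rule only catches addresses inside the listed networks, so requests arriving from addresses outside them pass through unless another rule, such as the User-Agent check, matches them.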

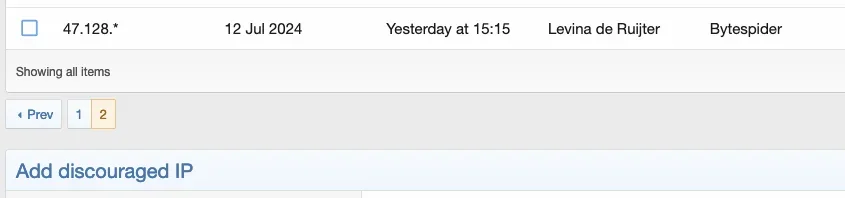

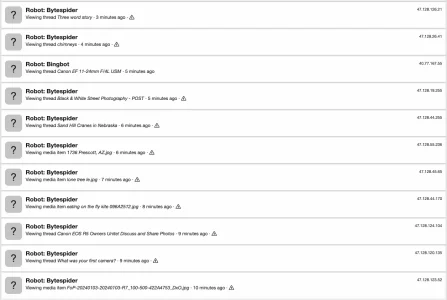

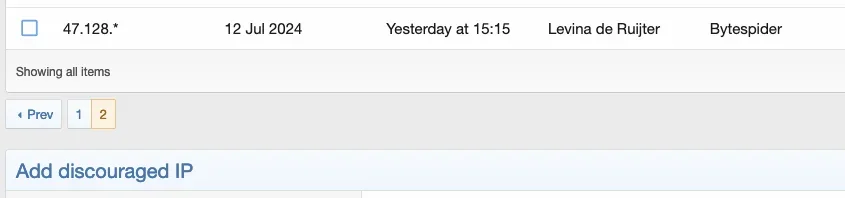

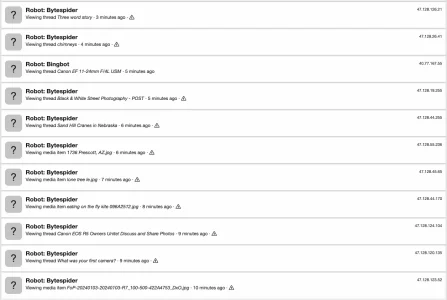

According to the log, discouragement mode for that IP range was last triggered yesterday at 15:15. Yet the Robots tab in Current Visitors is filling up with them as I type this. So why isn't discouragement mode working? It did at first, but it's as if they found a workaround.

The good news is that every one of these bots shows "viewing an error" next to its name, so I'm assuming they aren't seeing any content at all. Still, I really want them gone altogether.
