Yeah. My forum (https://endless-sphere.com) is within 1% of the post count of Xenforo so it's a good comparison.

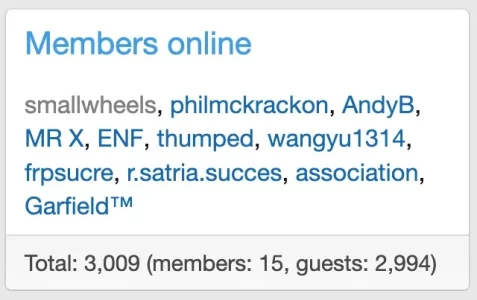

Here's whatever Xenforo is using versus my set of fail2ban rules:

My number tends to be in the 2000s lately, but it was 20000+ a month ago before the last round of tuning.

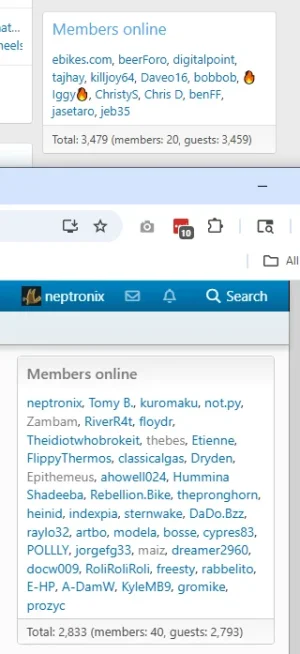

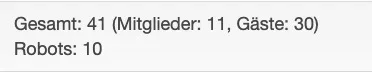

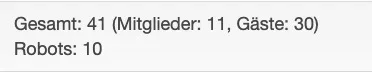

My forum is a tiny baby in comparison; the most guests I ever had was 1800, back in April this year, because of a scraping attack coming from Hetzner. In March this year I had started a fairly intensive journey of investigations, experiments and countermeasures against scrapers, costing endless hours. As I am on shared hosting and do not use Cloudflare (and do not want to), my points of leverage are pretty limited. But they have been successful, it seems. Typically my statistics now look like this:

I hardly ever have more than 80 guests and/or at best 15 robots at peak, and the ratio of members to guests is rarely beyond 1:5. Before that I regularly had a couple of hundred guests along with 40-100 robots.

It was a lot of work over the first weeks and months and still needs a limited level of continuous maintenance and adjustment, but overall it is pretty stable now. I've described the earlier parts of my journey here:

I've recently spent some time getting rid of unwanted traffic on my forums and thought the learnings might have value for someone else, so I am writing them up. This is not intended as a tutorial or even advice - it is just a couple of findings that you may or may not find useful. Also, many roads lead to Rome, depending on your situation, needs and abilities. So take it with a grain of salt.

Important boundary conditions for my actions: My forum is pretty small (currently ~2,000 registered users), runs on shared hosting (which limits my possibilities in terms of configuration), I...

A lot has happened since then and an update is overdue. However, as a rough conclusion: while my setup is far from perfect and took a lot of time, it is not all that difficult to bring bots down massively. There clearly is a limit to my approach the more cleverly the bots behave, so a certain share of them surely goes undetected already.

However: I was lucky to have started my attempts early enough. Reports here in the forum about a massive rise in guests/scrapers/AI bots started in about early July (and have not stopped since), and I do not see anything like that on my forum. It may be just luck, but it may also be partly due to the measures I implemented.

Someone really should sell a bot protection package. Or at least describe a working Cloudflare setup.

Something that can give non-expert server administrators a head start.

Absolutely. Too many forum administrators still focus on registrations and so do most of the add-ons. In the meantime, guests aka scraping bots have become the bigger threat in my opinion. It should be relatively easy to create an add-on that can be configured to block access to the forum (and not just to the registration) on a country, ASN or IP level (and possibly other criteria as well), along with the option of proper logging and filtering of the logs. This would help a lot already without needing any intelligence in the add-on - it would dramatically ease what I did on the shell with traditional unix tools.
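To make the "plain basics" a bit more concrete, here is a minimal Python sketch of the two building blocks mentioned above: checking a visitor IP against a list of blocked networks, and counting requests per network to spot where traffic comes from. This is not what I run and not an XF add-on; the names `BLOCKED_NETWORKS`, `is_blocked` and `count_by_network` are made up for illustration, and the networks are documentation-only example ranges, not real scraper ranges.

```python
import ipaddress
from collections import Counter

# Hypothetical blocklist. These are reserved documentation ranges used as
# placeholders; a real list would come from your own log analysis.
BLOCKED_NETWORKS = [
    ipaddress.ip_network(n) for n in ("192.0.2.0/24", "2001:db8::/32")
]


def is_blocked(ip_text):
    """Return True if the address falls inside any blocked network."""
    try:
        ip = ipaddress.ip_address(ip_text)
    except ValueError:
        return False  # not a parseable IP address, so nothing to match
    return any(
        ip.version == net.version and ip in net for net in BLOCKED_NETWORKS
    )


def count_by_network(ip_texts, prefix=24):
    """Count IPv4 requests per network of the given prefix length."""
    counts = Counter()
    for text in ip_texts:
        try:
            ip = ipaddress.ip_address(text)
        except ValueError:
            continue  # skip garbage entries
        if ip.version != 4:
            continue  # this sketch only groups IPv4 addresses
        # strict=False lets us derive the enclosing network from a host IP
        net = ipaddress.ip_network(f"{ip}/{prefix}", strict=False)
        counts[str(net)] += 1
    return counts
```

Feeding `count_by_network` the client IPs from an access log and sorting by count gives roughly the same picture as the usual sort/uniq pipeline on the shell; networks that stand out can then be looked up and, if appropriate, added to the blocklist.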

Obviously, there are many ways to build a fancier and more intelligent solution, but XF lacks even the plain basics, so having an easy-to-access toolkit for them would already improve the situation dramatically.