Anatoliy

Well-known member

They appeared after I upgraded to 2.3.2 about a month ago.

All of them are of these types:

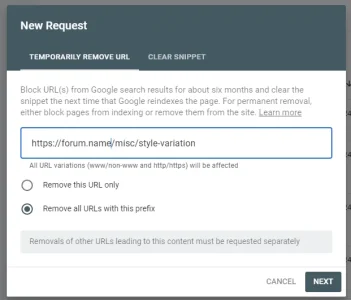

domain/misc/style-variation?reset=1&t=1724763460%2Cad52e9ce6de9c6b628dd7feaac79357a

or

domain/misc/style-variation?variation=default&t=1724762134%2C9ac39b17ff1a324c9d0afeefa19a8472

or

domain/misc/style-variation?variation=alternate&t=1724762133%2Cea0e99e67f692418bd180af747492fed

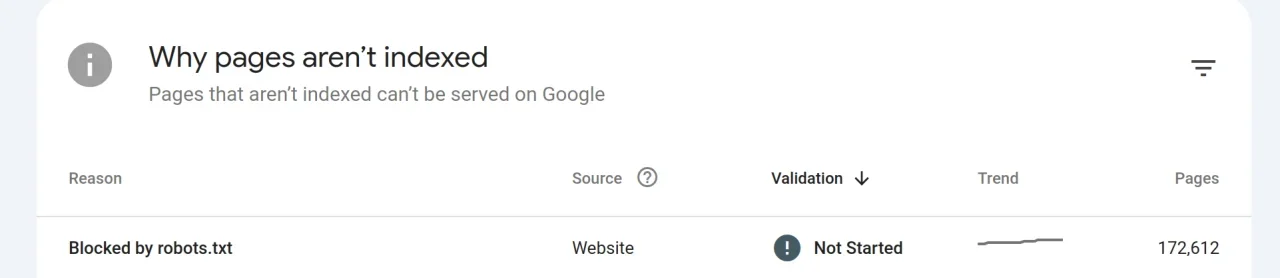

Where are those links located? The pages are not indexed, so Google doesn't show any referring pages.

Why are those links there if they lead to closed doors?

How can I fix that?
