digitalpoint

Well-known member

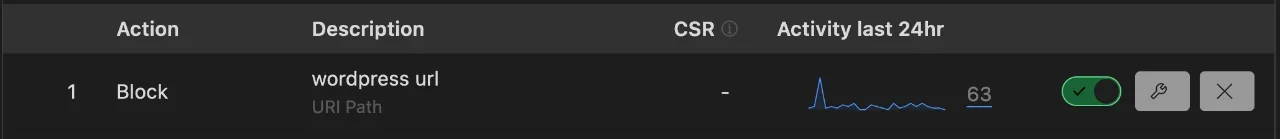

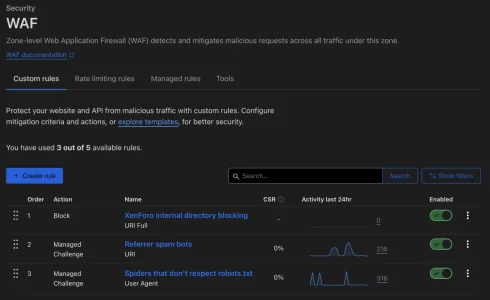

There aren't any universal WAF rules you need for XenForo. But this is what it looks like for one of my XenForo sites:

The first one was set up by my Cloudflare add-on. The others are rules I added manually to deal with referrer bot spam and certain spiders, both of which are going to be unique to my site.