What compute device(s) are you using? I'm wondering what size system(s) would be required to support my services without sacrificing user experience or performance. Linode compute and block storage look more expensive than my current system for the same performance and size.

I use a Shared CPU plan, which is their most cost-effective option. I've not found CPU steal to be much of a problem on shared CPU, especially given I've got 4 CPUs available.

I'm on an 8GB Linode, which costs US$40 pm + $10 pm for backups. That gives me enough RAM to allocate more to innodb_buffer_pool_size than I have InnoDB data (4GB).

I have 160GB of storage available on that plan - of which I allocate only half so that I can restore a disk from backup if required without removing an existing disk.

So of the 80GB of storage allocated, I'm using around 50GB for the site and DB, excluding attachments.

I then have a 240GB block storage volume attached, which is where my attachments folder is mounted - currently using 193GB. This costs $24 pm.

So total space used on disk is 243GB.

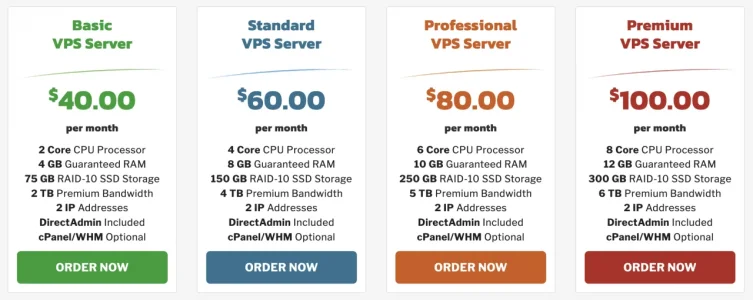

Cost is:

- 8GB shared CPU Linode: $40pm

- backups for 8GB Linode: $10pm

- 240GB block storage: $24pm

- Total: US$74pm
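As a quick sanity check on the numbers above, here is a minimal Python sketch that adds them up. The figures are the ones quoted in this post; the function names are just illustrative.

```python
# Monthly costs quoted in the post, in US$.
monthly_costs = {
    "8GB shared CPU Linode": 40,
    "Backups for 8GB Linode": 10,
    "240GB block storage": 24,
}


def total_monthly_cost(costs):
    """Sum the monthly line items."""
    return sum(costs.values())


def disk_used_gb(local_used_gb, block_used_gb):
    """Total space in use across the local disk and the block storage volume."""
    return local_used_gb + block_used_gb


print("Total per month (US$):", total_monthly_cost(monthly_costs))
print("Total disk used (GB):", disk_used_gb(50, 193))
```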

I don't run dev on the same server - I do my dev work on a locally hosted Hyper-V server built from the same build script I use to build my production servers. If I need a live test server that other people can access, I fire up a cheap low-powered VPS so that testing doesn't consume resources on my prod server.

For me, the most important performance enhancement you can make (other than running on SSD instead of HDD drives) is to ensure you can cache all of your InnoDB data in your buffer pool - so try to make your innodb_buffer_pool_size larger than your InnoDB data size. Make sure you leave sufficient RAM for other caching and resources the server may require though!
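To make that rule of thumb concrete, here's a rough Python sketch of the check. The 5GB buffer pool and the 2GB reserve for everything else are assumptions for illustration only, not figures from my server, and `buffer_pool_check` is a made-up helper name. One common way to get the InnoDB data size is to sum `data_length + index_length` from `information_schema.tables` where `engine = 'InnoDB'`.

```python
def buffer_pool_check(innodb_data_gb, buffer_pool_gb, total_ram_gb, reserve_gb=2.0):
    """Check a proposed innodb_buffer_pool_size against the rule of thumb.

    reserve_gb is an assumed amount of RAM to leave for the OS, PHP, the web
    server and other caches. It is a placeholder, not a recommendation.
    """
    return {
        # The pool should be at least as large as the InnoDB data and indexes.
        "covers_data": buffer_pool_gb >= innodb_data_gb,
        # The pool plus the reserve should still fit in physical RAM.
        "leaves_headroom": buffer_pool_gb + reserve_gb <= total_ram_gb,
    }


# 4GB of InnoDB data on an 8GB machine, with a hypothetical 5GB buffer pool.
print(buffer_pool_check(innodb_data_gb=4, buffer_pool_gb=5, total_ram_gb=8))
```

If `covers_data` comes back false, the whole dataset can't sit in memory. If `leaves_headroom` comes back false, the pool is probably too big for the machine.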

RAM is the most critical component for production servers IMO.

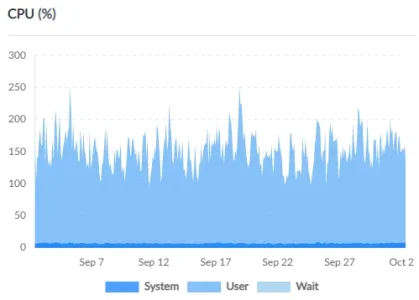

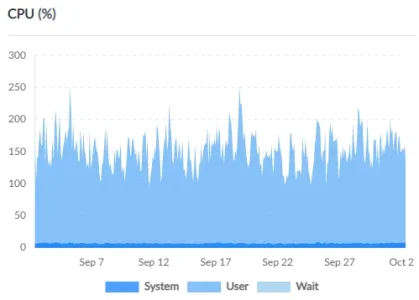

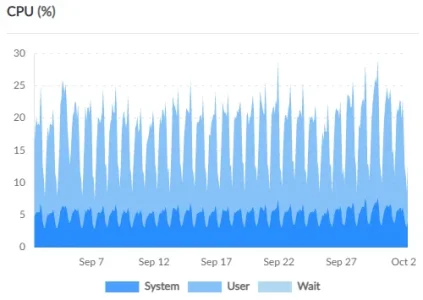

This server has some pretty hinky customisations to my XenForo which use a lot of CPU resources. I'm still running a very heavily modified version of XF 1.5, so it will be interesting to see what kind of optimisations I can get when I rebuild those customisations for XF 2.x.

So I'm using a lot more CPU than I should be on this server:

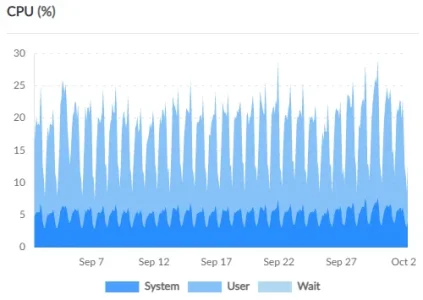

... when you compare it to my other, larger site running an identical setup to the one listed above (minus the additional block storage - it doesn't have a large photo gallery), you'll see how high the CPU usage on this site is. The other site barely gets above idle.

If it weren't for the memory requirements for the DB, I would be more than happy to run this other site on a 2 shared CPU Linode or even a 1 shared CPU Linode - it simply doesn't use much CPU resource.

You certainly don't need a dedicated CPU VPS in my opinion - shared is good enough unless you have a dodgy VPS provider where you get a lot of CPU steal.
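If you want to check how much CPU steal your own provider is giving you, here's a minimal Python sketch that works it out from two samples of the aggregate `cpu` line in `/proc/stat` on Linux. The function names are made up for illustration, and it assumes a kernel that reports the steal column (the 8th value on that line).

```python
import time


def parse_cpu_line(line):
    """Parse the aggregate 'cpu' line from /proc/stat into a list of ints."""
    parts = line.split()
    if not parts or parts[0] != "cpu" or len(parts) < 9:
        raise ValueError("expected an aggregate 'cpu' line with a steal column")
    return [int(value) for value in parts[1:]]


def steal_percent(before, after):
    """Percentage of CPU time stolen by the hypervisor between two samples."""
    deltas = [b - a for a, b in zip(parse_cpu_line(before), parse_cpu_line(after))]
    # Columns: user nice system idle iowait irq softirq steal [guest guest_nice].
    # Guest time is already counted in user/nice, so only sum the first 8.
    total = sum(deltas[:8])
    if total <= 0:
        return 0.0
    return 100.0 * deltas[7] / total


def sample_steal(interval=5.0, path="/proc/stat"):
    """Read /proc/stat twice, interval seconds apart (Linux only)."""
    with open(path) as f:
        before = f.readline()
    time.sleep(interval)
    with open(path) as f:
        after = f.readline()
    return steal_percent(before, after)
```

Calling `sample_steal()` on the VPS a few times during busy periods should give a rough idea of whether steal is a real problem. Tools like `top` report the same figure as `st`.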

When I need to upgrade, I'm more inclined to go for a high memory Linode: 24GB RAM, 2 (dedicated) CPUs and 20GB of storage, plus a bunch of additional block storage. That's $60 pm for the VPS and probably another $30 pm for block storage, and I get 3x as much RAM to play with.
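To put the RAM value in perspective, here's a small Python sketch comparing cost per GB of RAM for the two plans, using only the VPS prices quoted in this post (backups and block storage left out). The helper name is just illustrative.

```python
def cost_per_gb_ram(monthly_cost_usd, ram_gb):
    """Monthly VPS cost divided by the RAM on the plan."""
    return monthly_cost_usd / ram_gb


# Plan figures as quoted in the post: (monthly US$, RAM in GB).
plans = {
    "8GB shared CPU Linode": (40, 8),
    "24GB high memory Linode": (60, 24),
}

for name, (cost, ram) in plans.items():
    print(f"{name}: ${cost_per_gb_ram(cost, ram):.2f} per GB of RAM per month")
```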

Or I set up a dedicated DB server on the high memory Linode and then run a bunch of smaller compute-only Linodes for my various sites, which all connect to that server. XenForo doesn't require much CPU power (my dodgy XF 1.5 server above notwithstanding!), so upgrading to a more powerful VPS just to get more RAM or storage doesn't really make much sense from a value perspective.