OK, looking at the Child Risk Assessment stuff again: I think it's going to be really hard to comply. I've extracted a few things from the CRA Guidance.

You have to assess the risk factors in Appendix A1, near the bottom of the linked page.

Risk factors include direct messaging; allowing users to post photos or videos; re-posting or sharing content; searching for user-generated content; tagging; and features that increase engagement (e.g. "likes" and comments, alerts and notifications).

You also need to assess the risk factors for each age group: 0-5, 6-9, 10-12, 13-15 and 16-17.

You also have to assess how optimising the site for revenue can increase risks to children (e.g. ads, recommender systems). From the guidance:

"Your commercial profile may increase the risk that children encounter different kinds of CHC.

For example, we would expect you to consider:

- How low capacity or early-stage businesses may pose heightened risk if they have more limited technical skills and financial resources to introduce effective risk management. For instance, they may have insufficient resources to adopt technically advanced automated content moderation processes, or to hire a large number of paid-for moderators."

(So small sites are too risky then, are they?!)

You then have to make a note of all your risk factors and use these as part of your risk assessment in Step 2.

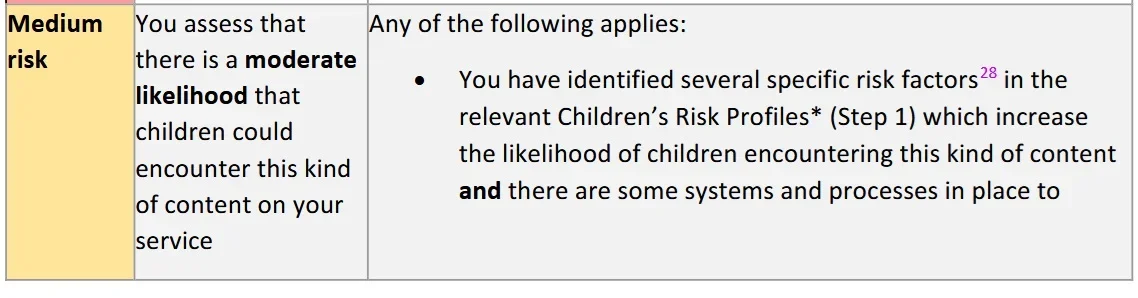

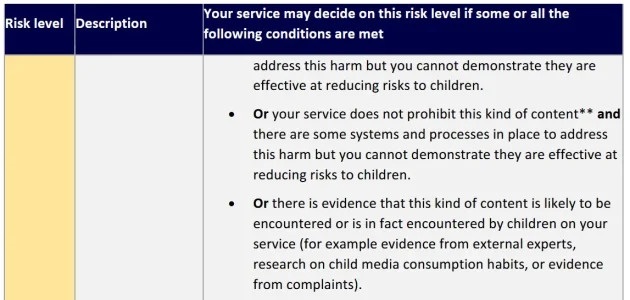

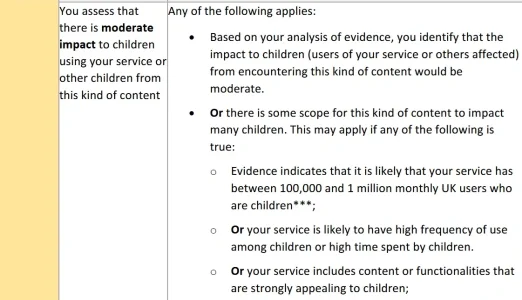

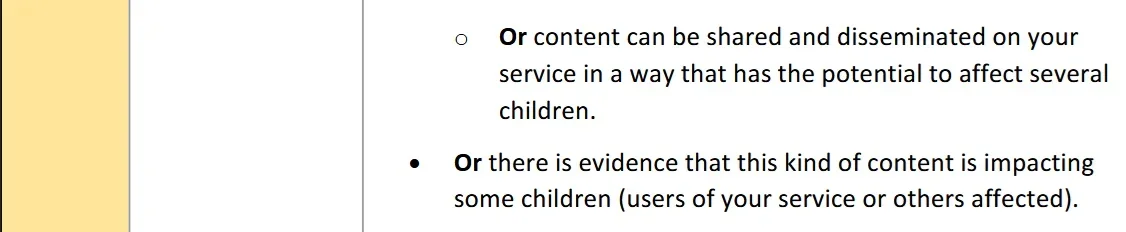

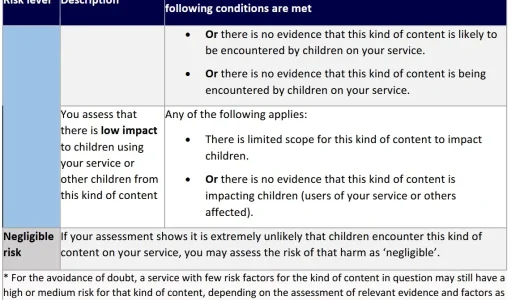

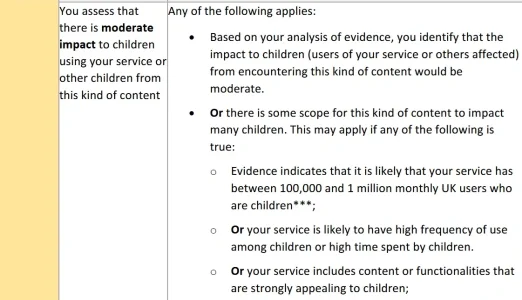

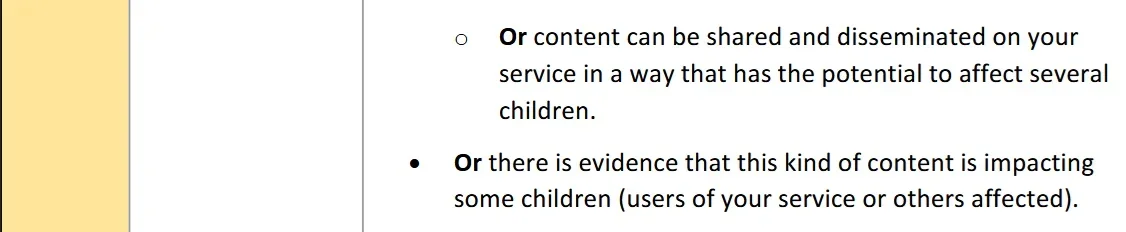

Under the risk levels table, you then have to assess your risk factors, as well as the impact of each specific harm on a child, and whether you rate that impact as High, Moderate or Low. So the impact of a child seeing any of the harms forms part of determining whether you are low, medium or high risk. For example, can you say the harm would be low impact?

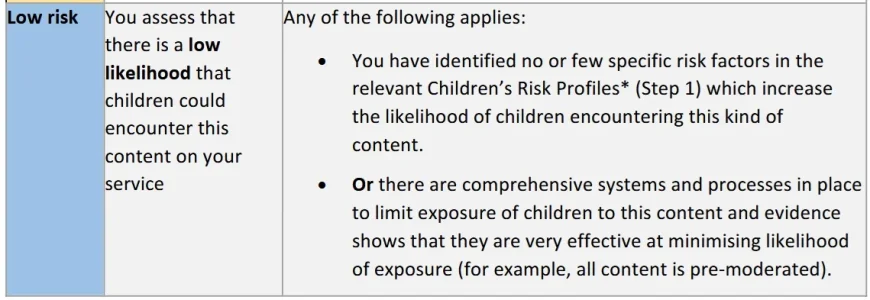

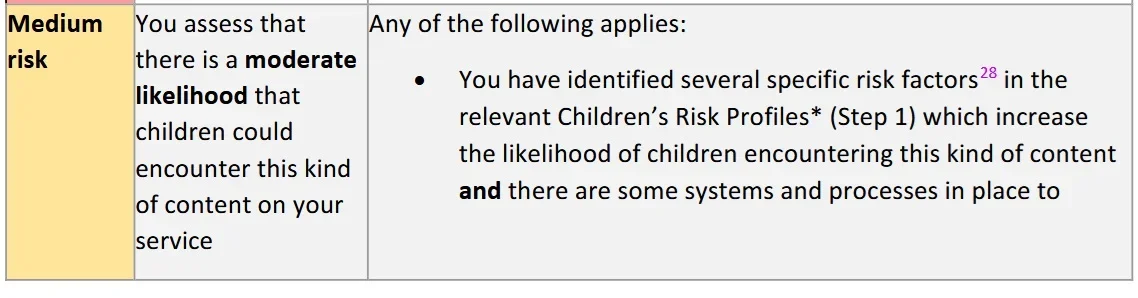

Below are the tables for Medium and Low risk. Note that you can't just "think" you're low risk: you have to assess your risk level by working through the "risk factors" mentioned above.

Also note the little point 28 under Medium risk:

"Some risk factors, while distinct, may have a similar effect on your service (such as a situation where your service allows users to post videos, and is also a video-sharing service). In these situations, you may choose to consider the risk factors together. Separately, some distinct risk factors may combine or intersect and increase risk, as noted in the Risk Profiles (such as livestreaming intersecting with the group messaging and commenting functionalities of a service)."

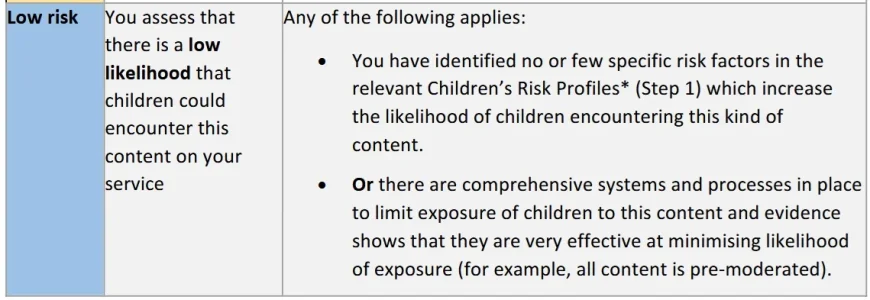

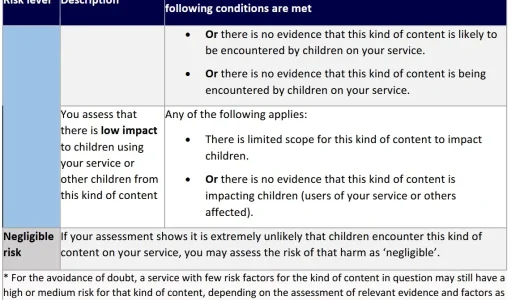

But just looking at Low risk for now...

To be assessed as low risk, you have to establish that none, or only a few, of the "risk factors" in Appendix 1 apply (e.g. personal profiles, direct messaging, posting photos and videos, advertising, user encouragement...), or that you have comprehensive systems in place to prevent these risks (e.g. pre-moderation).

Note: I've established that even AI scanning of links doesn't count as pre-moderation if it happens after the post goes live; that is post-moderation. Pre-moderation means either (a) all links go to manual moderation, or (b) links are scanned BEFORE they are actually posted.
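To make the timing distinction concrete, here is a minimal Python sketch of the two pre-moderation options, with post-moderation alongside for contrast. It assumes a hypothetical `scan_link` check and an in-memory `Forum` with a review queue; neither comes from the guidance, and a real forum would plug in its own scanner and storage. The only point is ordering: under pre-moderation nothing with a link is visible until a check has run.

```python
import re
from dataclasses import dataclass, field

# Illustrative pattern for spotting links in a post (assumption, not exhaustive).
LINK_RE = re.compile(r"https?://\S+")


@dataclass
class Forum:
    published: list = field(default_factory=list)     # posts visible to users
    review_queue: list = field(default_factory=list)  # posts held for a human moderator


def scan_link(url: str) -> bool:
    """Hypothetical automated check: True if the link looks safe.
    A real service would call its own scanner here."""
    blocked_domains = ("bad.example",)
    return not any(domain in url for domain in blocked_domains)


def submit_manual(forum: Forum, post: str) -> str:
    """Pre-moderation option (a): any post containing a link is held for manual review."""
    if LINK_RE.search(post):
        forum.review_queue.append(post)
        return "held"
    forum.published.append(post)
    return "published"


def submit_scanned(forum: Forum, post: str) -> str:
    """Pre-moderation option (b): links are scanned BEFORE the post is published.
    Posts that fail the scan are held (a hypothetical design choice; they could be rejected)."""
    links = LINK_RE.findall(post)
    if all(scan_link(url) for url in links):
        forum.published.append(post)
        return "published"
    forum.review_queue.append(post)
    return "held"


def submit_post_moderated(forum: Forum, post: str) -> str:
    """For contrast, post-moderation: the post goes live first and is scanned afterwards,
    so users can see it before any check has run."""
    forum.published.append(post)
    for url in LINK_RE.findall(post):
        if not scan_link(url):
            forum.published.remove(post)
            forum.review_queue.append(post)
            return "removed"
    return "published"
```

For example, `submit_manual(forum, "see https://example.com")` holds the post, while `submit_scanned` publishes it because the (hypothetical) scan passes before it goes live. Option (a) puts every linked post in front of a human, which is the workload concern raised below.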

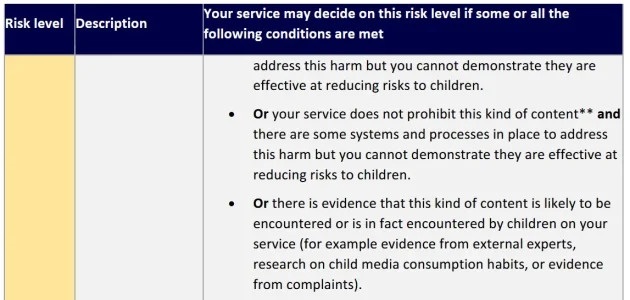

Which, to me, suggests most of us would be MEDIUM risk, as I wouldn't want to manually pre-moderate all links. Half the posts would end up being manually moderated!

What it doesn't tell us yet is what additional measures you would need to take if your CRA puts you at medium risk.