I just saw the 500-page risk register published by OFCOM. That means we have to identify control measures for all those.

UK Online Safety Regulations and impact on Forums

- Thread starter: lazy llama

- Tags: regulation

The risk of any of these things being discussed on my forum is 0. But can you have keywords, so:

We need better than that. Look at the crime list. It's saying we have to control everything.

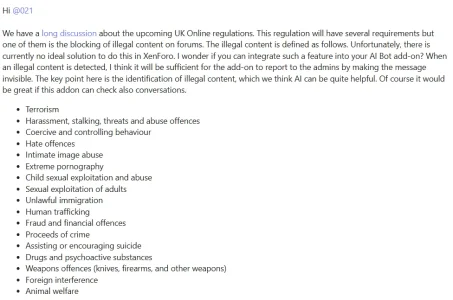

Perhaps the add-on above could be extended to review content and report it to the admins.

NOTES TO EDITORS

- The Online Safety Act lists over 130 ‘priority offences’, and tech firms must assess and mitigate the risk of these occurring on their platforms. The priority offences can be split into the following categories:

- Terrorism

- Harassment, stalking, threats and abuse offences

- Coercive and controlling behaviour

- Hate offences

- Intimate image abuse

- Extreme pornography

- Child sexual exploitation and abuse

- Sexual exploitation of adults

- Unlawful immigration

- Human trafficking

- Fraud and financial offences

- Proceeds of crime

- Assisting or encouraging suicide

- Drugs and psychoactive substances

- Weapons offences (knives, firearms, and other weapons)

- Foreign interference

- Animal welfare

Time for tech firms to act: UK online safety regulation comes into force

People in the UK will be better protected from illegal harms online, as tech firms are now legally required to start taking action to tackle criminal activity on their platforms, and make them safer by design.

www.ofcom.org.uk

Assisting or encouraging suicide - keywords of "go kill yourself" would be flagged and could be checked?

So I recommend an AI implementation. You can censor some words in XenForo, but it is easy to bypass.

/admin.php?options/groups/censoringOptions/

For example, "Go kill yourself" is the phrase you have identified, but if someone writes "Go kll yrslf", it will not be captured, even though people will understand what it means.
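As the post says, a fixed word list is easy to dodge with dropped vowels or digit substitutions. One common mitigation is to normalise both the message and the watch-list phrase before comparing them. The sketch below is a standalone Python illustration, not a XenForo feature or add-on; the leetspeak table and the "drop the vowels" heuristic are illustrative assumptions, and the result is meant for flagging posts for human review rather than auto-blocking.

```python
# Illustrative leetspeak substitutions (an assumption, not a XenForo setting).
LEET = str.maketrans({"0": "o", "1": "i", "3": "e", "4": "a",
                      "5": "s", "7": "t", "@": "a", "$": "s"})
VOWELS = set("aeiou")


def skeleton(text: str) -> str:
    """Lower-case, undo common leetspeak, then keep only consonant letters.

    Spaces, punctuation and vowels are dropped, so "Go kill yourself",
    "Go kll yrslf" and "g0 k1ll y0urs3lf" all reduce to "gkllyrslf".
    """
    text = text.lower().translate(LEET)
    return "".join(ch for ch in text if ch.isalpha() and ch not in VOWELS)


def flag_phrases(message: str, watch_list: list[str]) -> list[str]:
    """Return the watch-list phrases whose skeleton occurs in the message.

    Collapsing words together also produces some false positives,
    so hits should go to a moderator rather than be blocked outright.
    """
    msg = skeleton(message)
    hits = []
    for phrase in watch_list:
        key = skeleton(phrase)
        if key and key in msg:
            hits.append(phrase)
    return hits


if __name__ == "__main__":
    watch = ["go kill yourself"]
    print(flag_phrases("Go kll yrslf", watch))         # ['go kill yourself']
    print(flag_phrases("I love bonsai trees", watch))  # []
```

Short phrases will collide with innocent text more often than long ones, so a real watch list would need tuning against the forum's own posts.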

How can an AI implementation be done in XenForo?

It's more the other "harms" that Ofcom fears children might run into. There does, however, seem to be a general requirement in the OSA to be able to filter content (lest you be exposed to things!), and there is little harm in hiding content your forum might consider "eyebrow-raising" or risqué from immediate viewing. We already use the https://xenforo.com/community/resources/mmo-hide-bb-code-content-system.7752/ system for that, with a preference that opts users into the group that can view content within the appropriate tags, although Ozz/Painbaker's solution looks more automated and polished than our DIY approach.

https://xenforo.com/community/resources/ozzmodz-adult-content-filter.8401/ - would this work to stop porn?
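The opt-in hiding approach described above boils down to a render-time check on the viewer's groups. Below is a minimal Python sketch of that idea, assuming a hypothetical `[mature]` BB code tag and a hypothetical `mature_viewers` group; it is not how the MMO add-on or XenForo actually implement this.

```python
import re

# Hypothetical BB code tag; the real add-on defines its own tag name.
HIDDEN_TAG = re.compile(r"\[mature\](.*?)\[/mature\]", re.IGNORECASE | re.DOTALL)
PLACEHOLDER = "[Hidden content: opt in via your preferences to view]"


def render(bbcode: str, user_groups: set[str],
           opt_in_group: str = "mature_viewers") -> str:
    """Show or hide tagged content depending on the viewer's group membership.

    `opt_in_group` is a hypothetical user group that members join by
    ticking a preference; everyone else sees a placeholder instead.
    """
    if opt_in_group in user_groups:
        return HIDDEN_TAG.sub(r"\1", bbcode)    # unwrap: keep the inner text
    return HIDDEN_TAG.sub(PLACEHOLDER, bbcode)  # replace the whole tagged span
```

Because the check happens when the post is displayed, a member who later opts in or out sees the change immediately without any posts being edited.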

This is a XenForo add-on with various features. I am sure content checking could be integrated into it. We should discuss this with the developer.

This is completely ridiculous. There are no 100% reliable ways to verify the age of a person who registers on your forum, and absolutely no way to ensure that the person using an account is the person who originally registered it. All forums would have to implement an age check like the ones banks use when opening an account (video ID or postal ID), which is very expensive, very complex and absolutely not feasible for online forums, all the more so as it contradicts the right to anonymity as well as GDPR. Plus, probably barely anyone would be willing to go down that route just to register on an online forum, wanting, e.g., to find out more about fish or bonsai trees.

Age verification details released now and not good news

Age checks to protect children online

Children will be prevented from encountering online pornography and protected from other types of harmful content under Ofcom’s new industry guidance which sets out how we expect sites and apps to introduce highly effective age assurance.

www.ofcom.org.uk

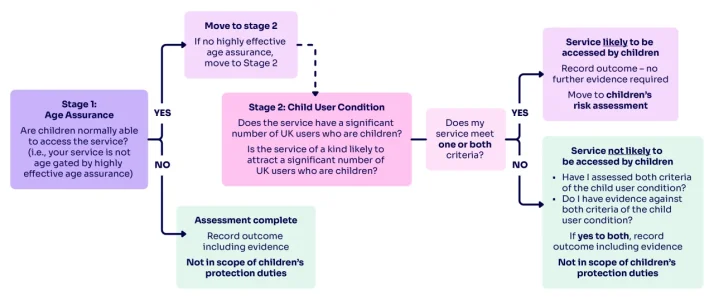

- All user-to-user and search services – defined as ‘Part 3’ services[4] – in scope of the Act, must carry out a children’s access assessment to establish if their service – or part of their service - is likely to be accessed by children.

- Unless they are already using highly effective age assurance and can evidence this, we anticipate that most of these services will need to conclude that they are likely to be accessed by children within the meaning of the Act.

- any age-checking methods deployed by services must be technically accurate, robust, reliable and fair in order to be considered highly effective;

- confirms that methods including self-declaration of age and online payments which don’t require a person to be 18 are not highly effective;

This regulation seems like using a sledgehammer to clean the dishes, an idea born of bureaucrats who are as incompetent as they are irresponsible. They won't solve the problem they want to solve, but the collateral damage is immense and they don't care (and possibly don't even realize it, given the extent of their ignorance and incompetence). What they may achieve, if their plans work, is that in the end the only platforms that continue to exist are huge international commercial corporations that can afford both the risk and the measures. I feel sad for people in the UK.

Hey, are you able to contact them to find out?

Whoa just a minute.

The age checks are primarily if you have adult content from the off - if you have pr0n, you must have age verification. If not, I don’t see where you need age gating. (Do correct me if I’m wrong, though.)

I predict that will last just long enough for some politicians to realise they need to hand over ID to watch pr0n.

Child abuse, sexual harassment and messages.

We have two-way communication in our forums, so there is always a possibility.

That isn't how I am reading it.

It says "All user-to-user and search services – defined as ‘Part 3’ services[4] – in scope of the Act,"

That covers all user-to-user services likely to be accessed by children, and they qualify it by saying that if you don't have an age gate, your service IS likely to be accessed by children.

I'd love you to be right though.

That is great, by all means add me to the convo if you want.

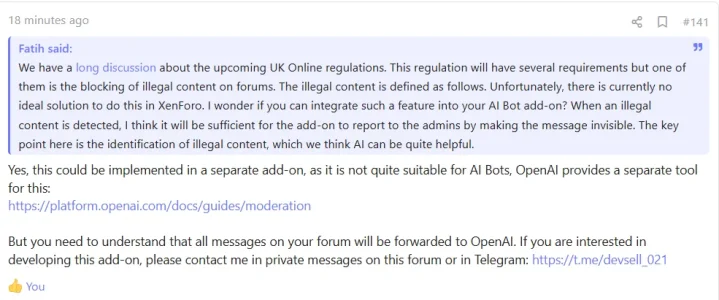

So a user would send a post, it would then go through OpenAI, and if OpenAI deems it OK, the post goes ahead? If it fails to comply, it is rejected or sent to an admin to approve?

What is your thought regarding forwarding messages to OpenAI? Is it OK if the add-on guarantees privacy?

I think we need to be seen to be doing something. Maybe this would be short term until Xenforo do something?

The worst bits in that are all the "state of mind" things, where things depend on the "state of mind" of the poster. It even says something might be okay in one case, but the same content reposted by another user with a different "state of mind" might fall foul. Fun, fun, fun. Luckily I just finished my telepathy add-on for XF...

Pr0n requires age checks.

Age checks might be useful for everyone else.

The workflow is illustrated by Ofcom:

So basically if you can't be sure it's only adults accessing (and to be sure you need to be using one of the approved age checks - which very few will do - "tick I'm 18+" checkboxes or T&C statements are not good enough) then you have to assume children may be accessing the service/site.

Assuming children may be accessing the site, you then need to make a further judgement to decide whether children actually are accessing it; the documentation leans heavily towards "if you can't prove kids are not using it, then kids are using it". Anyhow, if you feel you can confidently say kids are not using the site, job done: you just have the general (huge) risk assessment to do. If you think children might possibly lay eyes on your site, then you also need to do the risk assessment for children. As to the outcome of that, and the features you may or may not need on your forum to meet those risks... well, hard to say.

So no, fundamentally, if you're not a smut forum you don't need any fancy age-checking stuff, as long as you are sure any risks can be managed/mitigated. If your content is a bit more "mixed", then one "easy way out" might be robust age checking to exclude everyone under 18. You've still got all the risk assessment stuff to do, but you can skip the children's risk assessment.
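The decision flow described in the last few paragraphs can be written out as a small function. This encodes only the reading of Ofcom's workflow given in this post, not Ofcom's own wording; the field names and the assessment labels are illustrative. The defaults are `False` because, as noted above, the guidance leans towards assuming children are present unless you can show otherwise.

```python
from dataclasses import dataclass


@dataclass
class Site:
    # An approved, "highly effective" age check; a "tick if 18+" box does not count.
    highly_effective_age_checks: bool = False
    # The further judgement: can you confidently say children are not using the site?
    confident_no_children: bool = False


def assessments_needed(site: Site) -> list[str]:
    """Sketch of the workflow as read in the post above."""
    needed = ["general risk assessment"]  # required whatever the answers
    if site.highly_effective_age_checks:
        # Under-18s are excluded, so the children's assessment can be skipped.
        return needed
    # No robust age checks: assume children MAY be accessing the site,
    # then apply the further judgement.
    if not site.confident_no_children:
        needed.append("children's risk assessment")
    return needed
```

With the defaults, `assessments_needed(Site())` returns both assessments, which matches the "if you can't prove kids are not using it" reading.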

Everyone is focusing on children, but this regulation is not only for children. It covers adults as well.

The Online Safety Act 2023 (the Act) is a new set of laws that protects children and adults online.

The strongest protections in the Act have been designed for children. Platforms will be required to prevent children from accessing harmful and age-inappropriate content and provide parents and children with clear and accessible ways to report problems online when they do arise.

The Act will also protect adult users, ensuring that major platforms will need to be more transparent about which kinds of potentially harmful content they allow, and give people more control over the types of content they want to see.

Online Safety Act: explainer

www.gov.uk

We should discuss the scenario with the developer. I think this can depend on our preference. I guess you can either approve the message first and then show it on the forum, or show it on the forum first and review it afterwards. In the first scenario, messages that pass the filter are published, and messages that do not pass are left to the admins for approval.

Or the developer could add both options as add-on modes.
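The two orderings discussed here (check, then publish; or publish, then check) could be offered as a switchable mode. Below is a minimal Python sketch; `Forum`, `submit`, `run_background_checks` and the `is_flagged` hook are hypothetical names, not XenForo or OpenAI APIs, and the classifier is left as a plain function that would be backed by whatever service the add-on calls.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable


class Mode(Enum):
    PRE_MODERATION = "pre"    # check first; publish only posts that pass
    POST_MODERATION = "post"  # publish immediately; check afterwards


@dataclass
class Post:
    text: str
    visible: bool = False


@dataclass
class Forum:
    mode: Mode
    is_flagged: Callable[[str], bool]  # hypothetical classifier hook (e.g. an AI service)
    approval_queue: list[Post] = field(default_factory=list)  # awaiting an admin
    pending_checks: list[Post] = field(default_factory=list)  # published, not yet checked

    def submit(self, text: str) -> Post:
        post = Post(text)
        if self.mode is Mode.PRE_MODERATION:
            self._check(post)                 # nobody sees it until it passes
        else:
            post.visible = True               # goes live straight away...
            self.pending_checks.append(post)  # ...and is checked later
        return post

    def run_background_checks(self) -> None:
        """Check everything published in post-moderation mode (e.g. from a scheduled job)."""
        while self.pending_checks:
            self._check(self.pending_checks.pop(0))

    def _check(self, post: Post) -> None:
        if self.is_flagged(post.text):
            post.visible = False              # hide it...
            self.approval_queue.append(post)  # ...and leave it to an admin
        else:
            post.visible = True
```

Pre-moderation keeps flagged posts from ever appearing, at the cost of a delay on every post; post-moderation keeps conversation flowing but leaves a window in which harmful content is visible until the background check runs.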

I have Telegram, if you want to take the chat there with the dev. Or add me to the chat.

How far Ofcom takes this is anyone's guess. With SO MANY small forums, they may just take the stance that they will act if any content is reported to them and found to be "harmful", or they could just go to town on us all!