bburton

Member

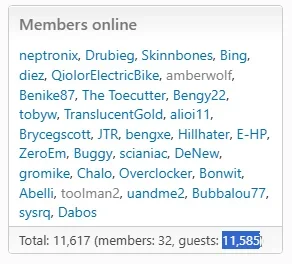

What might cause the xf_session_activity table to fill up repeatedly, so that forum pages fail to load and show an error message instead?

The 'hourly clean up' cron job is running, so that's not the reason. This is occurring between those hourly clean ups - about every 20 minutes or so. Running the 'hourly clean up' manually temporarily 'fixes' the problem, then 20 minutes or so later the xf_session_activity table fills up again and this error occurs repeatedly:

XF\Db\Exception: MySQL query error [1114]: The table 'xf_session_activity' is full

src/XF/Db/AbstractStatement.php:230

The number of logged-in users, registered users included, does not appear unusual.

Thanks,
