tenants

Well-known member

I've written this plugin, and I need testers whose forums get lots of bots but don't necessarily have many users or server resources (for example, new forums on a shared host that are already getting hit by lots of bots).

I had to write this because I was in this situation myself with a new forum: spam bots (not search engine spiders) were using gigabytes of bandwidth per day on a forum with only one or two real users!

This kills start-up forums.

Bots generally visit the index page, look for thread content, go to login/login to get the cookie, then register/register. All of this takes up a fair chunk of bandwidth (particularly if you have thousands of bots).

This plugin checks each visitor against a single API that covers a high percentage of the proxies used by bots on XenForo.

If the visitor comes from a proxy used by a spam bot, it is sent a 404 for the forums, threads, index, registration and login pages (this does not happen for spiders or humans, just spam bots).
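To picture the decision described above, here is a rough sketch in Python. It is not the plugin's actual code (XenForo add-ons are written in PHP). The names `PROTECTED_SECTIONS`, `is_protected`, `respond` and the `is_spam_proxy` callback are all hypothetical, and the URL matching assumes friendly URLs such as `/threads/...`.

```python
from typing import Callable

# Sections named in the post ("" stands for the index page).
# The exact URL matching rules are an assumption for illustration.
PROTECTED_SECTIONS = ("", "forums", "threads", "register", "login")


def is_protected(path: str) -> bool:
    """True for the index, forum, thread, registration and login pages."""
    first_segment = path.strip("/").split("/", 1)[0]
    return first_segment in PROTECTED_SECTIONS


def respond(ip: str, path: str, is_spam_proxy: Callable[[str], bool]) -> int:
    """Return the HTTP status to send for this request.

    is_spam_proxy is a stand-in for the single API lookup; it is only
    called for protected pages, so other pages cost no lookup at all.
    """
    if is_protected(path) and is_spam_proxy(ip):
        return 404  # tiny response, no page rendering, no database queries
    return 200  # spiders and humans get the normal page
```

The point of the design is that the cheap check runs before any page is built, so a flagged visitor never triggers the usual queries or template rendering.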

This significantly reduces CPU usage (only 1 lookup needs to be done, rather than 10-14 queries).

This significantly reduces bandwidth for that page (a few bytes rather than 40-80 KB).
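As a back-of-envelope illustration of what that difference adds up to, here is a small Python calculation. Only the 40-80 KB page size comes from the figures above. The number of bot visits, the pages per visit and the size of a 404 response including headers are assumptions picked for the example, not measurements.

```python
def daily_bytes(requests_per_day: int, bytes_per_response: int) -> int:
    """Total bytes sent per day for a given request volume."""
    return requests_per_day * bytes_per_response


# Assumed inputs (hypothetical, for illustration only)
BOT_VISITS_PER_DAY = 5_000   # "thousands of bots"
PAGES_PER_VISIT = 4          # index, a thread, login/login, register/register
FULL_PAGE_BYTES = 60 * 1024  # midpoint of the 40-80 KB range
BLOCKED_BYTES = 500          # small 404 response including headers

requests = BOT_VISITS_PER_DAY * PAGES_PER_VISIT
before = daily_bytes(requests, FULL_PAGE_BYTES)
after = daily_bytes(requests, BLOCKED_BYTES)

print(f"before: {before / 1024**2:.0f} MiB/day")
print(f"after:  {after / 1024**2:.1f} MiB/day")
```

Swap in the request counts from your own access logs to get a figure for your forum.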

Overall, this means spam bots take up significantly fewer resources. This is fairly essential for any start-up forum that finds it is running out of server resources.

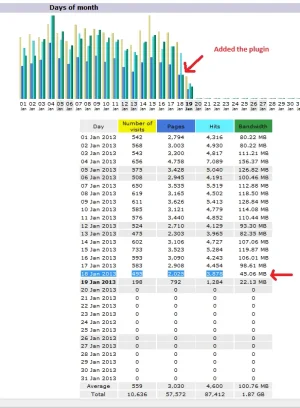

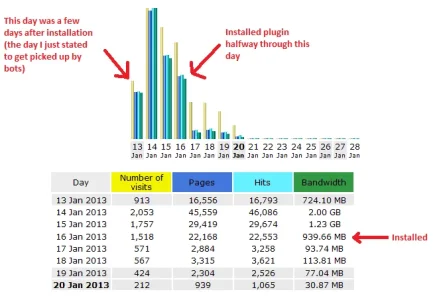

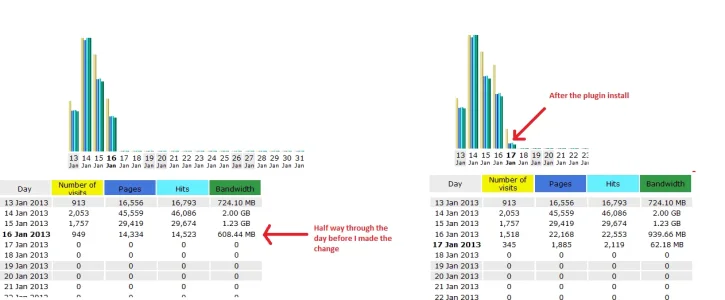

I've only just installed it and found the following:

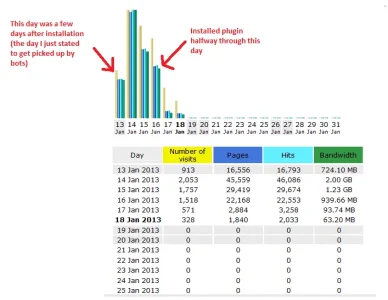

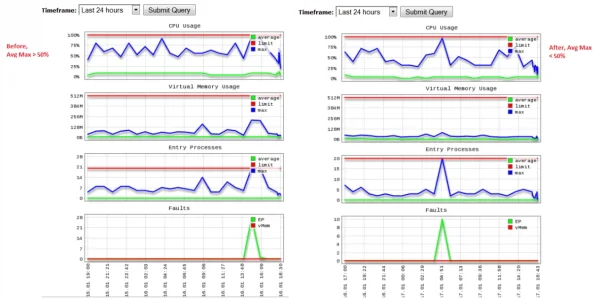

CPU Before and after:

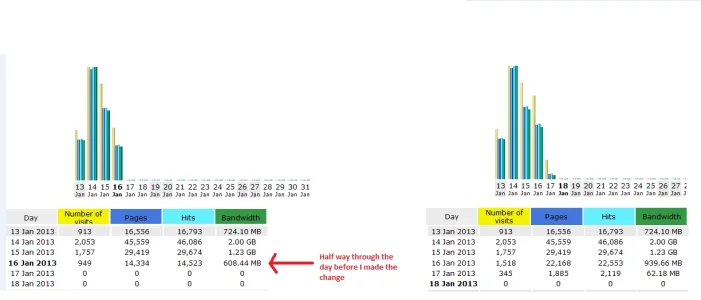

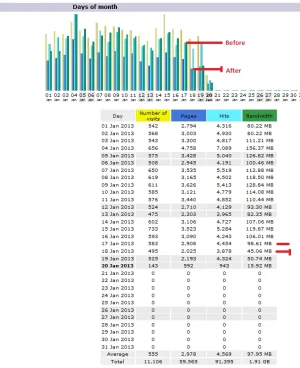

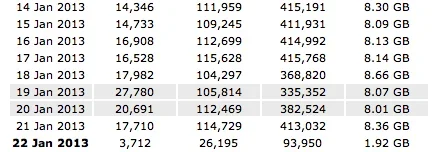

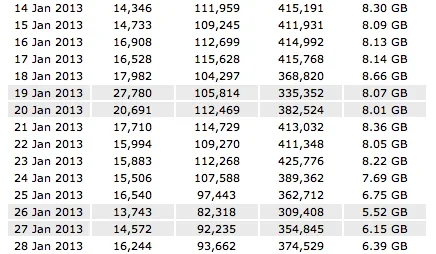

Bandwidth Before / After

I would like to know if it works on other forums as well. If you PM me your email, I'll send you the plugin, but I need screen caps like the ones above. I believe this should be tested for more than a week to know the impact (server resources can really fluctuate daily).

There are no plugin logs (creating logs would increase CPU usage, which would defeat the point), but you can see the 404s in your server access logs and watch the bandwidth and CPU drop via cPanel tools / AWStats.
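If you would rather count the 404s than eyeball them, a short script can tally hits and bytes per status code. This is a minimal sketch that assumes your host writes the Apache common or combined log format. The `summarize` function and the `LINE` pattern are names made up for this example.

```python
import re
from collections import Counter
from typing import Iterable, Tuple

# Matches the start of an Apache common/combined log line:
# host ident user [timestamp] "request" status bytes ...
LINE = re.compile(r'^\S+ \S+ \S+ \[[^\]]+\] "[^"]*" (\d{3}) (\d+|-)')


def summarize(lines: Iterable[str]) -> Tuple[Counter, Counter]:
    """Return (hits per status code, bytes sent per status code)."""
    hits: Counter = Counter()
    sent: Counter = Counter()
    for line in lines:
        match = LINE.match(line)
        if not match:
            continue  # skip lines that are not in the expected format
        status, size = match.group(1), match.group(2)
        hits[status] += 1
        sent[status] += 0 if size == "-" else int(size)
    return hits, sent
```

To use it, open your access log (the location varies by host) and pass the file object in: `hits, sent = summarize(open_file)`, then compare `hits["404"]` and `sent["404"]` against the `"200"` entries before and after installing.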

I still need to keep track of bandwidth and CPU myself, since I've only just installed it, but it would be good if a few others could try it before I release it.