From my understanding, you can still use Disallow in robots.txt, and it is still being read.

Disallow in robots.txt: Search engines can only index pages that they know about, so blocking the page from being crawled usually means its content won't be indexed. While the search engine may also index a URL based on links from other pages, without seeing the content itself, we aim to make such pages less visible in the future.

The above is from here.

Bit of a mixed message though:

From Google's guide to noindex tags (developers.google.com):

Important: For the noindex rule to be effective, the page or resource must not be blocked by a robots.txt file, and it has to be otherwise accessible to the crawler. If the page is blocked by a robots.txt file or the crawler can't access the page, the crawler will never see the noindex rule, and the page can still appear in search results, for example if other pages link to it.

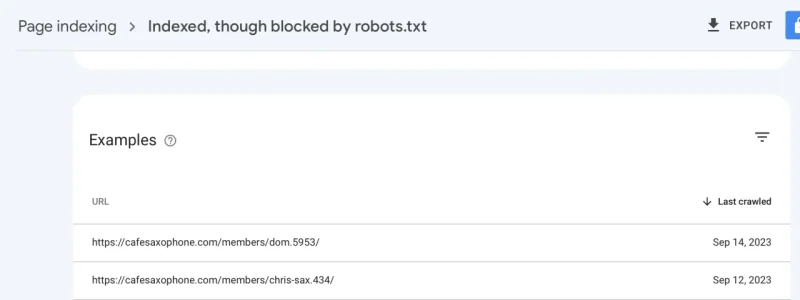

So what they are also saying is that if they can't crawl the page due to a Disallow in robots.txt, they can't know it has a noindex meta tag. And if that is true, it may mean that @AndyB's tip above in post #2 has the same side effect: if Google cannot see the page, but knows it is there due to a link to it, they may still index it.
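To make that concrete, here is a rough sketch using Python's standard urllib.robotparser. The robots.txt content and the example.com URLs are made up for illustration, and this only models the "may I fetch this?" decision, not how Google actually indexes anything. The point is that a crawler that obeys the Disallow never downloads the member page, so it never gets to read any noindex meta tag inside it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt containing the rule discussed in this thread.
ROBOTS_TXT = """\
User-agent: *
Disallow: /members/
"""


def crawler_can_fetch(robots_txt: str, url: str, agent: str = "Googlebot") -> bool:
    """Return True if a crawler obeying robots_txt is allowed to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


# Example URLs are assumptions, not real pages.
blocked = crawler_can_fetch(ROBOTS_TXT, "https://example.com/members/andyb.1/")
allowed = crawler_can_fetch(ROBOTS_TXT, "https://example.com/threads/some-thread.42/")

# If the page cannot be fetched, its <meta name="robots"> tag is never read.
print("member page fetchable:", blocked)
print("thread page fetchable:", allowed)
```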

See here (that report was crawled with both a robots.txt Disallow: /members/ rule and permission denied to guests):

This makes me think the ideal answer is not to block the page but to use a noindex meta tag (and make sure the page is NOT in the sitemap).
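If it helps anyone testing this, below is a small sketch for checking whether a page's HTML actually contains a robots noindex meta tag. It uses only Python's built-in html.parser; the class and function names are my own, and it only looks at the meta tag (not the X-Robots-Tag HTTP header), so treat it as a quick sanity check rather than a full audit.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        # Self-closing tags (<meta ... />) are routed here as well.
        if tag != "meta":
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() == "robots":
            content = attr.get("content") or ""
            self.directives += [d.strip().lower() for d in content.split(",") if d.strip()]


def has_noindex(html: str) -> bool:
    """Return True if the HTML carries a robots meta tag with noindex."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    return "noindex" in finder.directives


# Made-up page fragments for illustration.
with_tag = '<head><meta name="robots" content="noindex" /></head>'
without_tag = "<head><title>Member profile</title></head>"
print(has_noindex(with_tag), has_noindex(without_tag))
```

Paste in the page source as a guest would see it (logged out), since that is closest to what a crawler receives.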

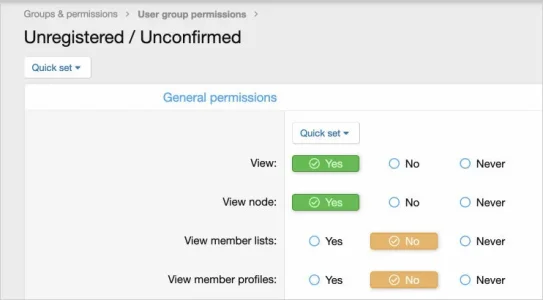

So I have removed the Disallow from robots.txt. Now I'm just wondering about giving guest permission back and conditionally noindexing with a meta tag.

However, I'm confused by the conditional in member_view that @JoyFreak mentions above:

Code:

<xf:if is="!$user.isSearchEngineIndexable()">
    <xf:head option="metaNoindex"><meta name="robots" content="noindex" /></xf:head>
</xf:if>

I took the conditional to apply to users who are excluded from the sitemap and/or denied permissions, but that doesn't seem to work: if I do exclude users via the sitemap and/or permissions, there is still no noindex meta tag.

So what does this actually mean, and what is the condition that will make it show?