You are using an out of date browser. It may not display this or other websites correctly.

You should upgrade or use an alternative browser.

You should upgrade or use an alternative browser.

Help optimizing mysql high server load!

- Thread starter NeoCHI

- Start date

Are you hosting other sites on the VPS? It looks like you are running suPHP from the htop output.

Only one xenforo site and it's on a dedicated not VPS. My first post has a link to the specs.

Where do you see suPHP and what is it?

MattW

Well-known member

Sorry, forgot it was dedicated. The fact that your index.php file is being run as the user suggests it's running suPHP.Only one xenforo site and it's on a dedicated not VPS. My first post has a link to the specs.

Where do you see suPHP and what is it?

Ic, is that a possible issue?Sorry, forgot it was dedicated. The fact that your index.php file is being run as the user suggests it's running suPHP.

MattW

Well-known member

It's a very inefficient way of serving your PHP files. It also means you can use any form of opcaching. If it's only your site on the dedicated server, I'd swap apache to use DSO as the handler. It will mean you need to make the data and internal_data directory 777 permissions with chmod. BUT, you can then use Xcache/APC/ZendOpcache.Ic, is that a possible issue?

m1ne

Well-known member

Made the changes and apparently I have KeepAlive off (found it set in my WHM).

Btw, do I have to worry about:

Joins performed without indexes: 362760

No, don't worry about that. How is MySQL load now?

No, don't worry about that. How is MySQL load now?

It's ok, but it usually is until BAM it's suddenly spiking over 30+.

Hopefully, that never happens again.

It's a very inefficient way of serving your PHP files. It also means you can use any form of opcaching. If it's only your site on the dedicated server, I'd swap apache to use DSO as the handler. It will mean you need to make the data and internal_data directory 777 permissions with chmod. BUT, you can then use Xcache/APC/ZendOpcache.

That sounds complicated....I'm not great with server stuff. Anyway I can possibly just change some settings and optimize what I'm currently using?

m1ne

Well-known member

It's ok, but it usually is until BAM it's suddenly spiking over 30+.

Hopefully, that never happens again.

That sounds complicated....I'm not great with server stuff. Anyway I can possibly just change some settings and optimize what I'm currently using?

Doesn't cPanel allow you to change that easily? There should be a FastCGI option.

Doesn't cPanel allow you to change that easily? There should be a FastCGI option.

Setting FastCGI does?:

- swap apache to use DSO as the handler.

- make the data and internal_data directory 777 permissions with chmod.

- use Xcache/APC/ZendOpcache.

MattW

Well-known member

No, it doesn't do any of that.Setting FastCGI does?:

- swap apache to use DSO as the handler.

- make the data and internal_data directory 777 permissions with chmod.

- use Xcache/APC/ZendOpcache.

http://docs.cpanel.net/twiki/bin/view/EasyApache/Php/RequestHandling

Sigh, server is still having issues. Here's what mysql tuner is showing:

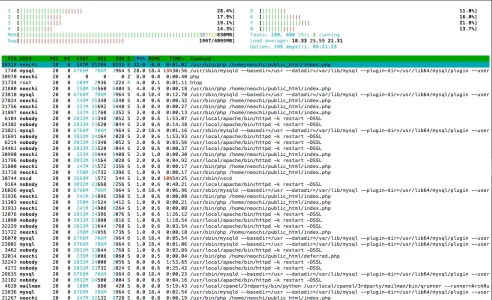

I've also uploaded a screenshot of htop during it going crazy.

Admitedly last time I didn't add:

Cause I read online type 1 sometimes is harder on CPU but I've changed it now. Here's my current my.cnf:

Also, it seems like whenever this starts to happen it lingers until I restart mysql. I got the following email 10 hours ago and before I had just restart mysql it was still kind of going berzerk:

Code:

>> MySQLTuner 1.3.0 - Major Hayden <major@mhtx.net>

>> Bug reports, feature requests, and downloads at http://mysqltuner.com/

>> Run with '--help' for additional options and output filtering

[OK] Currently running supported MySQL version 5.5.36-cll

[OK] Operating on 64-bit architecture

-------- Storage Engine Statistics -------------------------------------------

[--] Status: +ARCHIVE +BLACKHOLE +CSV -FEDERATED +InnoDB +MRG_MYISAM

[--] Data in MyISAM tables: 411M (Tables: 26)

[--] Data in InnoDB tables: 1G (Tables: 361)

[--] Data in PERFORMANCE_SCHEMA tables: 0B (Tables: 17)

[--] Data in MEMORY tables: 9M (Tables: 8)

[!!] Total fragmented tables: 65

-------- Security Recommendations -------------------------------------------

[OK] All database users have passwords assigned

-------- Performance Metrics -------------------------------------------------

[--] Up for: 1d 11h 56m 57s (30M q [236.376 qps], 1M conn, TX: 1229B, RX: 8B)

[--] Reads / Writes: 70% / 30%

[--] Total buffers: 2.3G global + 2.8M per thread (200 max threads)

[OK] Maximum possible memory usage: 2.8G (75% of installed RAM)

[OK] Slow queries: 0% (32K/30M)

[!!] Highest connection usage: 100% (201/200)

[OK] Key buffer size / total MyISAM indexes: 32.0M/350.3M

[OK] Key buffer hit rate: 100.0% (4B cached / 1M reads)

[OK] Query cache efficiency: 69.2% (15M cached / 22M selects)

[!!] Query cache prunes per day: 44314

[OK] Sorts requiring temporary tables: 0% (385 temp sorts / 758K sorts)

[!!] Joins performed without indexes: 277136

[!!] Temporary tables created on disk: 48% (316K on disk / 653K total)

[OK] Thread cache hit rate: 99% (2K created / 1M connections)

[OK] Table cache hit rate: 92% (1K open / 2K opened)

[OK] Open file limit used: 5% (255/4K)

[OK] Table locks acquired immediately: 99% (18M immediate / 18M locks)

[!!] Connections aborted: 12%

[OK] InnoDB buffer pool / data size: 2.0G/1.7G

[OK] InnoDB log waits: 0

-------- Recommendations -----------------------------------------------------

General recommendations:

Run OPTIMIZE TABLE to defragment tables for better performance

Reduce or eliminate persistent connections to reduce connection usage

Adjust your join queries to always utilize indexes

When making adjustments, make tmp_table_size/max_heap_table_size equal

Reduce your SELECT DISTINCT queries without LIMIT clauses

Your applications are not closing MySQL connections properly

Variables to adjust:

max_connections (> 200)

wait_timeout (< 10)

interactive_timeout (< 10)

query_cache_size (> 128M)

join_buffer_size (> 128.0K, or always use indexes with joins)

tmp_table_size (> 128M)

max_heap_table_size (> 128M)I've also uploaded a screenshot of htop during it going crazy.

Admitedly last time I didn't add:

Code:

query_cache_type = 1

query_cache_limit=2MCause I read online type 1 sometimes is harder on CPU but I've changed it now. Here's my current my.cnf:

Code:

[mysqld]

# Settings user and group are ignored when systemd is used.

# If you need to run mysqld under different user or group,

# customize your systemd unit file for mysqld according to the

# instructions in http://fedoraproject.org/wiki/Systemd

datadir=/var/lib/mysql

socket=/var/lib/mysql/mysql.sock

# Disabling symbolic-links is recommended to prevent assorted security risks

symbolic-links=0

open_files_limit=3072

query_cache_size=128M

max_connections=200

max_user_connections=100

wait_timeout=10

interactive_timeout=10

tmp_table_size=128M

max_heap_table_size=128M

thread_cache_size=64

key_buffer_size=32M

max_allowed_packet=16M

table_cache=2048

table_definition_cache=3072

#delayed_insert_timeout=20 # Turn on if max_connections being reached due to delayed inserts

#delayed_queue_size=300 # Turn on if max_connections being reached due to delayed inserts

myisam_sort_buffer_size=2M # can be increased per sessions if needed for alter tables (indexes, repair)

query_cache_type = 1

query_cache_limit=2M # leave at default unless there is a good reason

#join_buffer=2M # leave at default unless there is a good reason

#sort_buffer_size=2M # leave at default unless there is a good reason

#read_rnd_buffer_size=256K # leave at default unless there is a good reason

#read_buffer_size=2M # leave at default unless there is a good reason

collation_server=utf8_unicode_ci

character_set_server=utf8

#general_log=1

slow_query_log=1

log-output=TABLE # select * from mysql.general_log order by event_time desc limit 10;

long_query_time=5 # select * from mysql.slow_log order by start_time desc limit 10;

innodb_file_per_table=1

innodb_flush_method=O_DIRECT

innodb_buffer_pool_size=2000M # check mysql -e "SHOW GLOBAL STATUS LIKE 'Innodb_buffer_pool%';" - free vs total

innodb_additional_mem_pool_size=29M

innodb_log_buffer_size=29M

innodb_thread_concurrency=8 # Number of physical + virtual CPU's, preset when server is provisioned to have correct # of cores

[mysqld_safe]

log-error=/var/log/mysqld.log

pid-file=/var/run/mysqld/mysqld.pidAlso, it seems like whenever this starts to happen it lingers until I restart mysql. I got the following email 10 hours ago and before I had just restart mysql it was still kind of going berzerk:

The chkservd sub-process with pid 24794 ran for 787 seconds. This sub-process was terminated when it exceeded the time allowed between checks, which is 300 seconds. To determine why, you can check /var/log/chkservd.log and /usr/local/cpanel/logs/tailwatchd_log.

You likely received this notification as a symptom of a larger problem. If your server is experiencing a high load, we recommend investigating the cause. If you continue to receive this notification, it is likely that your system is unable to handle demand or a misconfiguration is delaying restarts.

If you are sure that no misconfigurations exist, you should consider gradually increasing the following options in WHM's "Tweak Settings" feature: "The number of times ChkServd will allow a previous check to complete before terminating the check" and/or "The number of seconds between ChkServd service checks".

Server:app.appinvasion.com

Primary IP:162.144.57.127

Service:chkservd

Notification Type:hang

Memory Information:

Load Information:242.58 200.97 184.10

- Used: 3134MB

- Available: 509MB

- Installed: 3830MB

Uptime:195 days, 3 hours, 51 seconds

IOStat Information:

avg-cpu: %user %nice %system %iowait %steal %idle

16.44 0.06 5.50 2.66 0.00 75.34

Device: tps Blk_read/s Blk_wrtn/s Blk_read Blk_wrtn

vda 65.69 859.79 1677.32 14497663460 28282789592

vdb 2.57 36.26 20.83 611423808 351299104

ChkServd Version:15.3

Attachments

I strongly recommend changing your handler to FastCGI. You will need to check it off in Easy Apache and then rebuild Apache in WHM. I would also consider upgrading default MySQL to Percona and if GD offers it, moving MySQL to a SSD.

I'm not great with server stuff so I try to keep it simple encase I mess something up and don't have the know how to roll it back.

So what exactly do I need to do to change it to FastCGI aswell as what other settings do I need to change to actually make it better?

So my host is helping switch to FastCGI with APC cache. Is there anything I need to do settings or config wise to make this work after they've helped me switch?

I've read I need to add some code to my config.php http://xenforo.com/community/threads/apc-and-caching.30041/

I've read I need to add some code to my config.php http://xenforo.com/community/threads/apc-and-caching.30041/

Jattitude

Member

You have a server which is fully managed and you should not be fiddling around with things that are being suggested here in my opinion. Get your managed server provider to sort it out for you. If they say that it is ouf of the scope of service they provide then I would say you are over paying for that box.

Get a new box and get php 5.5 with DSO as handler if yours is the only websites on the server. Get zend opcache and memcached with it. keep mod security off. Cache cache cache cache cache is the answer. All this is supported in WHM. You can also use an nginx pluging (works as a proxy to apache) which will speed things up for you in ways you cannot imagine if you havent tried it yet.

I use this page cache plugin someone has in the resources section. I cant remember how much it was, may be $5-10 I cannot remember but it is worth every penny. Use that and your website should fly

Dont forget to get a good dsn service along with a decent CDN.

I would personally not recomend changing the php handler in production environment. Get a new box and do everything from scratch and move your site there and dump the old one.

Get a new box and get php 5.5 with DSO as handler if yours is the only websites on the server. Get zend opcache and memcached with it. keep mod security off. Cache cache cache cache cache is the answer. All this is supported in WHM. You can also use an nginx pluging (works as a proxy to apache) which will speed things up for you in ways you cannot imagine if you havent tried it yet.

I use this page cache plugin someone has in the resources section. I cant remember how much it was, may be $5-10 I cannot remember but it is worth every penny. Use that and your website should fly

Dont forget to get a good dsn service along with a decent CDN.

I would personally not recomend changing the php handler in production environment. Get a new box and do everything from scratch and move your site there and dump the old one.

Similar threads

- Replies

- 4

- Views

- 485

- Replies

- 1

- Views

- 721

- Replies

- 13

- Views

- 3K

- Replies

- 9

- Views

- 1K