[DigitalPoint] App for Cloudflare® 1.9.8.1

- Thread starter digitalpoint

digitalpoint

Well-known member

> I've disabled all addons to get back in, and manually disabled this, and the admin panel loads again.

It's just the index, so a workaround for now is to go to any other admin page like: admin.php?add-ons/

digitalpoint

Well-known member

Seems like Cloudflare got their API sorted out now. I did figure out where an API call is being made for the stats block, so still going to do away with that. It's making an API call to determine the domain/zone for building the direct link into your Cloudflare account for the button in the stats block header (in case anyone is curious).

Deciding now if we should cache the domain so it doesn't need to make an API call or if that deeplink into your Cloudflare account is even worth the effort (maybe remove it).

digitalpoint

Well-known member

digitalpoint updated [DigitalPoint] App for Cloudflare® with a new update entry:

Ability to create firewall rule for ASNs

- Fixed issue with creating Turnstile site via API (Cloudflare updated schema for API call)

- Added ASN support when creating IP address rules

- Cache Cloudflare zone/domain (makes it so an API call is not necessary on the admin index page to build deeplink to your zone in your Cloudflare account)
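The caching change in the last item can be pictured with a minimal sketch. This is not the add-on's code: `ZoneDomainCache`, the in-memory store, the one-hour lifetime and the `$fetch` callable (standing in for the API lookup) are all assumptions made for illustration.

```php
<?php
// Hypothetical cache wrapper; $fetch stands in for the API call that
// resolves a zone ID to its domain. Not taken from the add-on.
final class ZoneDomainCache
{
    /** @var array<string, array{domain: string, expires: int}> */
    private array $store = [];   // in-memory stand-in for a persistent cache
    private int $ttl;

    public function __construct(int $ttlSeconds)
    {
        $this->ttl = $ttlSeconds;
    }

    public function get(string $zoneId, callable $fetch): string
    {
        $now = time();
        if (isset($this->store[$zoneId]) && $this->store[$zoneId]['expires'] > $now) {
            // Cache hit: no API call needed.
            return $this->store[$zoneId]['domain'];
        }
        // Cache miss or expired entry: do the lookup once and remember it.
        $domain = $fetch($zoneId);
        $this->store[$zoneId] = ['domain' => $domain, 'expires' => $now + $this->ttl];
        return $domain;
    }
}

// Usage: count how often the (fake) API lookup actually runs.
$apiCalls = 0;
$fetch = function (string $zoneId) use (&$apiCalls): string {
    $apiCalls++;
    return 'example.com'; // placeholder domain
};

$cache = new ZoneDomainCache(3600);
$first = $cache->get('zone-abc', $fetch);
$second = $cache->get('zone-abc', $fetch); // served from the cache
```

With the lookup cached, the admin index page would only trigger the API call when the entry is missing or has expired.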

> The fact that this thread isn't flooded with people seeing the same thing makes me think it's not something on Cloudflare's side.

Maybe it has something to do with 1M attachments we have?

The situation is significantly better after migrating back from R2 to local drive.

Now seeing 20 errors grouped together regarding purging the cache:

Code:

    Error: Call to a member function getBody() on array src/addons/DigitalPoint/Cloudflare/Traits/XF.php:146
    Generated by: ... 15.06.2023. u 06:48

    Stack trace
    #0 src/addons/DigitalPoint/Cloudflare/Api/Cloudflare.php(618): DigitalPoint\Cloudflare\Api\Cloudflare->parseResponse(Array)
    #1 src/addons/DigitalPoint/Cloudflare/Api/Cloudflare.php(501): DigitalPoint\Cloudflare\Api\CloudflareAbstract->makeRequest('POST', 'zones/......', Array)
    #2 src/addons/DigitalPoint/Cloudflare/Repository/Cloudflare.php(1014): DigitalPoint\Cloudflare\Api\CloudflareAbstract->purgeCache('......', Array)
    #3 src/addons/DigitalPoint/Cloudflare/XF/Entity/Post.php(52): DigitalPoint\Cloudflare\Repository\CloudflareAbstract->purgeCache(Array)
    #4 src/addons/DigitalPoint/Cloudflare/XF/Entity/Post.php(10): DigitalPoint\Cloudflare\XF\Entity\Post->purgeCloudflareCache()
    #5 src/addons/XFES/XF/Entity/Post.php(9): DigitalPoint\Cloudflare\XF\Entity\Post->_postSave()
    #6 src/XF/Mvc/Entity/Entity.php(1277): XFES\XF\Entity\Post->_postSave()
    #7 src/XF/Service/Thread/Replier.php(214): XF\Mvc\Entity\Entity->save(true, false)
    #8 src/XF/Service/ValidateAndSavableTrait.php(42): XF\Service\Thread\Replier->_save()
    #9 src/XF/Pub/Controller/Thread.php(616): XF\Service\Thread\Replier->save()
    #10 src/XF/Mvc/Dispatcher.php(352): XF\Pub\Controller\Thread->actionAddReply(Object(XF\Mvc\ParameterBag))
    #11 src/XF/Mvc/Dispatcher.php(259): XF\Mvc\Dispatcher->dispatchClass('XF:Thread', 'AddReply', Object(XF\Mvc\RouteMatch), Object(SV\ElasticSearchEssentials\XF\Pub\Controller\Thread), NULL)
    #12 src/XF/Mvc/Dispatcher.php(115): XF\Mvc\Dispatcher->dispatchFromMatch(Object(XF\Mvc\RouteMatch), Object(SV\ElasticSearchEssentials\XF\Pub\Controller\Thread), NULL)
    #13 src/XF/Mvc/Dispatcher.php(57): XF\Mvc\Dispatcher->dispatchLoop(Object(XF\Mvc\RouteMatch))
    #14 src/XF/App.php(2487): XF\Mvc\Dispatcher->run()
    #15 src/XF.php(524): XF\App->run()
    #16 index.php(20): XF::runApp('XF\\Pub\\App')
    #17 {main}

    Request state
    array(4) {
      ["url"] => string(127) "/threads/.../add-reply"
      ["referrer"] => string(145) "https://.../threads/....976266/"
      ["_GET"] => array(1) {
        ["/threads/...976266/add-reply"] => string(0) ""
      }
      ["_POST"] => array(10) {
        ["_xfToken"] => string(8) "********"
        ["message_html"] => string(178) "..."
        ["attachment_hash"] => string(32) "..."
        ["attachment_hash_combined"] => string(88) "..."
        ["last_date"] => string(10) "..."
        ["last_known_date"] => string(10) "..."
        ["load_extra"] => string(1) "1"
        ["_xfRequestUri"] => string(118) "/threads/....976266/"
        ["_xfWithData"] => string(1) "1"
        ["_xfResponseType"] => string(4) "json"
      }
    }

digitalpoint

Well-known member

No, it’s not the number of attachments, it’s connectivity issues between your server and Cloudflare.

I hate to say it, but you probably want to disable guest page caching. Your server is having connectivity problems to Cloudflare (it’s not an R2 issue, it’s a connectivity issue to Cloudflare for your server). Looks to me like you are getting errors with any API call (that’s the API call for purging the cache). Trying to run it async isn’t going to solve the problem, it’s only going to hide that there is a problem.

Like I said in a previous post, if this was a widespread issue with Cloudflare, this thread would be completely filled with everyone seeing the same thing. But it seems to just be your server for whatever reason.

It might be useful to see what your server is getting as a response to the API (like maybe it’s something from a firewall or proxy somewhere) to help you figure it out. I could give you some code to add to (maybe) help your server administrator debug where the problem is if you want.

Firewall log doesn't show anything.

WordPress CF plugin reports on the other server (in the same DC): "cURL error 28: Operation timed out after 30001 milliseconds with 0 bytes received" and "502 Bad Gateway" couple of times a day, so that might be it.

It would be nice to have more descriptive error reports.

Also I didn't mean to hide this error, but to do CF communication async, because currently when this happens users see the dialog "Oops! We ran into some problems. Please try again later. More error details may be in the browser console." and we get complaints immediately.

Disabled guest page caching for now.

digitalpoint

Well-known member

> Firewall log doesn't show anything.

It's not necessarily a firewall on your server. I'm talking more about firewalls that your entire server is behind, not a firewall on the server itself. It literally could be one of a million things, most of which are outside your physical server. Every hop, switch and gateway the network traffic passes through on its route to Cloudflare could have network configuration issues (for example, a bad MTU for network packets), and any of those hops could be passing through its own firewall or proxy that could be a problem. It could even be a DNS misconfiguration.

> WordPress CF plugin reports on the other server (in the same DC): "cURL error 28: Operation timed out after 30001 milliseconds with 0 bytes received" and "502 Bad Gateway" couple of times a day, so that might be it.

Ya, it's probably not something with your physical server itself, but rather the network connection of that server. So chances are high that other servers on the same network are going to give you the same sort of response. The fact that you have a different server in the same DC also giving you timeout errors on API calls just gives more data showing it's an underlying network connectivity issue... something upstream from both of the servers.

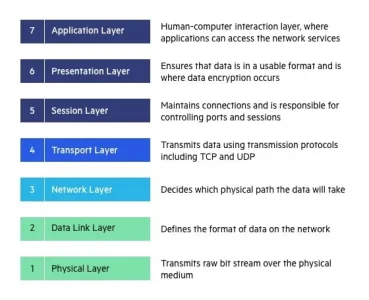

> It would be nice to have more descriptive error reports.

It would be nice, but you are kind of running blind with network connectivity issues because you are simply at a lower OSI layer that isn't able to give feedback and send down error messages. If you are having issues at the Network, Transport or Session layer (which is my guess)... all of that is happening with the network equipment between your server and Cloudflare.

For example, let's say there's a network layer issue where an MTU packet size was defined by a switch your traffic passes through, and a different switch in the network path can't handle packets of that size. The device that can't handle it can't send a human-readable error message back as a packet response; it just fails because there's no place or mechanism to send an error to. The device itself is probably generating error logs, but if no one is monitoring them, they just get lost.

Network connectivity is a tough problem to sort out because you are dealing with so many different devices in the network path, and some settings (like MTU) will still work most of the time when they are set incorrectly.

> Also I didn't mean to hide this error, but to do CF communication async, because currently when this happens users see the dialog "Oops! We ran into some problems. Please try again later. More error details may be in the browser console." and we get complaints immediately.
>
> Disabled guest page caching for now.

Well, the issue with trying to do them with async requests is you start making everything a lot more complicated. Let's say a user replies to a thread... that's already done with an AJAX request. So do we really want an AJAX request result to trigger another AJAX request after that one loads in the new content (post)? You are then relying on a user's browser being cooperative to get backend server tasks done properly. In the case of a post, there are also numerous ways a post can be edited/deleted (user edits, sometimes with AJAX, sometimes without, inline moderation, spam cleaner, etc.), so instead of just hooking into the underlying entity (what is happening now) and doing something based on the entity changes, you would need to figure out all the ways an entity could change and account for all of those ways. It would also make it not work if a third-party addon ever edits a post. You just start going down a rabbit hole that's going to cause so many problems it's crazy.

It would kind of be like if you wanted to count the number of cars passing over a specific bridge... the best way to do it is count them at the bridge, because we don't care about how they passed over the bridge, just that they did. Not doing it at the entity level would be similar to trying to figure out all the ways a vehicle could theoretically pass over a bridge and then account for all those ways individually. It would be a never ending issue of figuring out new ways a post could be edited since we no longer are doing it at the entity level (currently we don't care how it was edited, just that it was edited).

If you have shell access to your server, you might want to do some simple ping/packet loss monitoring as a first step... Over the course of an hour or two, see if the server is getting packet loss to Cloudflare servers. If it is, at least it's something to go on and show your hosting company.
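As a rough sketch of that kind of monitoring, the script below probes a host at intervals and summarises how many probes failed. Note the assumptions: it times TCP connections on port 443 rather than sending ICMP pings (so it is a stand-in for `ping`, not a replacement), the function names are made up for this example, and the host, interval and probe count are just placeholders.

```php
<?php
// Try one TCP connection; returns elapsed milliseconds, or null on failure/timeout.
// This is a TCP connect check, not an ICMP ping.
function tcpProbe(string $host, int $port, float $timeoutSeconds): ?float
{
    $start = microtime(true);
    $socket = @fsockopen($host, $port, $errno, $errstr, $timeoutSeconds);
    if ($socket === false) {
        return null;
    }
    fclose($socket);
    return (microtime(true) - $start) * 1000.0;
}

// Summarise probe results (floats in ms, null for failed probes).
function summarise(array $results): array
{
    $ok = array_values(array_filter($results, fn($r) => $r !== null));
    $total = count($results);
    $failed = $total - count($ok);
    return [
        'sent' => $total,
        'failed' => $failed,
        'loss_percent' => $total > 0 ? 100.0 * $failed / $total : 0.0,
        'max_ms' => $ok ? max($ok) : null,
    ];
}

// Only probe the network when run explicitly: php probe.php --run
if (PHP_SAPI === 'cli' && in_array('--run', $argv ?? [], true)) {
    $results = [];
    for ($i = 0; $i < 60; $i++) {          // 60 probes, one per minute (about an hour)
        $results[] = tcpProbe('api.cloudflare.com', 443, 5.0);
        sleep(60);
    }
    print_r(summarise($results));
}
```

A non-zero `failed` count over an hour or two would be something concrete to show a hosting company; a clean run does not prove the network path is healthy.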

If WordPress plugin can log specific error code, why can't we? Am I missing something?

There has to be some way to avoid displaying error dialog "Oops! We ran into some problems. Please try again later. More error details may be in the browser console." to users. It doesn't make any sense to bother them with CF connectivity.

Nice idea regarding ping. Should I use api.cloudflare.com?

digitalpoint

Well-known member

> If WordPress plugin can log specific error code, why can't we? Am I missing something?
>
> There has to be some way to avoid displaying error dialog "Oops! We ran into some problems. Please try again later. More error details may be in the browser console." to users. It doesn't make any sense to bother them with CF connectivity.

No, you are right on that... in fact, I'm already testing something on my end to see if we can at least get the HTTP error code logged. It usually does, but not always (when it gets that error about the getBody() method not being a thing). Working on building a test setup on my end that can artificially cause API calls to fail so I can at least do some testing. But yes, you are correct in that at the very least it would be nice to get the HTTP error code (and I'm already working on that).

> Nice idea regarding ping. Should I use api.cloudflare.com?

Ya, that would be a good place to start. Maybe it won't yield anything useful... it's not going to be a definitive answer that nothing is wrong if pings are coming back properly, but it's an easy thing to check (if there's packet loss, there's definitely something wrong).

digitalpoint

Well-known member

Okay, so I did figure out why that getBody() error was happening instead of logging the underlying HTTP error code. It's been fixed for the next version, but if you want to fix it now, go to the DigitalPoint\Cloudflare\Api\Cloudflare.php file and change this:

PHP:

    $response = $this->parseResponse($response);

to this:

PHP:

    if (!is_array($response))
    {
        // The response is already parsed (an array) if the request failed more than once.
        // In the normal case (0 or 1 failures) it has not been parsed yet.
        $response = $this->parseResponse($response);
    }

That should at least have it logging the underlying HTTP code as intended.

It has to do with the addon trying to handle a couple of error codes automatically behind the scenes. If the request fails twice (it always does an automatic retry), it parses the response and looks for a couple of different error codes related to invalid Cloudflare account and zone IDs. That getBody() error pops up when a request has failed twice and the response has been parsed looking for the error, but then later down the road it tries to parse the response normally (you can't parse an already parsed response).
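The double-parse problem and the guard can be reproduced in isolation. This is a minimal sketch, not the add-on's code: `FakeResponse`, `parseResponse()` and `parseResponseOnce()` are hypothetical stand-ins, and the error code in the JSON body is invented for the example.

```php
<?php
// Hypothetical stand-in for an HTTP response object (not the real client class).
final class FakeResponse
{
    private string $body;

    public function __construct(string $body)
    {
        $this->body = $body;
    }

    public function getBody(): string
    {
        return $this->body;
    }
}

// Decode a raw response into an array. Calling this with an array instead of a
// response object is what produces "Call to a member function getBody() on array".
function parseResponse(FakeResponse $response): array
{
    $decoded = json_decode($response->getBody(), true);
    return is_array($decoded) ? $decoded : [];
}

// Guarded variant: accepts either a raw response or an already parsed array.
function parseResponseOnce($response): array
{
    if (!is_array($response)) {
        $response = parseResponse($response);
    }
    return $response;
}

// Illustrative body; the error code is made up.
$raw = new FakeResponse('{"success":false,"errors":[{"code":1234}]}');

$first = parseResponseOnce($raw);     // parsed early, as on the failed-twice path
$second = parseResponseOnce($first);  // the later "normal" parse is now a no-op
```

The second call returns the array unchanged instead of calling `getBody()` on it, which is the behavior the `is_array()` check above is meant to give.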

Great. Will that cover "Operation timed out" error, too?

And the most important is to avoid displaying any error to the end user, such as dialog "Oops! We ran into some problems. Please try again later. More error details may be in the browser console."

If you can make it happen, then I can safely enable guest page caching and nobody but ourselves would know about any CF related error.

digitalpoint

Well-known member

> Great. Will that cover "Operation timed out" error, too?

It should cover any HTTP related errors (which includes the request timing out).

> And the most important is to avoid displaying any error to the end user, such as dialog "Oops! We ran into some problems. Please try again later. More error details may be in the browser console."

Ya, that was always the intent (to log the error to the server error log, but not present an exception to the end user). It was a related but different issue that I explained in my previous post (where in some cases [if the request failed twice], it would have already parsed the response looking for certain errors). It rarely pops up because it's rare for a request to ever fail twice under normal circumstances. But yes... that's been fixed for the next release.

> If you can make it happen, then I can safely enable guest page caching and nobody but ourselves would know about any CF related error.

Well, the user wouldn't get an error, but if the server is timing out on API requests, that long wait is going to pass through to the user's request. If the server takes 30 seconds to do an API call, the user is going to have +30 seconds for the AJAX request that triggered it (like in the case of them posting in a thread that needs to have some cached URLs purged). So it's not really a full solution for everything. You are best off still trying to get to the bottom of the underlying network connectivity issues.

digitalpoint

Well-known member

> Maybe we should have smaller timeout (i.e. 5 seconds for CF API), so in case that something is wrong user won't notice the difference?

It still doesn't fix the root of the problem though, and the shorter you make the timeout, the higher the chance you have problems because you caused the API call to fail by not waiting long enough. In a normal scenario an API call shouldn't take longer than 0.1 seconds. But if it does, is it better to force the API call to terminate because it was being temporarily slow, or is it better to let it run and actually complete? There are a lot of things happening via the API beyond purging pages from cache (which is not catastrophic if it doesn't complete). Things like adding or removing IP address blocks from the firewall. In that case, it's pretty important to actually let it run/complete even if the API is slow.

If it was an API sitting on a random guy's computer at their home, I'd be more inclined to agree. But we are talking about one of the most reliable and fastest APIs I've ever dealt with personally (I've dealt with a lot).

Trying to work around underlying network connectivity issues isn't the best way to address it. The network connectivity issues need to be addressed (at least first). If there are still issues after that's resolved, then maybe move on to working around whatever the next issue is. But really, the network connectivity stuff needs to be fixed first and foremost. There are literally many hundreds of sites using this addon and no one else is reporting any API issues, so I'd bet any amount of money someone would be willing to bet that it's an issue specific to your server's network.

That being said, you could manually do it if you want, but I still think it's a bad idea. If you go into the DigitalPoint\Cloudflare\Traits\XF.php file and add the option in the first line of the request() method like so, it would do it:

PHP:

    protected function request($method, $url, $options)
    {
        $options['timeout'] = 5;
        // ... rest of the existing method is unchanged

Get to the bottom of the network connectivity issues (oh ya, I said that already).

digitalpoint

Well-known member

> Yeah yeah, already trying to find solution for network connectivity issues.
>
> But really for purging guest caching we could have smaller timeout. Don't need to alter it for other things.

Even if you set it to 5 seconds, do you really want the post request to hang for 5 seconds? 5 seconds feels like an eternity for a post to go through for an end user. I'd probably just disable guest page caching until the network connectivity thing is sorted out. Anything more than a fraction of a second is going to cause UX problems for existing users making posts.

> In a normal scenario an API call shouldn't take longer than 0.1 seconds.

So I would set it to 0.2 seconds, or even better to have it configurable.

Better to have guest caching and see 100 connectivity errors in the log than don't have guest caching at all.

Even if/when sort this network issue, there will be new one at some point in the future, which would cause forum to malfunction for no reason.

digitalpoint

Well-known member

> So I would set it to 0.2 seconds, or even better to have it configurable.
>
> Better to have guest caching and see 100 connectivity errors in the log than don't have guest caching at all.
>
> Even if/when sort this network issue, there will be new one at some point in the future, which would cause forum to malfunction for no reason.

I don’t disagree, but lowering the timeout is the easy solution, not the best one. A better solution involves completely decoupling the purge cache API call from the HTTP request triggering it.

Do that and it doesn’t matter what the timeout is set to because the user doesn’t wait for it in any scenario. And even if the API is functioning normally, the user’s post is done faster for them because they aren’t waiting even 0.1s for the cache to be purged on the backend.

I’d rather do it the better/right way than the easy way, so…
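One way to picture that decoupling is a queue that collects URLs during the request and purges them later, outside the path the user waits on. This is only a sketch under assumptions: `DeferredPurgeQueue` and the `$purge` callable are hypothetical, and nothing here reflects how the add-on actually ends up implementing it.

```php
<?php
// Hypothetical queue: collect URLs during the request, purge them afterwards.
final class DeferredPurgeQueue
{
    /** @var array<string, true> URLs waiting to be purged, keyed to de-duplicate */
    private array $urls = [];

    public function add(string $url): void
    {
        $this->urls[$url] = true;
    }

    // Hand all queued URLs to $purge (a stand-in for the purge API call).
    // Failures are logged rather than surfaced to whoever triggered the request.
    public function flush(callable $purge): int
    {
        $urls = array_keys($this->urls);
        $this->urls = [];
        if ($urls === []) {
            return 0;
        }
        try {
            $purge($urls);
        } catch (\Throwable $e) {
            error_log('Deferred purge failed: ' . $e->getMessage());
        }
        return count($urls);
    }
}

// During the request: saving a post queues the affected URLs (placeholders here).
$queue = new DeferredPurgeQueue();
$queue->add('https://example.com/threads/1/');
$queue->add('https://example.com/threads/1/'); // duplicate, collapsed
$queue->add('https://example.com/forums/2/');

// Later: flush with whatever actually performs the purge.
$purged = [];
$count = $queue->flush(function (array $urls) use (&$purged): void {
    $purged = $urls;
});
```

In a real request the flush would need to run after the response has gone out, for example from a background job or from a shutdown handler registered with `register_shutdown_function()`; which mechanism fits is an open choice here. Either way, the entity-level hook stays where it is and only the timing of the API call changes.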
