Bad news: We should be prepared for a huge wave of a new category of AI-powered scraping bots and spambots, possibly in the very near future.

Background: Two weeks ago an open source project named OpenClaw started to get a lot of hype within the AI nerd scene. It was first released in November last year, got renamed a couple of times and finally, at the beginning of February 2026, got a lot of traction all of a sudden. It is basically a kind of orchestrator that can work with many different AI models and integrates them into our digital life by automating a lot of tasks through AI agents that are powered by add-ons, so-called skills. The idea behind it is to act as an autonomous digital assistant - very much as if you had a human personal assistant.

The difference from AI as it was commonly used up until now is (somewhat oversimplified) fourfold:

1. It makes not only AI but also automated AI workflows easy to use.

2. It can run on basically any device, locally as well as on a VPS or similar, as OpenClaw itself is just an orchestrator taking up just a couple of MB of space. With a basic Mac mini you can even run the whole model locally (e.g. via Ollama).

3. Unlike the usual use of ChatGPT and the like, it has a memory of what it did and what its basic character is, and this way it is able to work in iterations and to improve itself.

4. It is able to communicate actively via things like email, Telegram, WhatsApp and even voice, for both input and output.

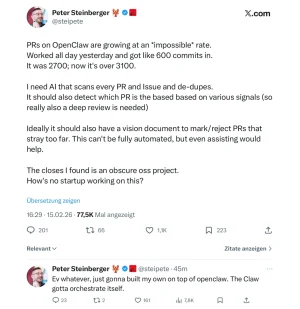

There is way more to it, but these are the basic relevant elements. Within the last two weeks the thing got improved massively - thousands of commits to the repository and thousands of new skills added to the list of options. It is the fastest growing and fastest developing AI project of all time.

People quickly claimed to run their whole company on it and to replace (or not need) loads of staff because of that. Obviously nobody knows whether all that is true, and there's a lot of hype and made-up claims, but it is clear that this thing already seems to be a milestone for the use of AI. The author (a guy from Austria who coded the thing alone with AI) announced yesterday evening that he will work for OpenAI in future and that OpenClaw will be transferred into a foundation (and shall stay open source).

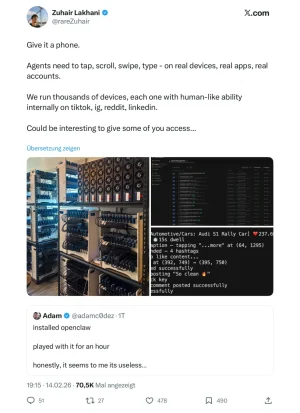

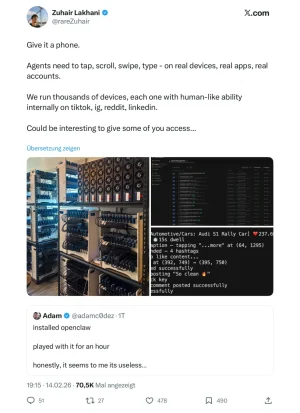

The bad news for us is: The agent model of OpenClaw depends massively on the ability to access all kinds of information on the web. It is able to use all possible ways of authentication, can act on behalf of its "owner" and is even able to create and use accounts on its own, autonomously. It has the need and the ability to scrape information from the web and to overcome all sorts of bot detection. As this is needed for its purpose, it is constantly being developed further.

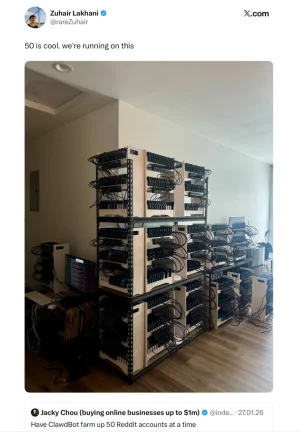

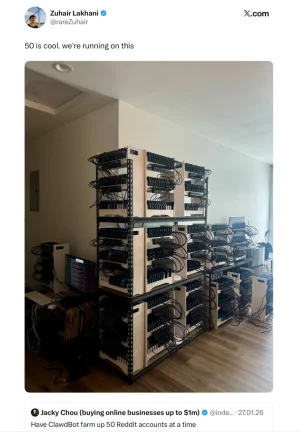

This alone would be bad enough - but it gets worse: As was to be expected, shady marketing people quickly jumped on the train to (mis)use the tool for their practices. They claim, for example, to have set up farms that create and use Reddit accounts automatically and post their messages - with a claimed rejection rate of just 0.5%.

In the meantime, these abilities can be bought. And - as was to be expected - there's already a skill to use networks of residential proxies.

While the whole thing is still in its early stages, the speed of development and adoption seems crazy. So it is probably only a question of time until this tool hits forums on a massive scale as well - used by normal users as well as by spammers and scrapers (and those will be first). Plus one can assume that this is just the beginning, and that more and more tools of this category will be developed in the near future, further lowering the skills needed to use something like that. In fact, yesterday an AI company announced that it has integrated OpenClaw for its customers to the point that it simply runs in a browser tab - no setup by the user needed at all.

So we had better prepare for the wave that will probably hit us soon.