Crazy amount of guests

- Thread starter: Levina

- Tags: bot swarms guests

chillibear

Well-known member

> poison the content. Send identified scrapers into a huge mess of false, half-true or completely made-up information and let them scrape it to poison the AI models

There have been a few Markov chain type babblers that generate realistic junk pages to tarpit scrapers and so forth. You could investigate them and see if any are more than idle experiments. I recall reading about a few some time back.

lazy llama

Well-known member

Funnily enough I'd just been looking at Markov chain generators.

e.g. this PHP one - https://github.com/hay/markov - demo at https://projects.haykranen.nl/markov/demo/

So a starter might be a tarpit add-on which picks a few random thread titles/posts from the forum as the input text, and simulates pages of thread titles and posts based on that text. As well as wasting the rogue scrapers' time, it would poison their input.
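A minimal sketch of that idea in Python rather than PHP, assuming the input text is just a handful of forum posts joined into one string. The names `build_chain` and `babble` and the chain order of 2 are illustrative choices, not taken from the linked project.

```python
import random
from collections import defaultdict


def build_chain(text, order=2):
    """Map every run of `order` words to the words seen right after it."""
    words = text.split()
    chain = defaultdict(list)
    for i in range(len(words) - order):
        key = tuple(words[i:i + order])
        chain[key].append(words[i + order])
    return chain


def babble(chain, length=30, seed=None):
    """Walk the chain to produce `length` words of plausible-looking junk."""
    if not chain:
        return ""
    rng = random.Random(seed)
    states = list(chain.keys())
    state = rng.choice(states)
    out = list(state)
    while len(out) < length:
        followers = chain.get(state)
        if not followers:
            # Dead end (state only seen at the very end of the input):
            # restart from a random state so the page keeps growing.
            state = rng.choice(states)
            out.extend(state)
            continue
        nxt = rng.choice(followers)
        out.append(nxt)
        state = state[1:] + (nxt,)
    return " ".join(out[:length])
```

Feeding it a few real thread titles and posts gives output that reuses the forum's own vocabulary, which is what would make the fake pages look like the real ones to a scraper.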

Put it behind some no-follow links, hide it from humans and use robots.txt to keep legit search scrapers out of the tarpit.
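To sanity-check the robots.txt part, something like the following could work. It uses Python's standard `urllib.robotparser`; the `/tarpit/` path and the `forum.example` host are made up for illustration, since no location is named here.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rule: keep every well-behaved crawler out of the tarpit.
ROBOTS_TXT = """\
User-agent: *
Disallow: /tarpit/
"""


def allowed(robots_txt, user_agent, url):
    """Return True if a crawler that honours robots.txt may fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)
```

This only tells you what a compliant crawler would do. The rogue scrapers ignore robots.txt, which is exactly why they would end up in the tarpit and the legit ones would not.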

ES Dev Team

Well-known member

I got hit with a mega-wave last night. They kept consuming all my TCP/IP ports (my current limit is 2048, double the Linux default). I kept the site online for others only by restarting Apache repeatedly. The blast lasted 15 minutes and it totally cut through my protection.

I'm not sure if I should tune Linux's max TCP/IP ports and Apache's max request workers into the stratosphere or leave them the way they are. I do have lots of available CPU/RAM.

Something about how the bots work on the other end has them holding the TCP/IP port open for much longer than usual. It resembles a Slowloris attack. I already have mitigations against that, but there are too many simultaneous IPs and they are too varied.
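A rough way to see why per-IP mitigations do not bite in this situation is to summarise a snapshot of open connections. The sketch below assumes you already have `(client_ip, seconds_open)` pairs from somewhere (how you collect them is up to you), and the 30 second threshold is an arbitrary illustration.

```python
from collections import Counter


def summarize(connections, slow_after=30.0):
    """Summarise a snapshot of (client_ip, seconds_open) pairs."""
    conns = list(connections)
    per_ip = Counter(ip for ip, _ in conns)
    slow = [c for c in conns if c[1] >= slow_after]
    return {
        "total": len(conns),
        "unique_ips": len(per_ip),
        # Highest number of simultaneous connections held by one IP.
        "max_per_ip": max(per_ip.values(), default=0),
        # Connections held open longer than the threshold.
        "slow": len(slow),
    }
```

If `slow` is large while `max_per_ip` stays at 1 or 2, a per-IP connection limit never triggers even though the ports are all taken, which matches the pattern described above.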

I wouldn't be surprised if they cut right through Cloudflare. I think the pool of residential proxies is enormous and rotates too often for something like Cloudflare or some other algorithm to catch them.

Anubis is a good idea, but it may make the stuck TCP/IP ports worse. Same with a Markov generator, etc.

ES Dev Team

Well-known member

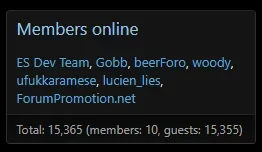

We have an enormous number of guests now (7.7k) but only 50-100 TCP/IP ports in use.

Last night I saw up to 17k guests and 1800+ TCP/IP ports in use ( apache starts choking at 1000/sec with )

So yeah, last night's rush was a different kind of traffic.

ES Dev Team

Well-known member

Looks like it's mostly died down.

Found a recent article about one of these residential proxy botnets and how Google took it down:

https://cloud.google.com/blog/topic.../disrupting-largest-residential-proxy-network

> I have a small forum, usually a few hundred guests at most. Over the last few days the number of guests has been unusually high, around 3000 and climbing. We now have over 4800 guests. That's not normal. Many "Viewing unknown page" or "Latest content", with a warning sign. IP addresses are from all corners of the world. Many from South America, Brazil mostly. Also saw quite a number from Vietnam of all places, but everywhere else too.
>
> We're on Cloud and I don't know what to do. Could we be under attack? We have been in the past, a few times I think. For reasons I cannot possibly fathom; we're a small photography forum for crying out loud.

VN has been quite a bugger lately lol.

I use Cloudflare, and I zone-level block (for free) 4 countries and LLM bots. That means traffic from the blocked countries is stopped at Cloudflare's edge. They never see your server.

Unless you are marketing to those countries, you will not miss them. VN has been bad lately, so I put a zone-level block on them.

If you are using digitalpoint's Cloudflare addon (here at XF), it interfaces wonderfully with Cloudflare.

Configure and manage your Cloudflare account from within XenForo.

Now, I do block LLM bots as they act as non-attributing scrapers.

But all told, 4 countries I do no business with, and LLM bots I do not give my web data to, amount to a significant amount of "guest" traffic that no longer loads my server. I did do a comparison before and after, as my site was new, and it works as desired with no detectable unexpected blocks or issues.

And now my guest count is more real and a lot less fake.
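The blocking logic described above boils down to two checks: country and user agent. Here is a sketch of the same decision in Python, assuming requests arrive with Cloudflare's `CF-IPCountry` header and that `headers` is a plain dict with exactly those key names. Only VN is named in this thread, so the country set is a placeholder, and the bot tokens are examples of publicly documented AI crawler names rather than anyone's actual list.

```python
# Placeholder: the thread names only VN; the other three countries are not stated.
BLOCKED_COUNTRIES = {"VN"}

# Example AI crawler user-agent tokens (illustrative, not exhaustive).
LLM_BOT_TOKENS = ("gptbot", "claudebot", "ccbot", "bytespider")


def should_block(headers):
    """Return True if the request comes from a blocked country or an LLM bot."""
    country = headers.get("CF-IPCountry", "").upper()
    agent = headers.get("User-Agent", "").lower()
    if country in BLOCKED_COUNTRIES:
        return True
    return any(token in agent for token in LLM_BOT_TOKENS)
```

Doing this at Cloudflare is the better place for it, since the request then never reaches the server at all. A check like this on the origin only illustrates the rule, and it does nothing against bots that send a normal browser user agent from an unblocked country.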

ES Dev Team

Well-known member

smallwheels

Well-known member

ES Dev Team

Well-known member

That's what i'm experiencing too. But it's slower to tail off.

It might be because my 'members online timeout' is set higher than theirs.

Wonder if other cloudflare users also experience this periodic blast.

> That's what i'm experiencing too. But it's slower to tail off.
>
> It might be because my 'members online timeout' is set higher than theirs.
>
> Wonder if other cloudflare users also experience this periodic blast.

I have. But mostly mitigated by Cloudflare directly.

I do look at the pass/fails, and blocking certain rogue countries has been very effective in combatting these particularly useless bots.

smallwheels

Well-known member

> I have. But mostly mitigated by cloudflare directly.

I'd say you have an unknown-unknown problem: you see what you have blocked, but you don't see the bots within the traffic that you haven't blocked. Four blocked countries will only block part of the bot traffic and, as has been said numerous times in this thread, Cloudflare's bot detection only detects a very small fraction of the residential proxies.

> I do look at the pass/fails, and blocking certain rogue countries has been very effective in combatting these particularly useless bots.

Well, those that come from these countries, but not those that come from other countries. Geoblocking is indeed very effective, and for the moment probably the most effective thing one can do if it does not hurt genuine traffic. However: locking the front door while leaving the back door and the windows open does not create safety. So you feel safe (falsely) because something is blocked, while in fact your doors are still wide open.

smallwheels

Well-known member

> Just to laugh it off, I look at my 404's to try to see why,..
>
> And so many of my 404 requests have all kinds of wordpress directories and files in the request string looking for hits on wp files.
>
> I am on a cloud at XF. There is no wordpress on my site.

This is a different kind of issue. This kind of scanning for WordPress usually comes via big cloud providers; in my forum it was for the most part coming from Microsoft Azure. These scans do no harm, but they eat your resources and fill the 404 logs with garbage, which makes proper 404 management basically impossible. You could get rid of them by filtering out these requests via some regular expressions in .htaccess (which you can't, as you are on XF Cloud) or by blocking the ASNs where those requests come from (which I think is possible via Cloudflare). This could bring those things down considerably.

However: this has absolutely nothing to do with the topic of the thread and with what we are discussing here, as it is a whole different issue.
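For anyone who cannot touch .htaccess but still wants a readable 404 log, the same regular-expression idea can be applied after the fact. A sketch in Python, assuming you can export the requested paths from the log; the pattern covers a few common WordPress probe paths and is an illustration, not a complete list.

```python
import re

# Hypothetical pattern for typical WordPress probe paths, at any directory depth.
WP_PROBE = re.compile(
    r"^/(?:[^?#]*/)?"
    r"(?:wp-(?:admin|content|includes)(?:/|$)|(?:wp-login|wp-config|xmlrpc)\.php)",
    re.IGNORECASE,
)


def split_404s(paths):
    """Separate WordPress probe requests from the 404s worth looking at."""
    probes, genuine = [], []
    for path in paths:
        (probes if WP_PROBE.match(path) else genuine).append(path)
    return probes, genuine
```

The `genuine` list is then what proper 404 management would work from. The same expression could be adapted for a server-side rule where you do control the config.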

> You see what you have blocked - but you don't see the bots within the traffic that you haven't blocked.

Of course. That's why I used the word mitigated, and not the word eliminated. In my attempts to reduce the number of useless bots, which are proving successful, I only focus on the worst offenders. I am not isolating every single bot I don't want. It would be futile.

> but you don't see the bots within the traffic that you haven't blocked.

And those bots get captured in a 404 on my XF instance, and I see them in my logs. But again, they get blocked locally on the server through XF. (The only unblocked indexers I reject are the likes of ByteDance, etc., using robots.txt. All others flow through.)

> Four blocked countries will only block parts of the bot traffic and - as has been said numerous times in this thread - Cloudflares bot detection does only detect a very small fraction of the resident proxies.

I agree. Like I said, 4 blocked countries represent a significant portion of requests, from countries that I do not market to anyway. It is mitigation. My formerly useless visitor count has now been trimmed substantially and is more real, and I do not mind it being a more representative number (which is the OP's topic point).

smallwheels

Well-known member

> > but you don't see the bots within the traffic that you haven't blocked.
>
> And those bots get captured in a 404 on my XF instance,

Absolutely not. 404s are requests for something that isn't there. What you see as 404s are denied requests for content that only logged-in members would see, e.g. full-size images if you have set the permissions that way. Personally I think these should rather be a 403, and I even opened a bug for that, but was told that 404 is totally fine and the better way.

However: we have been talking about residential proxies over the last pages and how to deal with them. They will pass unnoticed as long as they target content that is publicly available.

> and I see them in my logs.

See: maybe. Identify: hard. Deal with them: even harder. And very time-consuming.

> But again, they get blocked locally on the server through XF. (The only unblocked indexers I reject are the likes of ByteDance, etc. using robots.txt. All others flow through. )

They only get blocked if your content is behind the registration wall. So the only "safe" way against the AI scrapers ATM is to put everything behind the login, which will then affect Google ranking and annoy legitimate visitors (but bring a bunch of additional registrations in short order). You cannot, however, be sure that modern AI has not already registered in your forum.

ES Dev Team

Well-known member

> And so many of my 404 requests have all kinds of wordpress directories and files in the request string looking for hits on wp files.
>
> I am on a cloud at XF. There is no wordpress on my site.

I like to refer to this as a free vulnerability scan, provided by independent and state sponsored hackers.

They always go after WordPress first because that's something like 60% of sites running a known CMS.

I have seen scans such as these try to pick up database/file backups in the root folder, which i see most sysadmins unfortunately leave hanging about.

So these are actually nastier than you think and you should tune your protection to mitigate against this, because if you don't, and you slip by making ANYTHING remotely security-sensitive be web available, you will have a very bad time.
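One cheap check along those lines is to look for leftover dumps and archives under the web root before a scanner finds them. A minimal sketch, assuming you have filesystem access to the web root (so not applicable on a hosted cloud plan); the suffix list is a guess at common backup names and should be extended to taste.

```python
from pathlib import Path

# Hypothetical list of suffixes that often indicate database dumps or archives.
RISKY_SUFFIXES = (".sql", ".sql.gz", ".zip", ".tar", ".tar.gz", ".tgz", ".bak", ".old")


def find_exposed_backups(web_root):
    """List files under `web_root` whose names look like backups or dumps."""
    root = Path(web_root)
    hits = []
    for path in root.rglob("*"):
        if path.is_file() and path.name.lower().endswith(RISKY_SUFFIXES):
            hits.append(path.relative_to(root).as_posix())
    return sorted(hits)
```

Anything this turns up is something a scan like the ones in those 404 logs could eventually guess the name of, so it is better moved outside the web root entirely.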
