Crazy amount of guests

- Thread starter: Levina

- Tags: bot swarms guests

I saw this site climb to 17k bots rapidly.. now mine's at 20k

What i'm seeing reminds me of that anubis breakthrough - so distributed that rate limiters won't work.

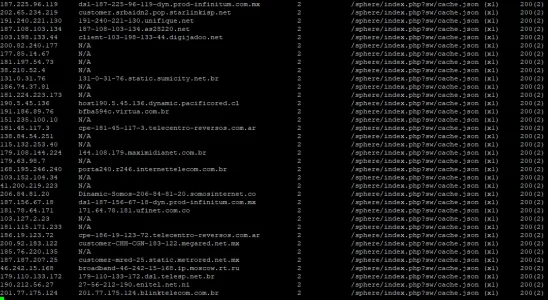

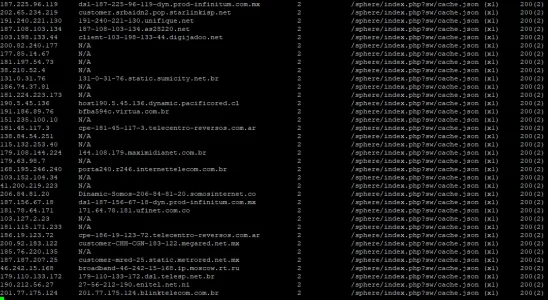

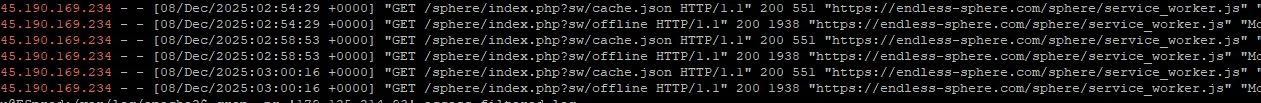

The IPs are generally doing this or hitting What's New:

This is indefensible with fail2ban, isn't it? I might have to move to Cloudflare for a while until I finish my system.

The one difference between XenForo.com and my site was that the blast was slightly less intense on XenForo.com and lasted half as long.
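To illustrate why a swarm this distributed slips past per-IP rate limiting, here is a minimal sketch that compares per-IP counts with counts aggregated per network prefix. The sample addresses, the limit of 10 and the /24 grouping are assumptions for illustration only; a real swarm may be spread over many prefixes and ASNs, in which case even this grouping will not show a single hot spot.

```python
from collections import Counter
import ipaddress


def per_ip_offenders(ips, limit):
    """Return the IPs whose request count exceeds `limit` (a per-IP limiter's view)."""
    counts = Counter(ips)
    return {ip for ip, n in counts.items() if n > limit}


def per_prefix_counts(ips, prefix_len=24):
    """Count requests per IPv4 network of the given prefix length."""
    counts = Counter()
    for ip in ips:
        net = ipaddress.ip_network(f"{ip}/{prefix_len}", strict=False)
        counts[str(net)] += 1
    return counts


# Hypothetical sample: 200 addresses in one /24, three requests each.
sample = [f"203.0.113.{host}" for host in range(1, 201) for _ in range(3)]

print(per_ip_offenders(sample, limit=10))         # set(): no single IP stands out
print(per_prefix_counts(sample).most_common(1))   # [('203.0.113.0/24', 600)]
```

Each address stays far below the per-IP limit, yet the network as a whole sends 600 requests, which is the kind of pattern a fail2ban jail keyed on single IPs would miss.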

> And just as suddenly, the number of crawlers has dropped overnight.

Your training is complete, young Padawan. Go hence from this place and create AI slop for the masses...

> And just as suddenly, the number of crawlers has dropped overnight. It has under 500 guests right now, compared to 30K+ the last few days.

Mine spiked and went back to normal as well, but with much lower numbers than yours. Maybe they got what they needed and left! Or thought "nothing to see here - move along".

Just weird.

I ended up banning China Mobile and, I think, some other ISP.

My numbers look great due to this racist policy.

But the one major source of this crap from China that I haven't shut off is now going haywire.

It's real browsers in a clickfarm, thus they show up in Google Analytics.

Normally the China count would be 1 user since our site is quite USA-centric.

I'm quite sure I'm going to ban the other China ISP as the amount of bandwidth is still enormous.

This is a beyond unacceptable abuse of our resources. The bandwidth consumed is 3 TB/month and growing. Before the AI-pocalypse, this number was under 500 GB/month.

> I'm quite sure I'm going to ban the other China ISP as the amount of bandwidth is still enormous.

Why not put China under a challenge instead of dealing with their ASNs? 99% of those bots won't solve the challenge and will move on to another domain.

> Why not put China under a challenge instead of dealing with their ASNs? 99% of those bots won't solve the challenge and will move on to another domain.

You seem to have missed that @ES Dev Team does not use Cloudflare and also does not want to. Cloudflare is not a given - many people do not want to use it, e.g. for privacy reasons.

> I might have to move to Cloudflare for a while until I finish my system

What I thought.

Doesn't hit very hard when someone who doesn't care about privacy or better uptime is mocking me

> What I thought.

A lot of companies intentionally do not use Cloudflare (or similar providers) for varying reasons: legal, privacy, data-mining concerns, company decision, network stack 'issues', existing infrastructure that already handles the needs, or even financial burden. There is nothing wrong with not using Cloudflare. I don't understand the tin-foil statement gif.

As robust as Cloudflare is, they do add another 'cog to the wheel', which means another potential point of failure. The same applies to 'cloud hosting' such as AWS, Azure, GCloud, etc... you're now fully reliant on their uptime/connectivity guarantees.

Self-hosting stuff, and learning the ins and outs of your software and hardware limitations and potential, is huge in this day and age. A lot of companies are moving their entire stack 'to the cloud' without having optimized their websites or web services. That becomes costly, quick. Cloudflare is no different in that respect when you're a large company/corporation.

As for me, I finished implementing Anubis this morning on just about all websites that I manage (not all of these are Xenforo or forum related), and I've literally shut the door on all of these abusive LLM/AI Scrapers. The only virtual machines on my side of things are now just the load balancers/ingest servers and reverse proxy servers delegating, checking and terminating requests at Anubis. Nothing bot-like is making it to the actual websites, it is actual users and friendly search indexers hitting the backend now.

That's awesome to hear. What do your average guest counts look like before/after?

Keep us up to date, haven't heard of anyone else here running Anubis.

> That's awesome to hear. What do your average guest counts look like before/after?
>
> Keep us up to date, haven't heard of anyone else here running Anubis.

The one particular site in question does not use XenForo; it is a game combat parser website with an easy two to three million subpages (probably more, I've never really counted the true totals) of raw numerical data and charts spanning a bit more than a decade now. In its heyday, the site would bring in a couple thousand users per day. Fast forward to 2024 and 2025: those users have all gone their own ways in life, and the site began taking in thousands of random URL connections per minute from abusive AI bots at random intervals of the day and week (from the ASNs discussed in previous posts), raking the server over the coals and, at a couple of points, exhausting available bandwidth.

Now, with Anubis in front of it, the bots are culled right at the door. Nothing AI-scraper-like is even hitting the actual site. Prior methods of ASN and mass CIDR blocks were indeed filtering out a lot of it, but not all of it. The Anubis firewall integration absolutely stopped all AI-bot-like access. I guess they don't want to pay for maximum-throttle CPU time on their piddly virtual machines and such.

The other sites are small-time websites, blog sites, and so forth. Those sites were a constant target of AI-scraping abuse AND exploitation with varying levels of probing attacks. Anubis shut the door on that too. As I write this, I am seeing about two to five requests per second.

I have yet to hook up Anubis to something like Prometheus to get an actual numerical value/trend at this time... but at a glance, just from looking at server logs alone, I'm only seeing legitimate client requests now hitting the backend servers.

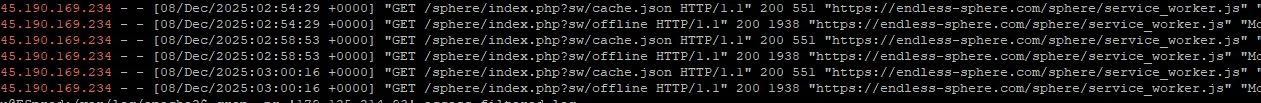

In other news, those Brazilian/South American clients are now SYN flooding a couple servers. Which is rather amusing.
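Until metrics are hooked up to something like Prometheus, a rough requests-per-second figure can be pulled straight from the access logs mentioned above. This is only a sketch: it assumes the common/combined log format with a bracketed timestamp, and the sample lines are made up for illustration.

```python
from collections import Counter
from datetime import datetime
import re

# Matches the bracketed timestamp of the common/combined log format.
TIMESTAMP = re.compile(r"\[(\d{2}/\w{3}/\d{4}:\d{2}:\d{2}:\d{2} [+-]\d{4})\]")


def requests_per_second(lines):
    """Return a Counter mapping each second (as a datetime) to its request count."""
    per_second = Counter()
    for line in lines:
        match = TIMESTAMP.search(line)
        if match:
            stamp = datetime.strptime(match.group(1), "%d/%b/%Y:%H:%M:%S %z")
            per_second[stamp] += 1
    return per_second


# Hypothetical log lines, for illustration only.
sample_log = [
    '198.51.100.7 - - [25/Jan/2026:10:00:01 +0000] "GET / HTTP/1.1" 200 512',
    '198.51.100.8 - - [25/Jan/2026:10:00:01 +0000] "GET /a HTTP/1.1" 200 128',
    '198.51.100.9 - - [25/Jan/2026:10:00:02 +0000] "GET /b HTTP/1.1" 200 256',
]

counts = requests_per_second(sample_log)
print(max(counts.values()))  # 2: the busiest second in the sample
print(sum(counts.values()))  # 3: total requests parsed
```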

> As I write this, I am seeing about two to five requests per second.

Sounds pretty good! Do you have any idea of the number of false positives, or of how much the check inconveniences normal users?

> Sounds pretty good! Do you have any idea of the number of false positives, or of how much the check inconveniences normal users?

You can configure it to do just about anything on the security checks: who will and will not be checked, or how stringent the check will be against clients on X or Y CIDR blocks. The configuration file is evaluated top-down, FIFO style. If a rule declaration is not satisfied, it goes down the list to the next rule.

For example, I can do a check against all incoming traffic, while excluding the VPN traffic. This check is hands-off for the end user: it doesn't use a captcha or similar methods, but something known as 'proof of work'. You can read more on PoW here: https://en.wikipedia.org/wiki/Proof_of_work - essentially, in this WAF, the browser is "proving that it is real" to the server by calculating SHA-256 hashes until one meets the challenge. Yes, that means it will use a good chunk of CPU time. As it stands right now, probing machines, drones/hijacked servers, and AI/LLM scrapers have not been able to bypass or work around this validation check. And if they do, you can always up the difficulty/challenge level.

YAML:

```yaml
bots:
  - import: (data)/crawlers/_allow-good.yaml
  - name: public-paths
    action: ALLOW
    path_regex: ^/(?:\.well-known/.*|favicon\.ico|robots\.txt)$
  - name: whitelist
    action: ALLOW
    remote_addresses:
      - 10.0.0.0/24
  - name: challenge-all
    action: CHALLENGE
    path_regex: .*
    challenge:
      algorithm: fast
      difficulty: 4

store:
  backend: valkey
  parameters:
    url: "redis://10.0.0.250:6379/42"
```

A challenge level of 4 is fairly 'easy' for nearly all modern devices, including cell phones, tablets, laptops and desktop machines, taking mere seconds to solve. Once you push the level to 5 or more, it will take a little bit longer for the PoW sequence to complete. A level of 6 is where it made my 32-core machine actually "do work", and it took upwards of 30 seconds to solve. It apparently goes to 16, but I've not really tested a value that high; the developer docs say anything in that range is "impossible to solve".
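For anyone curious why each extra difficulty level hurts so much, here is a generic proof-of-work sketch. It is not Anubis's code: it assumes the difficulty is the number of leading zero hex digits required in a SHA-256 hash of the challenge string plus a nonce, and the challenge string used here is made up.

```python
import hashlib
from itertools import count


def verify(challenge: str, nonce: int, difficulty: int) -> bool:
    """True if SHA-256(challenge + nonce) starts with `difficulty` zero hex digits."""
    digest = hashlib.sha256(f"{challenge}{nonce}".encode()).hexdigest()
    return digest.startswith("0" * difficulty)


def solve(challenge: str, difficulty: int) -> int:
    """Brute-force the smallest nonce that satisfies the difficulty (client side)."""
    for nonce in count():
        if verify(challenge, nonce, difficulty):
            return nonce


def expected_hashes(difficulty: int) -> int:
    """Average attempts needed: each extra hex digit multiplies the work by 16."""
    return 16 ** difficulty


# The client grinds through nonces; the server only needs one hash to verify.
nonce = solve("example-challenge", difficulty=3)
print(verify("example-challenge", nonce, 3))  # True

for level in (4, 5, 6):
    print(level, expected_hashes(level))
# 4 65536
# 5 1048576
# 6 16777216
```

Under that assumption, going from level 4 to level 6 multiplies the expected work by 256, which fits the jump from "mere seconds" to a noticeable wait.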

The `path_regex` is where the powerhouse configuration is at. For example, on a XenForo forum, you can make it activate a check when the client goes to view a thread or forum rather than on everything that is XenForo. It's worth noting that it does set a cookie on the client so that repeated checks do not happen - that might collide with GDPR 'stuff' as the cookie is forced onto the client.

There is also a default `botPolicies.yaml` file in the data/ folder, so you can get a clearer idea of what's happening under the hood. I've yet to really deep dive and make Anubis more paranoid against known bad actor IP ranges.

As for user sentiment: the only complaint that I have encountered thus far is "the check took about 40 seconds to complete on my mobile, but was done in 20ish seconds on my laptop". However, that was the same day that I implemented it, and I was using an elevated challenge difficulty level of 6. A level of 4 seems to be about the sweet spot. No complaints since then.

I did have a small bout of quirky feedback though... one user wanted to know where the "cute anime girl was from". That was when I changed it to more generic icons.
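The top-down rule matching and the `path_regex` scoping described above can be sketched in a few lines. This is an illustration of first-match evaluation, not Anubis's implementation: the `Rule` class, the `decide` function, the fall-through default and the XenForo-style `/threads/` and `/forums/` paths are all assumptions made for the example.

```python
import ipaddress
import re
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Rule:
    name: str
    action: str
    path_regex: Optional[str] = None
    remote_addresses: List[str] = field(default_factory=list)

    def matches(self, ip: str, path: str) -> bool:
        """A rule matches only if every condition it declares is satisfied."""
        if self.path_regex is not None and not re.search(self.path_regex, path):
            return False
        if self.remote_addresses:
            addr = ipaddress.ip_address(ip)
            nets = (ipaddress.ip_network(n) for n in self.remote_addresses)
            if not any(addr in net for net in nets):
                return False
        return True


RULES = [
    Rule("public-paths", "ALLOW",
         path_regex=r"^/(?:\.well-known/.*|favicon\.ico|robots\.txt)$"),
    Rule("whitelist", "ALLOW", remote_addresses=["10.0.0.0/24"]),
    # Hypothetical XenForo-style paths; adjust to the forum's real URL layout.
    Rule("challenge-threads", "CHALLENGE", path_regex=r"^/(?:threads|forums)/"),
]


def decide(ip: str, path: str, default: str = "ALLOW") -> str:
    """Walk the rules top-down and return the action of the first match."""
    for rule in RULES:
        if rule.matches(ip, path):
            return rule.action
    return default


print(decide("203.0.113.9", "/robots.txt"))          # ALLOW
print(decide("10.0.0.5", "/threads/example.1/"))     # ALLOW
print(decide("203.0.113.9", "/threads/example.1/"))  # CHALLENGE
print(decide("203.0.113.9", "/"))                    # ALLOW
```

Because the first match wins, the order of the list matters: the allow rules have to sit above the challenge rule, just as in the YAML.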

Just had a bot attack from ByteDance that consumed all the TCP/IP ports.

They have an enormous network and were just repeatedly slamming the share button or refreshing the home page.

Fixed with:

Code:

```apache
# bytedance bots - stealth 01/25/2026 - DS
Deny From 45.78.192.0/18
```
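To see how much address space that one rule covers, the range can be checked with Python's standard `ipaddress` module. A minimal sketch; the `BLOCKED` list and `is_blocked` helper are illustrative names, and the two test addresses are arbitrary.

```python
import ipaddress

# The range from the rule above; further networks could be appended here.
BLOCKED = [ipaddress.ip_network("45.78.192.0/18")]


def is_blocked(ip: str) -> bool:
    """True if the address falls inside any blocked network."""
    addr = ipaddress.ip_address(ip)
    return any(addr in net for net in BLOCKED)


print(BLOCKED[0].num_addresses)        # 16384
print(BLOCKED[0][0], BLOCKED[0][-1])   # 45.78.192.0 45.78.255.255
print(is_blocked("45.78.200.10"))      # True
print(is_blocked("45.78.191.255"))     # False
```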
