I guess you could enable the I'm Under Attack mode in Cloudflare and force a captcha on every page view? Just thinking here.

For members with similar issues, is there another option to deal with this when they are not aware of this addon and using cloudflare? I imagine a less technical site owner would just not be able to figure out the issue nor the solution.

Crazy amount of guests

- Thread starter: Levina

- Tags: bot swarms guests

I got over 1000 visitors online at the same time. Most of them are from USA and Singapore. I imagine mostly bots collecting info for AI training. My site is small, I used to see a lot less traffic than this.

Just thinking here.

For members with similar issues, is there another option to deal with this when they are not aware of this addon and using cloudflare?

Sure. There are countless threads and posts about it. Even in this thread, @ES Dev Team mentioned his approach with fail2ban, just a couple of posts above your question.

I imagine a less technical site owner would just not be able to figure out the issue nor the solution.

It may sound harsh, but: anyone who has no technical clue how it works should not be running a public service on the internet, or should pay someone to deal with it (or learn very fast themselves). As with everything that needs knowledge. Pretty simple and straightforward. Other than that, this forum is full of problem reports and solution approaches regarding bot traffic, loads of them very recent, dating from the last ~six months. People just seem to be unable or unwilling to read; it is hard to explain why else a new thread with the very same topic, "thousands of bots on my site", is opened every couple of days. Obviously people are too lazy to use the forum search to dig for solutions to their problem, or simply to scroll through the last 2-3 pages of existing topics before opening a new thread. Somewhat ironic: this is a common issue in forums that mods and admins are somewhat annoyed about, and yet in a forum that is almost exclusively used by forum admins, they themselves show the very same behavior.

I got over 1000 visitors online at the same time. Most of them are from USA and Singapore. I imagine mostly bots collecting info for AI training. My site is small, I used to see a lot less traffic than this.

Save a record for this.

Clearly bots stealing your data.

There will be a data stealing lawsuit someday.

Read this today..

https://www.techspot.com/news/110432-alibaba-bytedance-moving-ai-training-offshore-bypass-china.html

Alibaba and ByteDance are among the companies routing training jobs for their latest large language models to data centers in countries such as Singapore and Malaysia, according to The Financial Times, citing people with direct knowledge of the deployments. These sources say there has been a steady shift toward offshore clusters since April, when Washington moved to tighten controls on Nvidia's H20 accelerator, a chip designed specifically for the Chinese market.

That ByteDance statement is rather interesting. Unless those clients are spoofing user agents, that's news to me with that company in particular. From my experience, the two most notable Chinese-based abusers are Alibaba and Tencent - all via their cloud datacenter servers. Huawei is a close third, the article seems to make note of them, too.

The whack-a-mole game continues...

Save a record for this.

Clearly bots stealing your data.

There will be a data stealing lawsuit someday.

To be honest, I don't think many folks are saving raw server logs with the long term in mind for these abusive botnets. In my view, AI/LLM scrapers at this point are modern-day botnets.

most notable Chinese-based abusers are Alibaba and Tencent - all via their cloud datacenter servers. Huawei is a close third, the article seems to make note of them, too.

Exactly. Those should be banned right away. Excessive scraping, ignoring rules.

It may sound harsh, but: anyone who has no technical clue how it works should not be running a public service on the internet, or should pay someone to deal with it (or learn very fast themselves). As with everything that needs knowledge.

Harsh but true. XenForo doesn't equip users with what they need to defend their site.

AI bots have raised the minimum amount of technical skill required, and XenForo has not lowered it again.

But they are not alone; I don't know of a single web app that does a good job of defending itself out of the box.

So server management skills or Cloudflare configuration skills must be learned; otherwise your small website is going to be a lot more expensive to run than you thought it would be.

In my own time, I'm slowly writing something that should be more effective than Cloudflare and plug into any PHP application. I'm almost at the end of what state-of-the-art technology can handle.

That ByteDance statement is rather interesting. Unless those clients are spoofing user agents, that's news to me with that company in particular. From my experience, the two most notable Chinese-based abusers are Alibaba and Tencent - all via their cloud datacenter servers. Huawei is a close third, the article seems to make note of them, too.

90% of these scraper bots are falsifying their user agents and quite possibly using real browsers, fooling even Google Analytics. I see enormous spikes from Chinese traffic in Google traffic.

Honestly, it's pretty bad out there!

I'm slowly writing something that should be more effective than cloudflare and plug into any PHP application on my own time. I'm almost at the end of what state of the art technology can handle.

fail2ban!

Harsh but true.

Barely anyone would think about running an atomic power plant in their backyard, and most people don't know how to maintain their cars and pay someone to do it. Yet, despite the same lack of knowledge, they run a service on the internet and expect it to work magically without any effort or learning of their own. Basically they then post on an internet forum: "My atomic power plant in my backyard that I bought cheaply has started to make strange noises and some red lights are blinking. What does that mean? No, I did not read the manual, why should I? What is an atom btw?"

Xenforo doesn't equip users with what they need to defend their site.

Yup. I know that I have a minority opinion here but in my eyes that is the biggest fail that XF did and does.

I'm slowly writing something that should be more effective than cloudflare and plug into any PHP application on my own time. I'm almost at the end of what state of the art technology can handle.

Very interested to learn about it once you are ready!

I'm slowly writing something that should be more effective than cloudflare and plug into any PHP application on my own time. I'm almost at the end of what state of the art technology can handle.

Wouldn't that still be limited to the resources the server has if the logic is running on the server? What happens if a rogue bot hits your server with 10,000,000 requests per second? Obviously I don't know anything about it, but it seems to me the better option would be to handle the problem upstream of your origin server, so the requests aren't taking up network bandwidth or CPU cycles on your server.

I can't think of anything that would run inside the PHP stack (or even upstream within the web server) on the server that would realistically be able to stop the sort of bad traffic my servers see without the servers themselves being wrecked from just needing to run that logic. I see at least 10,000 HTTP requests that need to be blocked per second on my servers (that's at the lowest end of the spectrum).

Wouldn't that still be limited to the resources the server has if the logic is running on the server?

Obviously.

What happens if a rogue bot hits your server with 10,000,000 requests per second?

The server will go down.

Obviously I don't know anything about it, but seems to me, the better option would be to handle the problem upstream of your origin server so the requests aren't taking network bandwidth or CPU cycles of your server.

It obviously depends on what the goal is. If you want to be prepared for a massive DDoS attack, you need separate systems (and can hope that they are strong enough). If you "just" want to block AI bots (that your server currently serves already without crashing), software on the machine itself may do the trick.

I see at least 10,000 HTTP requests that need to be blocked per second on my servers (that's at the lowest end of the spectrum).

This is clearly a load situation that is light-years above mine.

Yup. I know that I have a minority opinion here but in my eyes that is the biggest fail that XF did and does.

Yeah... I can't think of a single piece of software today that doesn't require bolting aftermarket software on.

It's a shame, we are going to lose the indie internet over it if someone doesn't intervene.

Very interested to learn about it once you are ready!

Thanks, it would be great to have some people interested in it once I've proven it out on our forum.

Install requirements should be:

- Install ClickHouse (we need a hyperfast database that has excellent compression, and this is it)

- Install fail2ban (we use fail2ban to coordinate iptables bans, which are very fast) <-- this requirement will be removed in future versions

- Include a .php file ahead of your application's bootloader

- Edit a configuration file that's in PHP array format

Should be super easy to set up; we would provide a fail2ban config file to make that part of the install easier.

Wouldn't that still be limited to the resources the server has if the logic is running on the server? What happens if a rogue bot hits your server with 10,000,000 requests per second? Obviously I don't know anything about it, but seems to me, the better option would be to handle the problem upstream of your origin server so the requests aren't taking network bandwidth or CPU cycles of your server.

I can't think of anything that would run inside the PHP stack (or even upstream within the web server) on the server that would realistically be able to stop the sort of bad traffic my servers see without the servers themselves being wrecked from just needing to run that logic. I see at least 10,000 HTTP requests that need to be blocked per second on my servers (that's at the lowest end of the spectrum).

- The database is extraordinarily fast and PHP doesn't have to wait for writes to complete, and PHP can also analyze the traffic on a background thread. If there is some overhead on the application, it's only a few milliseconds per hit.

- The analysis engine is very fast because we get to lean heavily on ClickHouse, a fast database written in C++.

- With php2ban, you could trap malicious requests much faster and then hand them off to fail2ban, which uses the Linux kernel's iptables system to issue the ban. This is computationally extremely fast, so the size of attack you can withstand is limited more by the amount of bandwidth your server has access to.

- Most web hosts have a ~10 Gb/s bandwidth pipe, so the system can theoretically withstand an attack of around that size.
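To make the "trap the request, then hand it off for a ban" flow above a bit more concrete, here is a minimal sketch in Python. It is not php2ban's actual logic, which the post does not describe; the class name, the threshold values, and the `ban-candidate` log line format are all assumptions made up for illustration. It counts hits per IP in a sliding time window and produces a log line that a ban tool could be configured to match.

```python
from collections import defaultdict, deque


class SlidingWindowDetector:
    """Flag client IPs that exceed max_hits requests within window_seconds."""

    def __init__(self, max_hits: int, window_seconds: float) -> None:
        self.max_hits = max_hits
        self.window_seconds = window_seconds
        self._hits = defaultdict(deque)  # ip -> timestamps of recent hits

    def record(self, ip: str, timestamp: float) -> bool:
        """Record one hit; return True if the IP is now over the limit."""
        hits = self._hits[ip]
        hits.append(timestamp)
        # Drop timestamps that have fallen out of the window.
        while hits and hits[0] <= timestamp - self.window_seconds:
            hits.popleft()
        return len(hits) > self.max_hits


def format_ban_line(ip: str, timestamp: float) -> str:
    """Build a log line in a made-up format that a ban tool could match on."""
    return f"{timestamp:.0f} ban-candidate ip={ip}"
```

The detector only decides who is over the limit; the ban itself would still be carried out elsewhere (for example by a tool watching the log), which keeps the per-request work small.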

So far I have lots of servers with 2-core CPUs running fail2ban, and fail2ban is very light.

Only on our XenForo site, Endless Sphere, do I see fail2ban consuming a few % of the CPU. This is interesting because fail2ban is written in Python and uses SQLite as the database (which has fast reads but slow writes), so in theory our system could be more performant than fail2ban, since PHP is a few times faster than Python and ClickHouse should be a few times faster than SQLite in rapid read/write situations.

It helps that our weblogs are prefiltered before they go into fail2ban, to isolate only the hits that generate server load. If you were to feed in all the .js/.css/.jpg/etc. hits, you'd have a worse time.
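The post does not say how the prefiltering is done, so the following is only a sketch of one possible approach, in Python. It assumes access log lines in the common "combined" format, where the request is the first double-quoted field, and it assumes that a static-looking file extension is a good enough signal for "does not generate server load". The extension list and function names are hypothetical.

```python
from urllib.parse import urlsplit

# Hypothetical list of extensions treated as cheap static assets.
STATIC_EXTENSIONS = (".js", ".css", ".jpg", ".jpeg", ".png", ".gif", ".ico", ".svg", ".woff2")


def request_path(log_line: str):
    """Extract the request path from a combined-log-format line, or None."""
    try:
        request = log_line.split('"')[1]  # e.g. 'GET /threads/1/ HTTP/1.1'
        target = request.split(" ")[1]
    except IndexError:
        return None
    return urlsplit(target).path  # strips any query string


def generates_load(log_line: str) -> bool:
    """True if the line looks like a dynamic page hit rather than a static asset."""
    path = request_path(log_line)
    if path is None:
        return False
    return not path.lower().endswith(STATIC_EXTENSIONS)
```

Only lines for which `generates_load` returns true would be written to the log file that the ban tool watches; unparseable lines are dropped in this sketch.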

Cloudflare is a solid first-layer shield, but the recent wave of AI scraping traffic can slip past CDN-level checks. Blocking abusive ASNs (like Alibaba/Tencent), adding geo challenges, and applying rate limits help in the short term. Modern bots behave like real browsers, so the more reliable long-term protection is the approach @ES Dev Team outlined.
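As a rough illustration of what blocking by network range amounts to when done at the application layer, here is a small Python sketch using only the standard library. The ranges below are placeholders from the reserved documentation blocks, not real prefixes belonging to any provider; a real list would have to come from an ASN-to-prefix data source, which is outside this sketch. The function name is hypothetical.

```python
import ipaddress

# Placeholder ranges from reserved documentation blocks, NOT real ASN prefixes.
BLOCKED_NETWORKS = [
    ipaddress.ip_network(cidr)
    for cidr in ("192.0.2.0/24", "198.51.100.0/24")
]


def is_blocked(client_ip: str) -> bool:
    """Return True if client_ip falls inside any blocked network."""
    try:
        address = ipaddress.ip_address(client_ip)
    except ValueError:
        # Unparseable input is treated as "not blocked" in this sketch.
        return False
    return any(address in network for network in BLOCKED_NETWORKS)
```

A linear scan like this is fine for a handful of ranges; with thousands of prefixes, the same check is usually better done upstream (firewall or CDN rules) than per request in application code.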

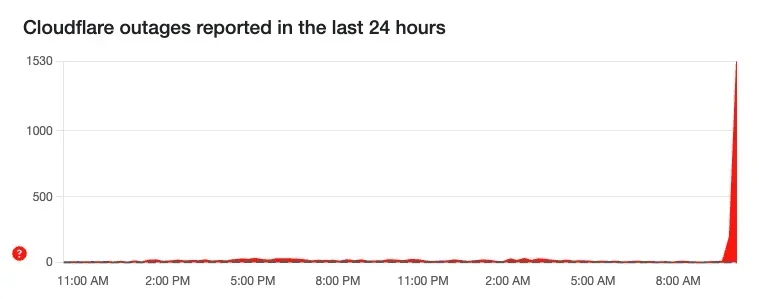

Cloudflare is a solid first-layer shield

Poor timing on Cloudflare's part this morning. Although I guess that effectively stops the attack!

Some of our monitoring for client sites that use Cloudflare (not all of them) just went off (0849 UTC) and it even seems to be impacting their own site. Hopefully not a repeat of the last snafu, that would be embarrassing.

Yeah, down here too.

I discovered the outage when trying to log in to Plex on my TV - got an error, when I went to investigate, I found the Cloudflare error.

This is not doing well for their reputation.

So far I've only found plex.tv and cloudflare.com to be offline ... but I also can't upload images to Slack, which may or may not be related.

I discovered the outage when trying to log in to Plex on my TV - got an error, when I went to investigate, I found the Cloudflare error.

This is not doing well for their reputation.

So far I've only found plex.tv and cloudflare.com to be offline ... but I also can't upload images to Slack, which may or may not be related.

They are back and their status page has a note. I think the most amusing bit was that "down detector" was, well, down.

Poor timing on Cloudflare's part this morning. Although I guess that effectively stops the attack!

Yes, I saw the Dashboard/API outage as well. Since it didn't affect the edge network, overall access remained intact. These brief interruptions happen from time to time. The scraping wave, however, is a completely separate issue: even when Cloudflare is fully stable, modern bots can slip past CDN-level checks. That's exactly why the application-layer approach outlined by ES Dev Team is becoming increasingly important.

Cloudflare (not all of them) just went off (0849 UTC)

Same here at around 10:00 German time for websites using Cloudflare. My DSL went down at the same time, which typically happens once a year at most and only for a couple of minutes, so a somewhat unusual correlation. Mobile data is still working, always good to have a fallback...

They are back and their status page has a note. I think the most amusing bit was that "down detector" was, well, down.

Seems Downdetector is using Cloudflare as well...
