Let's face it. There will be fake users joining our forums in the near future to do all kinds of things, surely more than I can imagine:

- Harvest content

- Make friends with our users, and scam them

- Find ways to post backlinks or promote something

- Maybe even sabotage our communities in some way.

So what is to be done...

Some of my ideas:

- Require login for a user to visit more than X content pages (maybe 10)

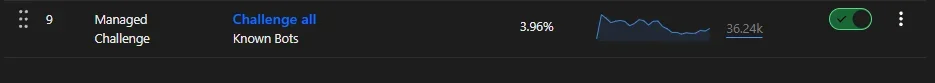

- Use Cloudflare and any other tools that help identify and stop bot behavior

- Potentially close off some portion of the site to the public (the open question is how to keep earning ad revenue on non-public content). One strategy would be to identify the pages that are rarely visited and require login to see those pages.
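
To make the page-limit and rarely-visited ideas a bit more concrete, here is a rough Python sketch. Everything in it is an assumption for illustration, not how any particular forum software does it: the names `PageViewGate`, `rarely_visited`, and `FREE_PAGE_LIMIT` are made up, the visitor ID is assumed to come from something like a cookie or IP address, and the counts live in memory, where a real site would need a shared store.

```python
from collections import Counter

# Hypothetical default for the "X" in the first idea; 10 is the value floated above.
FREE_PAGE_LIMIT = 10


class PageViewGate:
    """Counts content-page views per anonymous visitor and decides when to require login."""

    def __init__(self, limit=FREE_PAGE_LIMIT):
        self.limit = limit
        # visitor_id -> number of content pages already shown to that visitor
        self.views = Counter()

    def allow(self, visitor_id, logged_in):
        """Return True if the page may be shown, False if the visitor must log in first."""
        if logged_in:
            return True
        if self.views[visitor_id] >= self.limit:
            return False
        self.views[visitor_id] += 1
        return True


def rarely_visited(page_views, threshold):
    """Return pages with fewer than `threshold` views: candidates for login-only access."""
    return {page for page, views in page_views.items() if views < threshold}


if __name__ == "__main__":
    gate = PageViewGate(limit=2)
    for _ in range(3):
        print(gate.allow("visitor-abc", logged_in=False))

    print(rarely_visited({"/popular": 500, "/old-thread": 3}, threshold=5))
```

Keep in mind that a bot can clear cookies or rotate IP addresses, so a counter like this only slows down casual scraping. It would probably work best alongside the bot-detection tools mentioned above rather than instead of them.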