
Advanced Traffic Statistics: From Insight to Active Defense 1.7.3


- Thread starter Supergatto


Supergatto

Active member

Supergatto updated Advanced Traffic Statistics: From Insight to Active Defense with a new update entry:

Advanced Traffic Statistics 1.7.3 - Performance & Visual Update


Changelog:

- NEW: Top Blocked Bots Chart: Added a new horizontal bar chart to the main report page. It displays today's "Top 10 Blocked Bots," giving you immediate visual feedback on exactly who is attacking your forum and being stopped by the AI/Junk Shields.

- PERFORMANCE: Core Optimization: Implemented an optimized version of the core file (Listener.php) featuring a smart cache limiting system. This reduces database write operations by...
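The changelog entry is cut off, so it doesn't say exactly how the cache limiting works. As a rough illustration only, one common way to reduce database writes is to keep per-bot block counts in memory and write them out in a single batch once a size limit is reached. The same aggregated counts can then feed a "Top 10" ranking. The class and method names (`BufferedBotCounter`, `record`, `flush`, `top_blocked`), the flush limit, and the in-memory `Counter` that stands in for a database table are all hypothetical. None of this is the add-on's actual Listener.php logic.

```python
from collections import Counter


class BufferedBotCounter:
    """Hypothetical sketch: batch bot-block counts in memory, persist them in bulk."""

    def __init__(self, flush_limit: int = 100):
        self.flush_limit = flush_limit          # buffered hits allowed before one batch write
        self.buffer: Counter[str] = Counter()   # pending counts, not yet persisted
        self.persisted: Counter[str] = Counter()  # stands in for a database table (assumption)
        self.write_count = 0                    # number of batch writes performed

    def record(self, bot_name: str) -> None:
        self.buffer[bot_name] += 1
        # Touch the "database" only once enough hits have accumulated.
        if sum(self.buffer.values()) >= self.flush_limit:
            self.flush()

    def flush(self) -> None:
        if not self.buffer:
            return
        self.persisted.update(self.buffer)  # one batched write instead of many small ones
        self.buffer.clear()
        self.write_count += 1

    def top_blocked(self, n: int = 10) -> list:
        # Include still-buffered hits so the chart is never stale.
        return (self.persisted + self.buffer).most_common(n)


counter = BufferedBotCounter(flush_limit=5)
for name in ["ScraperA", "ScraperB", "ScraperA", "ScraperC", "ScraperA", "ScraperB"]:
    counter.record(name)
print(counter.write_count)     # 1
print(counter.top_blocked(2))  # [('ScraperA', 3), ('ScraperB', 2)]
```

The trade-off is that buffered hits are lost if the process dies before a flush, which is usually acceptable for statistics but not for security decisions.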


Looks and works great so far. I did a full re-install.

I noticed that the "Robots:" line in the widget always shows zero, although the report page shows the robot count correctly. Both the widget and the report page show the same number of guests, and the total on the report page does not include the bots, only members and guests.

So I can't really decide which one is accurate.

I also put up the XF widget showing guests for comparison, and robots are not counted there either, just the total of members online and guests.


smallwheels

Well-known member

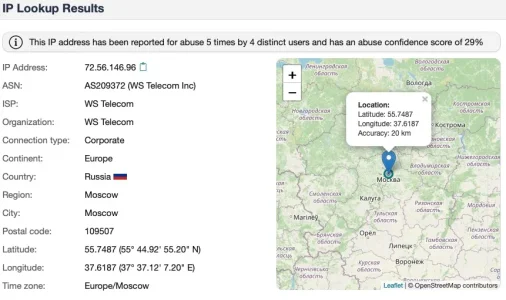

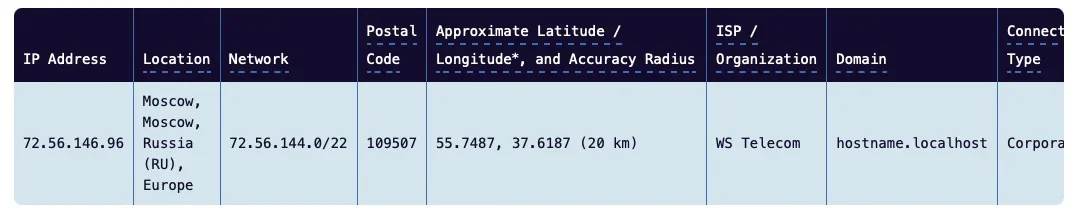

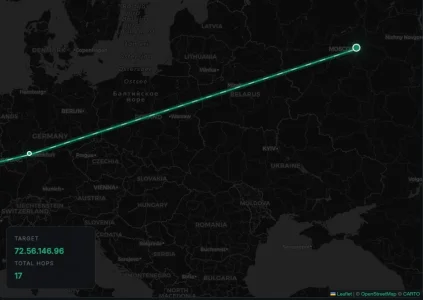

> Technically, the IP is assigned to Germany (which is why the add-on flags it as DE), but the actor behind it is in Russia.

I would not buy that. The IPs belong to AS209372 (WS Telecom), which is well known for all kinds of malicious activity. It is an ASN well worth blocking: you won't miss anything, and you will have less trouble. Their website looks legitimate at first sight and creates the impression of an American company, but if you click on their choice of currencies it is pretty obvious that this is a Russian undertaking.

Their ASN spans a lot of countries, but the IPs in question are clearly located in Russia as I understand it.

https://bgp.tools/as/209372#prefixes
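In practice, blocking an ASN means blocking the IP prefixes it announces, such as the ones on the bgp.tools page above. Here is a minimal sketch using Python's standard `ipaddress` module. It assumes you have already copied the prefix list into your own configuration. The prefixes below are reserved documentation ranges used as placeholders, not AS209372's real announcements, and `is_blocked` is a hypothetical helper name.

```python
import ipaddress

# Placeholder prefixes from the IPv4/IPv6 documentation ranges, NOT real AS209372 data.
BLOCKED_PREFIXES = [
    ipaddress.ip_network("192.0.2.0/24"),
    ipaddress.ip_network("198.51.100.0/24"),
    ipaddress.ip_network("2001:db8::/32"),
]


def is_blocked(ip: str) -> bool:
    """Return True if the address falls inside any blocked prefix."""
    addr = ipaddress.ip_address(ip)
    # Testing an IPv4 address against an IPv6 network (or vice versa) returns False,
    # so one mixed list is safe.
    return any(addr in net for net in BLOCKED_PREFIXES)


print(is_blocked("192.0.2.17"))   # True
print(is_blocked("203.0.113.5"))  # False
print(is_blocked("2001:db8::1"))  # True
```

Matching on announced prefixes also avoids the question of which country a geolocation database assigns to an address. That is exactly the point of disagreement in this thread.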

MaxMind (whose geo database you are using in its free Lite version) also says the IP is in Moscow:

GeoIP web services demo | MaxMind (www.maxmind.com)

And a traceroute indeed shows the same:

So how do you come to the conclusion that the IP is in Germany?

smallwheels

Well-known member

> The Bot Advantage: A key strength of our system compared to standard analytics is our Bot Intelligence. The system doesn't just count hits; it actively recognizes, classifies, and separates human traffic from bot traffic (crawlers, spiders, etc.). This provides you with clean, realistic statistics that other tools often struggle to distinguish effectively.

I am wondering how you do that, given that at the moment most bot traffic comes from residential proxies, and so far no solution on the market is able to identify them reliably and fully (including massive corporations like Cloudflare that do this for a living). Could you explain this?

Supergatto

Active member

Hi smallwheels,

You raise a technically excellent point. You are absolutely right: residential proxies are currently the "End Boss" of bot detection, and even massive infrastructures like Cloudflare face challenges with them because the IP itself looks clean and legitimate.

However, our "Bot Intelligence" doesn't rely solely on IP reputation (which, as you noted, is easily bypassed by residential proxies). We use a multi-layered approach specifically designed for the XenForo environment:

- Behavioral Heuristics: Since we run at the application level, we can see what the visitor is doing. Bots often follow specific navigation patterns, request pages at superhuman speeds, or ignore typical session cookies in ways that real browsers don't.

- Honeypots (Traps): This is one of our most effective tools against scrapers. The system injects invisible links or fields that human users never see or click. If a "visitor" interacts with these traps, they are instantly flagged as a bot, regardless of whether their IP is residential, mobile, or data center.

- User Agent & Fingerprinting: Although the User Agent is easily spoofed, we cross-reference it with the headers a real browser of that type is expected to send.

- Known Signatures: We maintain an internal database of known crawler signatures that aren't always caught by standard logs.
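The post describes these layers only in outline. The sketch below is a hedged illustration of what such application-level checks can look like. It implements three independent signals: a hidden honeypot form field, a mismatch between the claimed browser and the headers it actually sent, and a sliding-window request-rate check. The field name `website_url`, the header expectations, and the thresholds are all assumptions. The add-on's real rules are not published in this thread.

```python
from __future__ import annotations

import time
from collections import defaultdict, deque
from typing import Optional

HONEYPOT_FIELD = "website_url"  # hypothetical hidden field that humans never see or fill


def honeypot_triggered(form: dict[str, str]) -> bool:
    # Real users leave the invisible field empty; naive form-filling bots do not.
    return bool(form.get(HONEYPOT_FIELD, "").strip())


def headers_inconsistent(headers: dict[str, str]) -> bool:
    # Assumption: a UA claiming to be a mainstream browser normally also sends
    # Accept and Accept-Language, so their absence is a (weak) bot indicator.
    claims_browser = "Mozilla/" in headers.get("User-Agent", "")
    return claims_browser and not (headers.get("Accept") and headers.get("Accept-Language"))


class RateWindow:
    """Count requests per client within a sliding time window."""

    def __init__(self, max_requests: int = 30, window_seconds: float = 10.0):
        self.max_requests = max_requests
        self.window = window_seconds
        self.hits: dict[str, deque[float]] = defaultdict(deque)

    def too_fast(self, client_id: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        q = self.hits[client_id]
        q.append(now)
        # Drop timestamps that have fallen out of the window.
        while q and q[0] <= now - self.window:
            q.popleft()
        return len(q) > self.max_requests


rw = RateWindow(max_requests=3, window_seconds=1.0)
print([rw.too_fast("198.51.100.7", now=t) for t in (0.0, 0.1, 0.2, 0.3, 5.0)])
# [False, False, False, True, False]
```

Note that the honeypot is the only one of these signals that a human practically never triggers. The other two can misfire on unusual but legitimate clients.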

In our tests, this combination drastically reduces the "fake" traffic counts compared to standard raw access logs.

I hope this explains the logic behind the system!

Supergatto

Active member

"Hello and thanks for the feedback!

What you are seeing is actually intended behavior and follows standard XenForo logic. Here is the technical explanation:

- The "Total Online" Calculation: In XenForo (and in this add-on), the formula for "Total Online" is strictly Members + Guests. Robots are tracked separately and are excluded from the total by design. This prevents artificial traffic from inflating your community statistics: if bots were added to the total, you might see "500 users online" when only 10 are real humans, which would be misleading.

- Why the Widget shows 0 Robots vs. Report Page:

- The Widget relies on XenForo's standard "Session Activity" (lighter and cached) to minimize load on every page view. If XenForo's native system doesn't flag a visitor as a robot (or if the session cache hasn't updated yet), it might show 0 or count them as Guests.

- The Report Page uses our add-on's Advanced Tracking Engine. This engine performs a deep analysis of User Agents in real-time. That is why the Report page is much more accurate and detects bots that standard XenForo might miss or group under "Guests".

In summary: The Report page is your "Source of Truth" for detailed analysis, while the Widget provides a quick, lightweight snapshot based on standard sessions."
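The arithmetic behind the two displays can be modeled roughly as follows. This is a hypothetical model, not XenForo's or the add-on's code. A narrow "native" marker list stands in for the widget's session-based detection, and a broader list stands in for the report page's engine. Both lists are made-up examples. In both cases the total is members + guests, so robots never appear in it. A bot that the narrower check misses simply lands among the guests.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Session:
    user_id: int      # 0 for logged-out visitors (assumed convention)
    user_agent: str


# Hypothetical marker lists: a short "native" list vs. a broader report-page list.
NATIVE_BOT_MARKERS = ("googlebot", "bingbot")
REPORT_BOT_MARKERS = NATIVE_BOT_MARKERS + ("gptbot", "python-requests", "headlesschrome")


def summarize(sessions: list[Session], bot_markers: tuple[str, ...]) -> dict[str, int]:
    members = guests = robots = 0
    for s in sessions:
        ua = s.user_agent.lower()
        if s.user_id:
            members += 1
        elif any(marker in ua for marker in bot_markers):
            robots += 1
        else:
            guests += 1
    # Robots are reported separately and deliberately left out of the total.
    return {"members": members, "guests": guests, "robots": robots, "total": members + guests}


sessions = [
    Session(42, "Mozilla/5.0 (Windows NT 10.0)"),
    Session(0, "Mozilla/5.0 (iPhone)"),
    Session(0, "Mozilla/5.0 (compatible; GPTBot/1.0)"),
    Session(0, "python-requests/2.31"),
]
print(summarize(sessions, NATIVE_BOT_MARKERS))  # {'members': 1, 'guests': 3, 'robots': 0, 'total': 4}
print(summarize(sessions, REPORT_BOT_MARKERS))  # {'members': 1, 'guests': 1, 'robots': 2, 'total': 2}
```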

smallwheels

Well-known member

Fully agreed that the bot identification built into XF leaves a lot to be desired, to put it politely. As far as I understand, it is based solely on the submitted user agent and on whether that user agent is marked as a bot in the database. So it dates back to the much friendlier and more honest times of a few years ago and is not at all adequate for today's world. Without the "known bots" add-on it will find even less.

In today's world things have become much more complex, and the user agent cannot be relied on. Clearly, fingerprinting and behavioral tracking are better approaches, and for the latter a system that is integrated into XF as an add-on has an advantage over external firewalls, which do not know the application and cannot see the behavior.

I get the honeypot approach (that's what the spaminator add-ons have done successfully for many years), and I am curious about the signature database; I had expected that to be way over the top for an XF add-on, let alone a free one. Heuristics, I am not so sure about. While it sounds impressive, it is in fact guessing based on criteria, which by nature leads to false positives as well as false negatives, depending on the mechanism. Most people probably remember that from virus scanners on Windows that went berserk for no reason.

If I understand this correctly, it means your system needs constant updating of signatures, either via an update mechanism or through a central infrastructure accessed via an API in real time. And on the server side, providing that data requires an infrastructure that constantly monitors, analyzes, and creates new signatures. That sounds like a lot of effort.


Supergatto

Active member

You are absolutely spot on regarding the current state of XF's native detection; relying solely on User Agent strings in 2024 is indeed like bringing a knife to a gunfight.

To address your concerns about the infrastructure and the "Virus Scanner" effect:

You are right that a real-time, cloud-based signature database requires massive infrastructure. We made a conscious design choice not to rely on external API calls. Why? Privacy and performance. We don't want the forum to hang while waiting for a third-party server to validate a visitor, nor do we want to send user data to an external cloud.

Instead, our "signatures" are delivered via add-on updates. They are a curated list of known bad actors and scrapers specific to the XenForo ecosystem, not a universal antivirus database. It's lightweight and runs locally.
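Here is a minimal sketch of the "signatures shipped with the add-on, matched locally" design described above. It assumes the signatures are plain regular expressions over the User-Agent string, stored in a versioned data file. The JSON layout, the field names, and the patterns themselves are invented for illustration. The point is that matching needs no network call, and refreshing the list only means shipping a new file with the next update.

```python
from __future__ import annotations

import json
import re
from typing import Optional

# Stand-in for a data file shipped inside an add-on update (hypothetical format and patterns).
BUNDLED_SIGNATURES = """
{
  "version": 1,
  "signatures": [
    {"name": "generic-scraper", "pattern": "(?i)(scrapy|python-requests|go-http-client)"},
    {"name": "headless-browser", "pattern": "(?i)headlesschrome"}
  ]
}
"""


def load_signatures(raw: str) -> tuple[int, list[tuple[str, re.Pattern[str]]]]:
    data = json.loads(raw)
    # Compile once at load time so per-request matching stays cheap.
    compiled = [(sig["name"], re.compile(sig["pattern"])) for sig in data["signatures"]]
    return data["version"], compiled


def match_signature(user_agent: str, signatures: list[tuple[str, re.Pattern[str]]]) -> Optional[str]:
    # Purely local check: no external API call, no visitor data leaves the server.
    for name, pattern in signatures:
        if pattern.search(user_agent):
            return name
    return None


version, sigs = load_signatures(BUNDLED_SIGNATURES)
print(version)                                                    # 1
print(match_signature("python-requests/2.31", sigs))              # generic-scraper
print(match_signature("Mozilla/5.0 HeadlessChrome/120.0", sigs))  # headless-browser
print(match_signature("Mozilla/5.0 (Windows NT 10.0)", sigs))     # None
```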

Your comparison to old virus scanners is valid. Heuristic detection is indeed probabilistic. To mitigate false positives, our system uses a scoring model for heuristics rather than a binary "Block Immediately" trigger.

- The Honeypot is the "hard" trap (high certainty).

- Heuristics (behavior/fingerprinting) add "risk points."

- We tune the system to be conservative: we would rather let a sophisticated bot pass as a "Guest" than accidentally block a real human. The goal is to give the Admin a clear picture of likely bot traffic that standard logs miss, so they can take action (like the "Emergency Block") if they see a pattern, rather than having the system go "berserk" automatically.
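The scoring model described above can be sketched roughly as follows. The signal names, point values, thresholds, and the intermediate "suspicious" label are assumptions chosen to show the shape of the design, not the add-on's actual weights. In this sketch, a honeypot hit is decisive on its own, heuristic signals only accumulate risk points, and the threshold is high enough that no single weak signal marks a visitor as a bot.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical weights for "soft" heuristic signals; none is decisive on its own.
HEURISTIC_POINTS = {
    "header_mismatch": 25,
    "too_fast": 30,
    "no_cookies": 20,
    "signature_match": 40,
}
BOT_THRESHOLD = 70  # conservative: no single signal above reaches it


@dataclass
class Verdict:
    label: str  # "bot", "suspicious" or "guest"
    score: int
    reasons: list[str] = field(default_factory=list)


def classify(honeypot_hit: bool, signals: set[str]) -> Verdict:
    if honeypot_hit:
        # Hard trap: high certainty, no scoring needed.
        return Verdict("bot", 100, ["honeypot"])
    reasons = sorted(s for s in signals if s in HEURISTIC_POINTS)
    score = sum(HEURISTIC_POINTS[s] for s in reasons)
    if score >= BOT_THRESHOLD:
        return Verdict("bot", score, reasons)
    if score > 0:
        # Surface to the admin (who may decide on an "Emergency Block"), but never auto-block.
        return Verdict("suspicious", score, reasons)
    return Verdict("guest", 0, reasons)


print(classify(True, set()).label)                               # bot
print(classify(False, {"too_fast"}).label)                       # suspicious
print(classify(False, {"too_fast", "signature_match"}).score)    # 70
print(classify(False, set()).label)                              # guest
```

Raising `BOT_THRESHOLD` trades missed bots (false negatives) for fewer wrongly flagged humans (false positives). This is the conservative direction the post describes.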