
021 submitted a new resource:

[021] ChatGPT Bots - Bot framework for ChatGPT API.

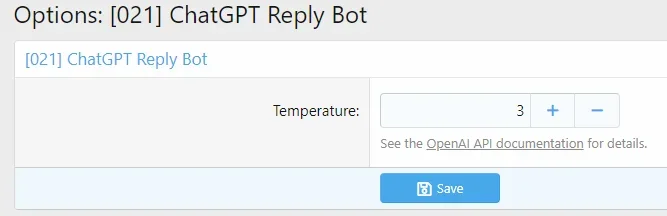

This add-on provides helper functions for working with ChatGPT.

It lets you set one OpenAI API key that is shared by every add-on that works with ChatGPT, so those add-ons do not each load their own copy of the same dependencies.
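To illustrate the "avoid duplicate dependencies" idea, here is a minimal, self-contained sketch of a shared, lazily built service: every consumer that asks for `'chatGPT'` receives the same client object, built once from a single API-key option. `SharedClientContainer`, `FakeOpenAiClient` and the placeholder key are hypothetical stand-ins, not XenForo's container or the Orhanerday client, and the sketch assumes the add-on registers its client as a shared (cached) service. It needs PHP 8.1+ for the readonly promoted property.

```php
<?php
// Hypothetical sketch: NOT XenForo's real container or the real OpenAI client.
// One lazily created client is shared by every consumer of the 'chatGPT' id.

final class SharedClientContainer
{
    /** @var array<string, callable> */
    private array $factories = [];

    /** @var array<string, object> */
    private array $instances = [];

    public function register(string $id, callable $factory): void
    {
        $this->factories[$id] = $factory;
    }

    public function get(string $id): object
    {
        // Build the service on first access only, then reuse the cached instance.
        if (!isset($this->instances[$id])) {
            if (!isset($this->factories[$id])) {
                throw new InvalidArgumentException("Unknown service: $id");
            }
            $this->instances[$id] = ($this->factories[$id])();
        }
        return $this->instances[$id];
    }
}

// Hypothetical stand-in for an API client; counts how often it is constructed.
final class FakeOpenAiClient
{
    public static int $constructed = 0;

    public function __construct(public readonly string $apiKey)
    {
        self::$constructed++;
    }
}

$options = ['bsChatGptApiKey' => 'sk-example']; // placeholder key, one shared setting

$container = new SharedClientContainer();
$container->register('chatGPT', fn () => new FakeOpenAiClient($options['bsChatGptApiKey']));

// Two different add-ons asking for the client receive the same instance.
$clientForAddonA = $container->get('chatGPT');
$clientForAddonB = $container->get('chatGPT');
```

Because the instance is cached after the first `get()`, later consumers reuse both the client and its configured key instead of constructing their own.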

Developer usage guide

Get the OpenAI API key

```php
$apiKey = \XF::options()->bsChatGptApiKey;
```

Get the OpenAI API client

```php
/** @var \Orhanerday\OpenAi\OpenAi $api */
$api = \XF::app()->container('chatGPT');
```

Get a reply from ChatGPT

```php
use...
```
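The reply snippet above is cut off in this preview, so the add-on's own reply helper is not shown here. As a hedged, offline sketch of what typically happens underneath, the example builds a chat-completion request and pulls the reply text out of the JSON response. It assumes the Orhanerday client's `chat()` returns the raw response body as a string; `buildChatOptions()`, `extractReply()`, the model name and the hard-coded sample response are illustrative only and are not part of this add-on.

```php
<?php
// Sketch only: request shape for a chat completion and how to read the reply.
// Assumption: with orhanerday/open-ai, $api->chat($opts) returns the raw JSON
// body as a string. A hard-coded, illustrative body replaces that network call.

/** Build options for a single-turn chat completion (model name is an assumption). */
function buildChatOptions(string $prompt, string $model = 'gpt-3.5-turbo'): array
{
    return [
        'model' => $model,
        'messages' => [
            ['role' => 'user', 'content' => $prompt],
        ],
    ];
}

/** Return the assistant's text from a chat-completion JSON body. */
function extractReply(string $responseBody): string
{
    $data = json_decode($responseBody, true, 512, JSON_THROW_ON_ERROR);

    // The API reports failures as a top-level "error" object.
    if (isset($data['error'])) {
        throw new RuntimeException('OpenAI error: ' . ($data['error']['message'] ?? 'unknown'));
    }

    return $data['choices'][0]['message']['content'] ?? '';
}

$opts = buildChatOptions('Say hello');

// Hypothetical stand-in for: $responseBody = $api->chat($opts);
$responseBody = '{"choices":[{"index":0,"message":{"role":"assistant","content":"Hello!"},"finish_reason":"stop"}]}';

echo extractReply($responseBody), PHP_EOL; // prints "Hello!"
```

Decoding with `JSON_THROW_ON_ERROR` and checking for an `error` object keeps a bad key or malformed response from silently turning into an empty reply.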