Anomandaris


- Affected version: 2.2.13

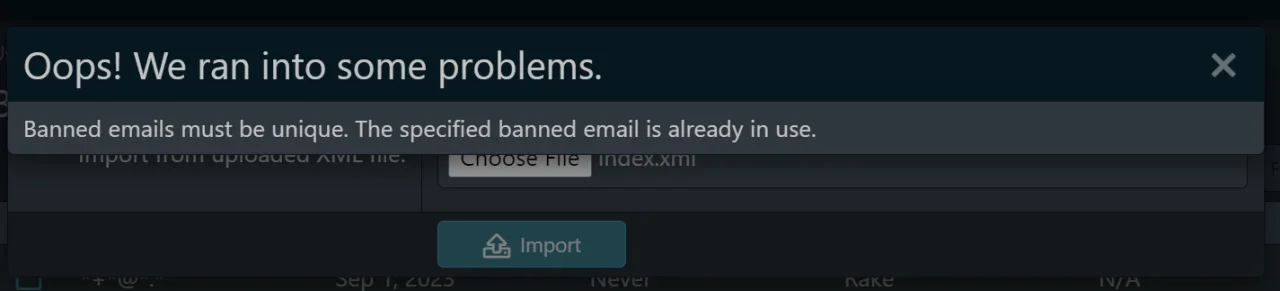

When importing an XML file of banned emails, the import stops as soon as it hits a duplicate. Any unique emails after the duplicate are skipped.

XF v2.2.13

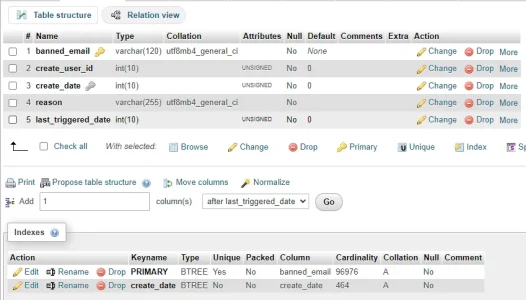

/admin.php?banning/emails

If a duplicate is found, it should just ignore it and continue importing the rest of the list.

How it works right now: if an email that is already on the list appears on line 5, the import stops there and displays an error. None of the 30,000 lines after that duplicate are imported.
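A minimal Python sketch of the expected behavior, for illustration only. It is not XenForo's code, and the XML layout (a `<banned_emails>` root with `<entry banned_email="...">` children) and the function name `import_banned_emails` are hypothetical. Duplicates, whether already banned or repeated inside the file, are collected and skipped instead of aborting the whole import.

```python
import xml.etree.ElementTree as ET


def import_banned_emails(xml_text, existing):
    """Import banned emails, skipping duplicates instead of stopping.

    Hypothetical XML layout (assumption, not XenForo's real format):
        <banned_emails><entry banned_email="..."/>...</banned_emails>
    Returns (imported, skipped).
    """
    seen = set(existing)  # emails already on the ban list
    imported, skipped = [], []
    root = ET.fromstring(xml_text)
    for entry in root.iter("entry"):
        email = (entry.get("banned_email") or "").strip()
        if not email:
            continue  # ignore empty entries
        if email in seen:
            skipped.append(email)  # duplicate: note it and keep going
            continue
        seen.add(email)  # also catches repeats within the same file
        imported.append(email)
    return imported, skipped
```

With `existing = ["b@x.com"]`, a file listing a@x.com, b@x.com, a@x.com, c@x.com imports a@x.com and c@x.com and reports b@x.com and the repeated a@x.com as skipped, so one duplicate no longer blocks the rest of the list.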

The error shown is: "Banned emails must be unique. The specified banned email is already in use."