Phid

Dear Sir/Madam,

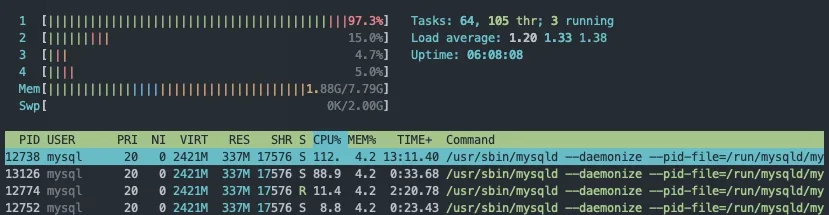

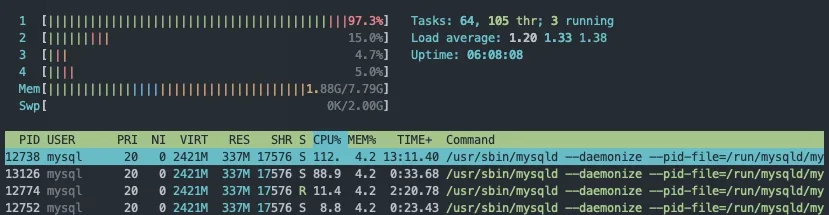

We recently upgraded to XF 2.2, and today we noticed the forum was running very slowly. On the server, MySQL was using a lot of CPU. The database process list showed only three processes, all running the same query:

```sql
UPDATE (
    SELECT content_id FROM xf_reaction_content
    WHERE content_type = ?
      AND reaction_user_id = ?
) AS temp
INNER JOIN xf_post AS reaction_table ON (reaction_table.`post_id` = temp.content_id)
SET reaction_table.`reaction_users` = REPLACE(reaction_table.`reaction_users`, ?, ?)
```
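For reference, here is a small, self-contained reproduction of what this statement does, using Python's built-in sqlite3 module. Everything in it is assumed for illustration. The trimmed-down table definitions, the sample rows, and the JSON-like `reaction_users` strings are invented. The name swap stands in for the two `?` values in `REPLACE`, which the process list does not show. `rewrite_reaction_users` is a hypothetical helper. SQLite has no MySQL-style `UPDATE ... INNER JOIN`, so the derived table becomes an equivalent `WHERE post_id IN (subquery)` filter. The sketch shows that each run rewrites one `xf_post` row for every post the given user reacted to.

```python
import sqlite3


def rewrite_reaction_users(conn, content_type, user_id, old, new):
    """Rough SQLite stand-in for the logged UPDATE (hypothetical helper).

    Rewrites reaction_users only on posts the given user reacted to.
    MySQL's UPDATE (derived table) INNER JOIN xf_post is expressed here as
    WHERE post_id IN (subquery). Returns the number of xf_post rows updated.
    """
    cur = conn.execute(
        """
        UPDATE xf_post
        SET reaction_users = REPLACE(reaction_users, ?, ?)
        WHERE post_id IN (
            SELECT content_id FROM xf_reaction_content
            WHERE content_type = ? AND reaction_user_id = ?
        )
        """,
        (old, new, content_type, user_id),
    )
    return cur.rowcount


conn = sqlite3.connect(":memory:")
# Trimmed-down, assumed schemas: only the columns the query touches.
conn.executescript("""
    CREATE TABLE xf_post (post_id INTEGER PRIMARY KEY, reaction_users TEXT);
    CREATE TABLE xf_reaction_content (
        content_type TEXT, content_id INTEGER, reaction_user_id INTEGER
    );
""")
# Invented data: user 7 reacted to posts 1 and 2; user 9 reacted to posts 2 and 3.
conn.executemany("INSERT INTO xf_post VALUES (?, ?)", [
    (1, '[{"user_id":7,"username":"OldName"}]'),
    (2, '[{"user_id":7,"username":"OldName"},{"user_id":9,"username":"Other"}]'),
    (3, '[{"user_id":9,"username":"Other"}]'),
])
conn.executemany("INSERT INTO xf_reaction_content VALUES (?, ?, ?)", [
    ("post", 1, 7), ("post", 2, 7), ("post", 2, 9), ("post", 3, 9),
])

updated = rewrite_reaction_users(
    conn, "post", 7, '"username":"OldName"', '"username":"NewName"'
)
print(updated)  # 2: only the posts user 7 reacted to are rewritten
```

On a live forum the same pattern runs against the full `xf_post` table. The work per run therefore grows with the number of reactions that user has given.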

Any idea how to make it stop?