Alteran Ancient

Well-known member

I gave this a try on one of my test installs: an otherwise clean CentOS 6.5 box with Percona DB and xF 1.2.1 (I still need to renew that particular license).

Off the bat, page generation times were noticeably faster than php-fpm running on the same server through a socket config - and this was without giving HHVM a chance to "warm up" or to start using JIT. I would love to use it as my daily driver, but there was some undesirable behaviour that I couldn't shake and cannot put up with on a production server...
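For anyone who wants to put rough numbers on the "cold" versus "warmed up" difference, here is a minimal sketch of how it could be timed. It is not what I ran: the helper names (`time_requests`, `summarize`, `make_fetcher`) and the localhost URLs in the comments are made up for illustration, and it measures the whole HTTP round trip rather than XenForo's own page generation figure.

```python
import statistics
import time
import urllib.request


def time_requests(fetch, runs=20, warmup=0):
    """Call fetch() `warmup` times untimed, then `runs` times timed.

    Returns a list of wall-clock durations in seconds, one per timed call.
    """
    for _ in range(warmup):
        fetch()
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        fetch()
        timings.append(time.perf_counter() - start)
    return timings


def summarize(timings):
    """Reduce a list of durations (seconds) to median and worst case in ms."""
    return {
        "median_ms": statistics.median(timings) * 1000,
        "max_ms": max(timings) * 1000,
    }


def make_fetcher(url):
    """Build a zero-argument callable that downloads `url` and discards the body."""
    def fetch():
        with urllib.request.urlopen(url, timeout=10) as resp:
            resp.read()
    return fetch


# Example usage (hypothetical URLs, assuming each backend sits behind its own port):
#   cold = summarize(time_requests(make_fetcher("http://localhost:8081/"), warmup=0))
#   warm = summarize(time_requests(make_fetcher("http://localhost:8081/"), warmup=50))
```

Running it once with `warmup=0` and once with a larger `warmup` against the same backend should give a feel for how much the JIT matters, though a proper load-testing tool would be more trustworthy than this.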

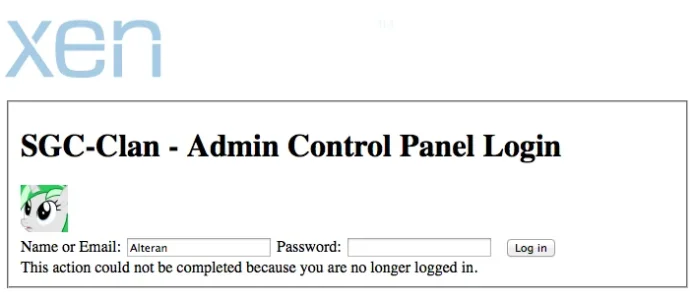

Taigachat stopped working. Fairly trivial, but it would annoy my community. More importantly for me, though, the ACP became inaccessible. The CSS just plain wouldn't load, and any attempt to log in would result in "This action could not be completed because you are no longer logged in."

So, it's almost there. Just needs a bit of bug-fixing.

Code:

hhvm --version

HipHop VM 3.0.1 (rel)

Compiler:

Repo schema: e69de29bb2d1d6434b8b29ae775ad8c2e48c5391

nginx -v

nginx version: nginx/1.5.13