Sim

Well-known member

Just thought I'd share some interesting data from an (unexpected!) server move last week.

I've been hosting my forums in Linode's Singapore DC for quite a few years now, primarily because it was the closest (network-wise) to Australia, where most of PropertyChat's audience is.

However, ZooChat now has a very international audience: around 40% of users come from the US and 25% from the UK, with Australia a distant third at 5% (overall, North America is 43%, Europe 36%, Asia 10%, Oceania 7%).

So Singapore is a long way from the majority of our users.

I had been planning on relocating the server for quite some time, but there were higher priorities, so it never got done. However, last week a tricky server issue took my main server offline and forced me to build a new one, and I figured that was as good a time as any to move the site. I chose Linode's Newark DC on the US east coast because it should be close enough for most US users and is much closer to Europe than the US west coast.

Things went well (it took quite a few hours to transfer the image galleries from Singapore to Newark!). Then yesterday, while reconfiguring StatusCake to monitor the new servers, I discovered some interesting data: I had forgotten I'd set up StatusCake page speed monitoring a while back, and you can see the impact the server move had!

(Note that the site uses Cloudflare; I'm not sure how much impact that has, if any.)

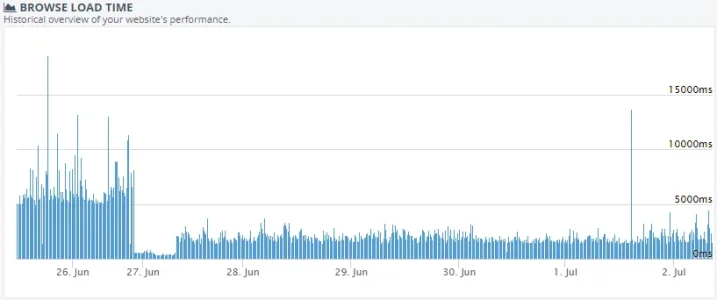

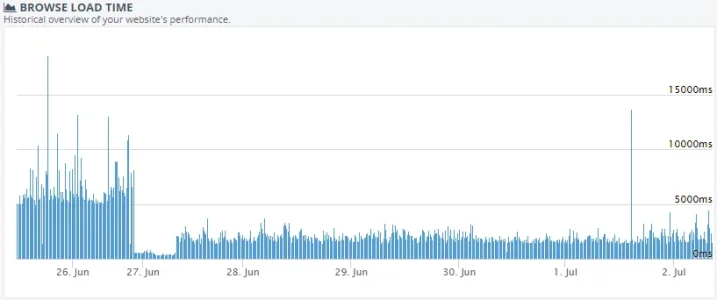

From the UK:

... I estimate roughly a 2x page speed boost for my UK audience.

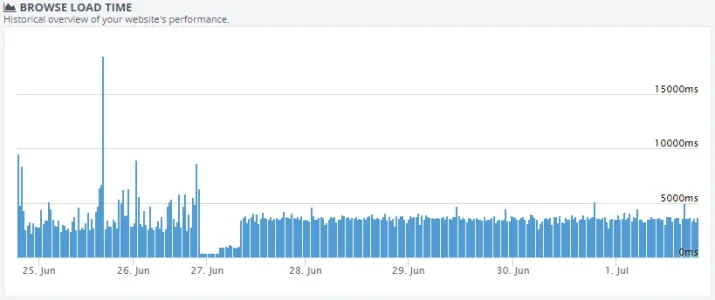

From the US:

... I estimate as much as a 3x page speed boost for my US audience!

Naturally there was always going to be a penalty from moving the server further away from Australia, but given the US-centric nature of the internet, most countries should have pretty decent connectivity to the US, so I'm hoping the penalty is smaller than the gains for most other users.

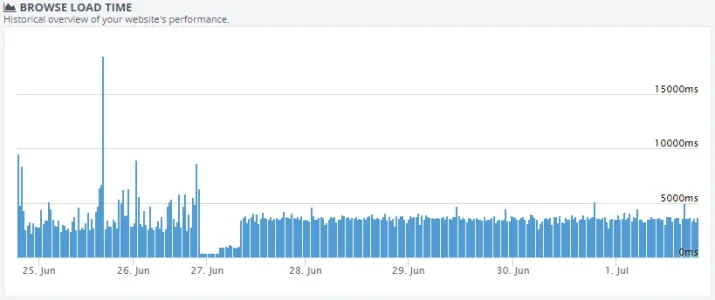

From Australia:

I estimate roughly a 40-50% page speed penalty from Australia, which is not as bad as I had feared it would be!

It's interesting to see how much more stable the page speed performance is on the new server! The site is now on its own server, so it doesn't have to contend for resources with PropertyChat like it did previously.

So, I'm going to call that mission accomplished - the performance boost (for the majority of my audience) from relocating the server to the east coast of the US is significant and worthwhile.