Using DigitalOcean Spaces or Amazon S3 for file storage in XF 2.1+

- Thread starter Chris D

BubbaLovesCheese

Active member

I personally am using two S3 buckets: the default data bucket is public, and the internal one is restricted.

But that's not necessary. AFAIK the system restricts internal files so that they are not visible to the public.

So, that looks correct, yes. But check that internal-data is really protected from the public.

Sorry for the German screenshot. But only the top entry should have access.

View attachment 253080

That is a good idea, but I already placed both folders into the same bucket. The problem I have is that all my folders and files are restricted, both data and internal_data. Even though I unblocked public access for the whole bucket, I still cannot see them. I think it may be the way I imported the folder from XenForo. All the individual files (objects) are set to private, so I guess my question is:

- Do I have to go to my `s3://xf-bucket/data/` and make all the folders/files public that way? Because there are 1 million files in the `avatars` folder, and that will take a long time.
- Or is there another global setting I can use?

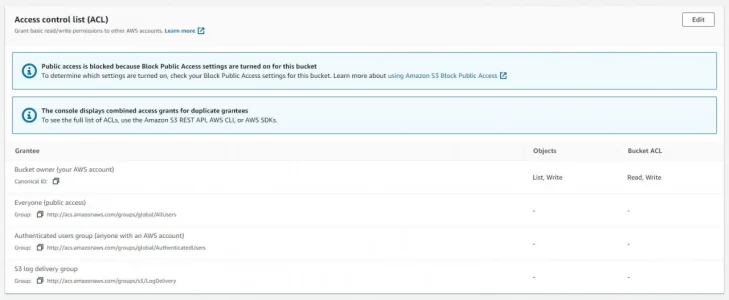

Or would I change something here instead?
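On the "global setting" question: one option that avoids touching each object is a bucket policy granting anonymous read on just the `data/` prefix. Below is a minimal sketch that only builds the policy JSON. The bucket name `xf-bucket` and the `data` prefix are taken from the post above, the function name `public_read_policy` is made up for illustration, and none of this has been tested against this particular setup. S3 would also need the Block Public Access options that cover bucket policies to be off before it accepts a policy like this.

```python
import json


def public_read_policy(bucket: str, prefix: str) -> dict:
    """Build a bucket policy document allowing anonymous GetObject on one prefix.

    Hypothetical helper: it only produces JSON, it does not talk to AWS.
    """
    prefix = prefix.strip("/")  # tolerate "data", "/data" or "data/"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "PublicReadForPrefix",
                "Effect": "Allow",
                "Principal": "*",
                "Action": "s3:GetObject",
                # Only objects under the prefix are covered, so a sibling
                # prefix such as internal_data/ is not made public by this.
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}/*",
            }
        ],
    }


if __name__ == "__main__":
    # Print the JSON so it can be pasted into the bucket's policy editor.
    print(json.dumps(public_read_policy("xf-bucket", "data"), indent=2))
```

Because the policy is scoped to one prefix, it would leave the per-object ACLs as they are, which may or may not be what you want if you later split into two buckets.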

These are the settings I use:

Block all public access: Off

- Block public access to buckets and objects granted through new access control lists (ACLs): Off
- Block public access to buckets and objects granted through any access control lists (ACLs): Off
- Block public access to buckets and objects granted through new public bucket or access point policies: On
- Block public and cross-account access to buckets and objects through any public bucket or access point policies: On

Hoffi

Well-known member

I assume you copied both folders the same way? That may be the reason. You need to copy the data folder with public access enabled.

You need to change this. The easiest and fastest way is to use the command-line tool. From the tutorial:

Note: When copying your existing data files across, they will need to be made public. You can do this by setting the ACL to public while copying:

So I've implemented this tutorial on my forums just to find out that attachments are served through PHP at a url like attachments/name.123 instead of a static file thus negating the benefits of a CDN.

Is there a way to allow users to attach images and videos on the forums on remote storage and have it served as a static file instead of PHP?

It doesn't exactly negate the benefits of a CDN if cheaper disk storage is your main concern.

Attachments are served like that so we can apply the permission checks before streaming the content from the CDN. There's no way to change that as it stands but it is a feature we have discussed for inclusion in the software at some point.

Cheaper disk storage is a benefit of course, but if you are using something like s3 that bills you per download (counts as an API request), then having the webserver re-download the attachment from remote storage to serve every page view is going to rack up the bills that way.

I suppose I didn't think about the use case of private attachments, but there really needs to be something to serve public static assets so users don't have to upload to imgur then paste the link.

Chromaniac

Well-known member

Formina Sage

Member

"7. You now need to go to the "IAM" console."

Where is this?

Literally lost already, nothing says IAM.

It's the AWS IAM console.

beerForo

Well-known member

Thanks! I'm now lost here:

Go back to the previous "Add user" page, click the "Refresh" button and search for the policy you just created.

Click "Next", followed by "Create user".

This will give you a key and a secret. Note them down.

I can see the policy under Policies, but there is no Add User on that page. And the Add User page does not show the policy.

EDIT: Figured it out. It would be easier to create the policy from the Policies section first and then go to Add User. Starting in Users is confusing.

beerForo

Well-known member

I get the forbidden errors when trying an avatar.

EDIT:

Caused by pasting in the code blocks from the tutorial with the "xftest" data in them. Not entirely my fault, it doesn't say to plug in your info. But my admin did have a strange orange smilie avatar for a sec.

I think it's good now! Uploaded an avatar.

Kevin

Well-known member

If I don't have confidence using S3cmd can I ftp in binary mode to my computer and then to AWS? Seems it is possible but just want to check it's okay to do.

Heads-up before you try that: you'd need an S3-compatible client instead of just an FTP client. The free version of FileZilla, for example, doesn't support S3 connections, but the paid "Pro" version does.

Depending on the number of files you need to transfer, s3cmd may prove to be the most efficient method (since then it'd be a server-to-server transfer instead of a server-to-client-to-server transfer).

Chromaniac

Well-known member

WinSCP should also work. Cyberduck has a very clunky interface, from what I remember of it.

beerForo

Well-known member

Using S3cmd and connected. Now trying to figure out the command in Linux.

So I cd to the data directory, correct?

Done.

And I run this code?

`s3cmd put * s3://yourfolder --acl-public --recursive`

For "yourfolder" I put data?

Want to make sure I don't do data/data.
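On the data/data worry, it can help to work out where each file should end up before uploading anything. The sketch below is plain Python and does not call s3cmd. The helper `planned_key` and the sample file paths are made up for illustration, and the real key layout depends on how s3cmd treats your arguments, so treat this as the intended layout rather than a guarantee. s3cmd's `--dry-run` option is a way to check what it would actually do.

```python
from pathlib import PurePosixPath


def planned_key(local_path: str, prefix: str) -> str:
    """Return the object key for a path given relative to the current directory.

    Hypothetical helper: joins the destination prefix and the relative path,
    which is the layout you want to end up with in the bucket.
    """
    return str(PurePosixPath(prefix.strip("/")) / PurePosixPath(local_path))


if __name__ == "__main__":
    # Run from INSIDE data/: relative paths start at avatars/, attachments/, ...
    print(planned_key("avatars/l/0/1.jpg", "data"))

    # Run from the PARENT of data/: the relative path already starts with
    # data/, so adding the same prefix again doubles it up.
    print(planned_key("data/avatars/l/0/1.jpg", "data"))
```

The first call gives `data/avatars/l/0/1.jpg` and the second gives `data/data/avatars/l/0/1.jpg`, which is the doubled layout to avoid.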
