Tested this out with DigitalOcean and it works great! The only point of feedback would be to add support for DigitalOcean's Spaces CDN endpoint. CDN comes for free when you use DigitalOcean Spaces, but when you try to use it with this system it gives a 403 error.


Using DigitalOcean Spaces or Amazon S3 for file storage in XF 2.1+


- Thread starter Chris D


Formina Sage

Member

I had this issue as well, but I think it just takes a while for the CDN to propagate all the file permissions or something. I used the normal endpoint, and then after a few days I tried switching it to the CDN endpoint (a custom domain) again and it worked.
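To make the two endpoints in this exchange concrete, here is a small Python sketch. It assumes DigitalOcean's usual hostname pattern (`<space>.<region>.digitaloceanspaces.com` for the origin, with `.cdn.` inserted for the CDN). The Space name `my-forum`, the region `nyc3`, and the function name are made up for illustration. Which of the two hostnames the add-on accepts for uploads is not settled in this thread.

```python
def spaces_urls(space: str, region: str, key: str) -> dict:
    """Return the origin and CDN URLs for one object in a Space.

    Assumption: the standard DigitalOcean hostname layout, not a custom domain.
    """
    key = key.lstrip("/")  # object keys carry no leading slash
    origin_host = f"{space}.{region}.digitaloceanspaces.com"
    cdn_host = f"{space}.{region}.cdn.digitaloceanspaces.com"
    return {
        "origin": f"https://{origin_host}/{key}",
        "cdn": f"https://{cdn_host}/{key}",
    }


if __name__ == "__main__":
    # Hypothetical Space and object key, for illustration only.
    urls = spaces_urls("my-forum", "nyc3", "data/avatars/m/0/1.jpg")
    print(urls["origin"])
    print(urls["cdn"])
```

Fetching the same object through both URLs in a browser is a quick way to see whether a 403 comes from the CDN hostname or from the object's own permissions.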

Make sure you used the public ACL option when you used S3cmd to copy your files.
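As a rough illustration of the public ACL option, the Python sketch below only builds the s3cmd command lines and does not run them. `--acl-public`, `sync`, `setacl`, and `--recursive` are real s3cmd options; the bucket name, paths, and function names are placeholders.

```python
import shlex
from typing import List


def s3cmd_sync_command(local_dir: str, bucket: str, prefix: str) -> List[str]:
    """Build an s3cmd sync command that uploads files with a public-read ACL."""
    source = local_dir.rstrip("/") + "/"           # trailing slash: copy the contents
    target = f"s3://{bucket}/{prefix.strip('/')}/"
    return ["s3cmd", "sync", "--acl-public", source, target]


def s3cmd_fix_acl_command(bucket: str, prefix: str) -> List[str]:
    """Build a command that makes already-uploaded objects public."""
    target = f"s3://{bucket}/{prefix.strip('/')}/"
    return ["s3cmd", "setacl", "--acl-public", "--recursive", target]


if __name__ == "__main__":
    # Hypothetical bucket name; print the commands rather than executing them.
    print(shlex.join(s3cmd_sync_command("data", "my-forum-bucket", "data")))
    print(shlex.join(s3cmd_fix_acl_command("my-forum-bucket", "data")))
```

The second command is for files that were copied without the option and now return 403 when fetched.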

aws n00b...

everything seems to be working as far as i can see from the xf side but i'm getting the 403 on the write to s3. I'm struggling with applying the policy to the bucket. I've made the bucket and the IAM but i can't figure out the correct way to attach it VS the public/private bucket selectors.

Is there a quick ref guide to help guide me? I ran the instructions 4 times and i'm still broken so i'm not getting it... I realize this is a bit outside of supported realm here...
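One possible reading of the question above: the access policy is attached to the IAM user, which is separate from the bucket's public/private selectors. The Python sketch below builds such a policy document as JSON. The action list is an assumption about what uploading, reading, deleting, and setting ACLs needs, not a copy of any official guide, and the bucket name is a placeholder.

```python
import json


def iam_user_policy(bucket: str) -> dict:
    """Build an IAM policy document granting object access to one bucket.

    Assumption: these six actions are enough for the forum software.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "s3:ListBucket",
                    "s3:GetObject",
                    "s3:GetObjectAcl",
                    "s3:PutObject",
                    "s3:PutObjectAcl",
                    "s3:DeleteObject",
                ],
                # ListBucket applies to the bucket ARN, the rest to its objects.
                "Resource": [
                    f"arn:aws:s3:::{bucket}",
                    f"arn:aws:s3:::{bucket}/*",
                ],
            }
        ],
    }


if __name__ == "__main__":
    # Hypothetical bucket name; paste the printed JSON into the IAM policy editor.
    print(json.dumps(iam_user_policy("my-forum-bucket"), indent=4))
```

If writes still fail with 403 after the policy is attached, the bucket's public access settings may be rejecting the public ACL on upload.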


OkanOzturk

Active member

Hello @Chris D,

Can you help with the Backblaze configuration?

XF 2.1 - BackBlaze adapter error

I was working on Backblaze integration, but when I try to upload files I get the error below.

BackBlaze API: https://github.com/RunCloudIO/flysystem-b2

$config['fsAdapters']['data'] = function() { return new \RunCloudIO\FlysystemB2\BackblazeAdapter('xx', 'xx', 'xx'); };

ErrorException...

Chromaniac

Well-known member

so can anyone confirm if there is any alternative to keeping bucket public? this query seems to remain unresolved at the moment.

zaja

Member


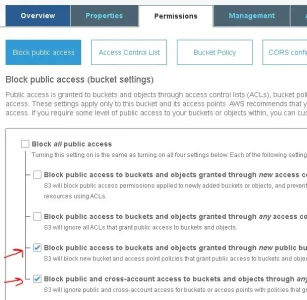

These permission settings work for me; they change the access status to "Objects can be public" (the bucket is not public, but anyone with appropriate permissions can grant public access to objects).

Chromaniac

Well-known member

thanks. another question i had about this integration: is it possible to restrict s3 to certain subfolders like internal_data/attachments and data/attachments? with the data folder placed on s3, the user profile images are fetched directly through the s3 url, which basically leaks the location of the bucket. attachments, on the other hand, are fetched using the xenforo url. this means that user profile images totally bypass any caching integration like cloudflare, and they would break if there is any outage at amazon.

Chromaniac

Well-known member

should be simple enough with s3cmd command i believe?


Thank you! This was my issue as well.

I got everything offloaded now. 16gb! excited to have 5 min backups again instead of 10 hours.

s3cmd was fun to setup on centOS. had to get python, pip, a few packages like dateutil, and finally it worked.

I deleted it... looked old, no issues. probably a relic from a previous install.

Now, onto serving the images... they are coming in uncompressed and causing some headaches on the speedtest reports.

Anyone integrate cloudfront in front of their bucket to enable ondemand compression?


ManagerJosh

Well-known member

Chromaniac

Well-known member

I used the normal endpoint and then after a few days I tried switching it to the CDN endpoint (a custom domain) again and it worked.

whoa. how many days are we talking about here! i have only been able to use the cdn endpoint for the data folder. nothing else works here

'endpoint' => 'https://xxxx.digitaloceanspaces.com'

Hey @Chris D,

It's my first time messing with S3 in any fashion and I was wondering if the configuration for S3 would be identical to S3 Glacier.

Even if it's the same config, using Glacier is probably a mistake. Any hit to your page requesting an image will take a LONG time (hours, maybe?) to deliver the image, and it costs more to pull out.


Chromaniac

Well-known member

right. last i checked, glacier was for archival storage. data that you do not need on demand. any data stored on glacier needs to be requested to be fetched. this could take hours.

I read it like this initially too guys but I don’t think that’s what @ManagerJosh means.

I think he is saying that he already uses S3 Glacier for the proper archival storage purposes and may be somewhat familiar with it and its configuration and was inquiring as to whether standard S3 for the purpose of serving attachments was similar.


ManagerJosh

Well-known member


What @Chris D wrote. I know firsthand that S3 Glacier is more for archival stuff, but the website I'm running, SimsWorkshop.net, has around 4k+ in custom content for The Sims 4. I'm starting the next phase of planning, where maybe the older stuff can be "archived" rather than constantly made available, but again, it's all about planning and exploring the upper limits of XF. I don't foresee needing it in the next 6 months, but that may all change in 2 years' time. Who knows.

Either way, I'm taking the time to think about these things before pressing "the button"

Thinking out loud here...

use this addon product.

cloudfront sources from s3

s3 is configured with aws lifecycle rules to move to glacier after x days https://docs.aws.amazon.com/AmazonS3/latest/dev/object-lifecycle-mgmt.html

create a cron to move the threads that have said attachments (maybe use tags?) to a non-public forum as the attachment will break.

s3 is about $0.023 and Glacier about $0.004 per GB per month, or roughly 5-6x cheaper.

honestly, the cost savings on even 4tb are hardly worth the extra effort. you'd have to have massive data to make it worth moving threads and breaking them for any random bump, in my opinion.
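To put a number on that, here is a back-of-the-envelope Python sketch. It takes the two rates quoted above as US dollars per GB per month, which is an assumption about the poster's units, and it ignores retrieval, request, and transfer fees. The function name is illustrative.

```python
# Rates as quoted in the post above, read as USD per GB per month (assumption).
S3_STANDARD_USD_PER_GB_MONTH = 0.023
GLACIER_USD_PER_GB_MONTH = 0.004


def monthly_storage_cost(gigabytes: float, usd_per_gb_month: float) -> float:
    """Storage-only cost for one month; no retrieval or request fees."""
    return gigabytes * usd_per_gb_month


if __name__ == "__main__":
    gigabytes = 4 * 1024  # the 4 TB mentioned in the post
    s3 = monthly_storage_cost(gigabytes, S3_STANDARD_USD_PER_GB_MONTH)
    glacier = monthly_storage_cost(gigabytes, GLACIER_USD_PER_GB_MONTH)
    print(f"S3: ${s3:.2f}/month")
    print(f"Glacier: ${glacier:.2f}/month")
    print(f"Saved: ${s3 - glacier:.2f}/month")
```

Under these assumptions, moving all 4 TB saves somewhere under $80 a month before retrieval fees, which is in line with the "hardly worth the extra effort" conclusion.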
