Using DigitalOcean Spaces or Amazon S3 for file storage in XF 2.1+

- Thread starter Chris D

Lee

Well-known member

KSA

Well-known member

I wouldn't recommend it. XF expects them to be there, and there are scenarios where we'll complain if they don't exist. Internal data also contains some other stuff which isn't offloaded by default.

We also cannot guarantee that any add-ons (those which haven't followed XF standards) haven't written directly into those directories and therefore won't be offloaded.

You should, however, at minimum be able to remove data/avatars, data/attachments, data/video (in XF 2.1), data/resource_icons and data/xfmg, as well as internal_data/attachments, internal_data/file_check, internal_data/image_cache and internal_data/sitemaps.

What will happen if we decide to upgrade the forum to, let's say, 2.0.x or 2.1? Will the files contained in the data and internal_data folders be automatically copied to the remote storage?

phong.vt

Member

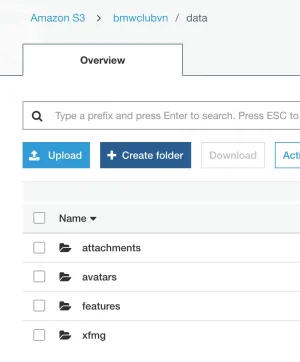

Hello @Chris D, I've found that this add-on conflicts with XenPorta2's "promote thread to features" function: I can't upload the custom thumbnail (which is required; XenPorta2 doesn't let us choose an existing photo as the thumbnail of a featured thread).

Normally, XenPorta creates a "features" folder in "data" to store all those thumbnail images, but with this add-on the images don't get uploaded to AWS.

Please take a look. Thank you.

If an add-on isn’t uploading to or reading from AWS then it means they haven’t been using the abstracted file system properly.

The tools are there. It’s entirely up to add on developers to use them and they really should be.
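For add-on developers wondering what "using the abstracted file system" means in practice, here is a minimal sketch. It assumes it runs inside XenForo 2.x, where `\XF` and `\XF\Util\File` are already loaded. The two function names and the `features` directory are hypothetical and not taken from XenPorta's code.

```php
<?php
// Sketch only: the functions below must be called from inside XenForo 2.x.

/**
 * Stores an uploaded thumbnail through the abstracted file system.
 * Function name and the "features" directory are hypothetical.
 */
function saveFeatureThumbnail($tempFile, $threadId)
{
    // "data://" resolves to whatever adapter $config['fsAdapters']['data']
    // returns, so the same call works for local disk and for S3/Spaces.
    $abstractedPath = sprintf('data://features/%d.jpg', $threadId);

    return \XF\Util\File::copyFileToAbstractedPath($tempFile, $abstractedPath);
}

/**
 * Builds the public URL for the same file.
 */
function featureThumbnailUrl($threadId)
{
    // applyExternalDataUrl() goes through $config['externalDataUrl'] if set
    return \XF::app()->applyExternalDataUrl(
        sprintf('features/%d.jpg', $threadId),
        true
    );
}
```

By contrast, an add-on that writes straight to a local path such as `data/features/1.jpg` with `file_put_contents()` bypasses the adapter entirely, so the file never reaches the bucket.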

Hello,

How can I integrate a Google bucket?

The JSON looks like this:

    {
      "type": "service_account",
      "project_id": "",
      "private_key_id": "",
      "private_key": "-----BEGIN PRIVATE KEY---------END PRIVATE KEY-----\n",
      "client_email": "",
      "client_id": "",
      "auth_uri": "https://accounts.google.com/o/oauth2/auth",
      "token_uri": "https://oauth2.googleapis.com/token",
      "auth_provider_x509_cert_url": "https://www.googleapis.com/oauth2/v1/certs",
      "client_x509_cert_url": "https://www.googleapis.com/robot/v1/metadata/x509/.gserviceaccount.com"
    }

fingon

Active member

Is this correct?

Step 1: install the add-on, Amazon S3 for XenForo 2.0.1.

Step 2: edit config.php:

PHP:

    <?php

    $config['db']['host'] = '127.0.0.1';
    $config['db']['port'] = '3306';
    $config['db']['username'] = 'root';
    $config['db']['password'] = 'root';
    $config['db']['dbname'] = 'mt';

    $config['superAdmins'] = '1';
    $config['enableTfa'] = false;

    // Shared S3 client factory, pointed at the DigitalOcean Spaces endpoint
    $s3 = function()
    {
        return new \Aws\S3\S3Client([
            'credentials' => [
                'key' => '1234',
                'secret' => '5678'
            ],
            'region' => 'sfo2',
            'version' => 'latest',
            'endpoint' => 'https://sfo2.digitaloceanspaces.com'
        ]);
    };

    // Store the contents of data/ in the "xftest" bucket under the "data" prefix
    $config['fsAdapters']['data'] = function() use($s3)
    {
        return new \League\Flysystem\AwsS3v3\AwsS3Adapter($s3(), 'xftest', 'data');
    };

    // Build public URLs for files stored under data/
    $config['externalDataUrl'] = function($externalPath, $canonical)
    {
        return 'https://xftest.sfo2.digitaloceanspaces.com/data/' . $externalPath;
    };

But it doesn't work.

Did I miss something? Composer?

fingon

Active member

I'm trying this on a fresh XF 2.0.12 install with 'Amazon S3 for XenForo 2.0.1'. With this config.php file, uploading an avatar still doesn't work:

PHP:

    <?php

    $config['db']['host'] = 'localhost';
    $config['db']['port'] = '3306';
    $config['db']['username'] = 'root';
    $config['db']['password'] = 'root';
    $config['db']['dbname'] = 'mt';

    $config['fullUnicode'] = true;

    // Store the contents of data/ in the "xftest" bucket under the "data" prefix
    $config['fsAdapters']['data'] = function()
    {
        $s3 = new \Aws\S3\S3Client([
            'credentials' => [
                'key' => '1234',
                'secret' => '4567'
            ],
            'region' => 'sfo2',
            'version' => 'latest',
            'endpoint' => 'https://sfo2.digitaloceanspaces.com'
        ]);

        return new \League\Flysystem\AwsS3v3\AwsS3Adapter($s3, 'xftest', 'data');
    };

    // Build public URLs for files stored under data/
    $config['externalDataUrl'] = function($externalPath, $canonical)
    {
        return 'https://xftest.sfo2.digitaloceanspaces.com/data/' . $externalPath;
    };

I'm not sure what's wrong. Maybe I missed autoloading the AWS SDK?

I copied the lines below into config.php and the whole site went down.

PHP:

    \XFAws\Composer::autoloadNamespaces(\XF::app());
    \XFAws\Composer::autoloadPsr4(\XF::app());
    \XFAws\Composer::autoloadClassmap(\XF::app());
    \XFAws\Composer::autoloadFiles(\XF::app());

You don’t have to do anything other than what the guide asks you to do (it definitely does not tell you to add those autoload lines).

All I can tell you is that the examples you have given appear to be correct so it should just work like it does for everyone else.
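Both configs above only offload `data`. For anyone who also wants `internal_data` in the bucket, as discussed earlier in the thread, a matching adapter might look like the fragment below. This is a sketch that assumes the shared `$s3` closure from the first config is defined earlier in config.php, and it reuses the example bucket name `xftest`.

```php
// Assumption: the $s3 closure from the first config example is defined above.
// 'internal-data' is the mount name; 'internal_data' is the key prefix in the bucket.
$config['fsAdapters']['internal-data'] = function() use($s3)
{
    return new \League\Flysystem\AwsS3v3\AwsS3Adapter($s3(), 'xftest', 'internal_data');
};
```

No `externalDataUrl` equivalent is set here, because files in `internal_data` are not meant to be fetched directly by browsers.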

fingon

Active member

Thanks, I'm still testing to find out what's wrong.

deanna

Member

Hi, new user here. The instructions were great and this worked fine, but I found one little bug in the 2.0.1 version: the ContentType logic is backwards, so everything is uploaded with type 'application/octet-stream', which results in image links being downloaded rather than displayed in the browser. This fixed it for me:

Diff:

    diff -r 0a4e1078630e xenforo/src/addons/XFAws/_vendor/league/flysystem-aws-s3-v3/src/AwsS3Adapter.php
    --- a/xenforo/src/addons/XFAws/_vendor/league/flysystem-aws-s3-v3/src/AwsS3Adapter.php Sun Jan 06 06:03:48 2019 +0000
    +++ b/xenforo/src/addons/XFAws/_vendor/league/flysystem-aws-s3-v3/src/AwsS3Adapter.php Tue Jan 08 04:46:52 2019 +0000
    @@ -553,7 +553,7 @@
             $options = $this->getOptionsFromConfig($config);
             $acl = isset($options['ACL']) ? $options['ACL'] : 'private';
    -        if ( ! isset($options['ContentType']) && is_string($body)) {
    +        if ( ! isset($options['ContentType']) && !is_string($body)) {
                 $options['ContentType'] = Util::guessMimeType($path, $body);
             }

Thanks!
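Note that the patch above flips the check, so a type is now guessed when the body is not a string (for example a stream) but no longer when it is a string. One possible variant is to guess whenever no `ContentType` was supplied, whatever the body is. The following is a self-contained sketch of that idea only, assuming PHP 7+. The function names and the small extension map are hypothetical stand-ins, not the adapter's or Flysystem's actual code.

```php
<?php
// Hypothetical extension map; a real adapter would use a fuller MIME lookup.
const EXTENSION_MIME_TYPES = [
    'jpg'  => 'image/jpeg',
    'jpeg' => 'image/jpeg',
    'png'  => 'image/png',
    'gif'  => 'image/gif',
];

// Guess a MIME type from the file extension, falling back to a generic type.
function guessContentTypeFromPath(string $path): string
{
    $extension = strtolower(pathinfo($path, PATHINFO_EXTENSION));

    return isset(EXTENSION_MIME_TYPES[$extension])
        ? EXTENSION_MIME_TYPES[$extension]
        : 'application/octet-stream';
}

// $body may be a string or a stream resource; it is deliberately not inspected,
// so both kinds of upload get a ContentType unless the caller already set one.
function withContentType(array $options, string $path, $body): array
{
    if (!isset($options['ContentType'])) {
        $options['ContentType'] = guessContentTypeFromPath($path);
    }

    return $options;
}

// Usage: a stream body still ends up with an image type.
$stream = fopen('php://memory', 'r+');
$options = withContentType(['ACL' => 'public-read'], 'avatars/l/0/1.jpg', $stream);
fclose($stream);

echo $options['ContentType'], PHP_EOL; // prints "image/jpeg"
```

Guessing from the path alone is a simplification; it avoids reading the body, which matters for streams that cannot be rewound.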